Can we get rid of AI bias?

Bias is one of the many imperfections of humanity: it causes us to make mistakes and holds us back from growing and innovating. However, bias is not only a human reality but also a reality for artificial intelligence. AI bias is a well-documented phenomenon, widespread among machine learning tools across a variety of sectors, and it is notoriously difficult to get rid of.

In 2020, the Guardian reported that nearly half of councils in Great Britain were using algorithms to help make decisions about benefit (welfare) claims, without having consulted the public about this use of the technology. If any of these algorithms are biased, this could have severe consequences for the disenfranchised in society.

Artificial intelligence is meant to free us from our human limitations. The ability of a machine to scan massive amounts of data and spot trends and patterns in a matter of minutes is an invaluable tool that helps us save time and boost efficiency. After all, machines can not only analyse data much faster than humans, but they can also detect patterns that a human could never find unaided. However, when the artificial intelligence we use is biased, the conclusions we draw from it will be faulty. Let’s go over some of the common types of bias in AI.

AI bias occurs when incorrect assumptions in the machine learning process lead to systematically prejudiced results. This machine learning bias can occur as a result of human bias from the people designing or training the system, or it can result from incomplete or faulty data sets used to train the system.

For example, if you train an algorithm to detect specific skin conditions using a variety of pictures of skin conditions, and the pictures you use are primarily of lighter-skinned people, then this can negatively impact the AI’s ability to detect the same skin conditions on darker skin. No matter what the cause, AI bias has a negative effect on society, from the algorithms we use in hiring practices to the AI used to determine high-risk criminal defendants.

AI bias has a long and complicated history, dating back to the early days of computers and machine learning. Here’s an early example: in 1988, the UK Commission for Racial Equality found a British medical school guilty of discrimination because of bias in a computer program it used. The program unfairly discriminated against women and people with non-European-sounding names when selecting candidates for interview.

Interestingly enough, this computer program was designed to mirror the decisions of the human admissions officers, which it managed to do with 90-95% accuracy. Because the program was developed to mimic human decisions, it unintentionally absorbed the human biases behind them and frequently denied interviews to women and racial minorities.


Artificial intelligence is commonly used in the criminal justice system to flag citizens more likely to be deemed “high-risk”. Because many of these machine learning tools are trained using existing police records, these tools can incorporate human biases into their algorithms.

For example, some police officers have raised concerns that because young black men are more likely to be stopped and searched in the street than young white men, this skews the datasets that are then used for AI crime prediction, which in turn reinforces the racial bias. Concerns have also been raised that because people from disadvantaged backgrounds use public services more frequently, they are over-represented in the data, making them more likely to be flagged as a risk.

Artificial intelligence programs are also commonly used to review job applicants’ resumes in order to recruit top talent. However, these algorithms have been known to display AI bias, resulting in the exclusion of qualified candidates based solely on their race or gender. Amazon stopped using its AI recruitment tool in 2018 once it realized that the program was biased against women.

Amazon’s artificial intelligence tool was trained to detect patterns across CVs, and it noticed that a majority of applicants were men, causing the program to unintentionally favour male applicants. It did so by downgrading applications that included the word “women”, such as applicants who went to a women’s college or participated in a female group or organisation. Although Amazon attempted to filter out this bias in its program, it ultimately scrapped it entirely.
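To make the mechanism concrete, here is a minimal sketch of how a naive scoring model can pick up this kind of bias from historical decisions. It is not Amazon’s system: the `history` data, the keyword sets and the `score` function are all invented for illustration, and the "model" is just the average historical hire rate of each word on a CV.

```python
from collections import Counter

# Invented historical hiring decisions: (keywords on the CV, was the applicant hired?).
# The past decisions are skewed against CVs mentioning "women's".
history = [
    ({"python", "chess"}, True),
    ({"java", "football"}, True),
    ({"python", "football"}, True),
    ({"python", "women's"}, False),
    ({"java", "women's"}, False),
    ({"java", "chess"}, True),
    ({"python", "women's", "chess"}, True),
]


def word_hire_rates(history):
    """Return, for each word, the fraction of CVs containing it that were hired."""
    seen, hired = Counter(), Counter()
    for words, was_hired in history:
        for word in words:
            seen[word] += 1
            hired[word] += int(was_hired)
    return {word: hired[word] / seen[word] for word in seen}


rates = word_hire_rates(history)


def score(cv_words):
    """Score a CV as the mean historical hire rate of its known words."""
    known = [rates[w] for w in cv_words if w in rates]
    return sum(known) / len(known) if known else 0.0


without_term = score({"python", "chess"})
with_term = score({"python", "chess", "women's"})
print(f"without: {without_term:.3f}, with: {with_term:.3f}")
```

Two otherwise identical CVs get different scores purely because one contains a word that past decisions penalised. Nobody programmed the rule; it was learned from the data.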

It turns out that checking whether an applicant frequents a manga forum is a sexist way of choosing employees.

One online tech-hiring platform called Gild went so far as to analyse applicants’ social media presence to produce a hiring score. This took into account activity on tech sites such as GitHub, but the algorithm also gave extra points to applicants who had been active on a particular Japanese manga forum, which had a predominantly male user base. The reasoning was that interest in manga was a strong predictor of coding ability, but the developers neglected to consider the implicit gender bias that they were introducing into hiring practices, and the results were distorted accordingly.

Many natural language processing (NLP) models are trained on real-world data. Since news articles and literature are typically male-focused, the models inherit this bias.

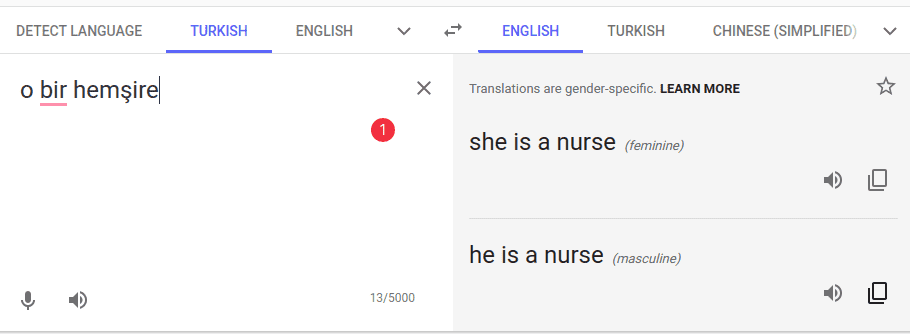

Here’s an example of an NLP AI bias.

In the past, Google Translate rendered the gender-neutral Turkish phrase ‘o bir hemşire’ as ‘she is a nurse’, while ‘o bir doktor’ was translated as ‘he is a doctor’. The pronoun ‘o’ is gender-neutral and can be translated equally as ‘he’ or ‘she’.

Fortunately, when I checked in 2023, Google Translate had been modified to offer both masculine and feminine translations.

Google Translate now correctly offers both options for translations from gender-neutral language, instead of defaulting to female or male pronouns. Image source: Google

So what was the cause of the original biased translations?

Well, machine translation algorithms are trained on corpora, which are large bodies of text consisting of news articles, literature and any other kind of content. The machine translation models are statistics-based, meaning that they rely on the probabilities of particular sentences occurring in a language. A sentence such as ‘she is a nurse’ would have been calculated as more likely than ‘he is a nurse’, perhaps because the female version appeared more often than the male version in the corpus that Google Translate was trained on.
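As a rough illustration of that reasoning, the sketch below picks between candidate translations purely by how often each sentence occurs in a toy corpus. The corpus is invented and real translation systems are far more sophisticated, so treat this as a simplified picture of the statistical idea rather than a description of Google Translate.

```python
from collections import Counter

# Toy corpus, invented for illustration; not real training data.
corpus = [
    "she is a nurse",
    "she is a nurse",
    "she is a nurse",
    "he is a nurse",
    "he is a doctor",
    "he is a doctor",
    "she is a doctor",
]

sentence_counts = Counter(corpus)


def pick_translation(candidates):
    """Return the candidate seen most often in the corpus and its relative frequency."""
    total = sum(sentence_counts[c] for c in candidates)
    best = max(candidates, key=lambda c: sentence_counts[c])
    return best, sentence_counts[best] / total


# Both candidates are valid translations of a gender-neutral source sentence.
nurse_choice, nurse_prob = pick_translation(["he is a nurse", "she is a nurse"])
doctor_choice, doctor_prob = pick_translation(["he is a doctor", "she is a doctor"])

print(nurse_choice, round(nurse_prob, 2))
print(doctor_choice, round(doctor_prob, 2))
```

A system that always returns the single most likely candidate turns a statistical tendency in the corpus into a hard rule in its output.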

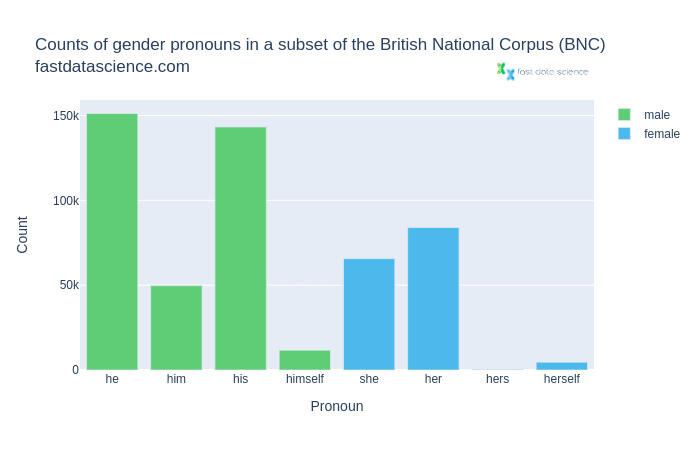

As an example, let’s look at the British National Corpus, one of the best known corpora for British English.

Counts of gendered pronouns in the British National Corpus. Male pronouns such as he, him, his and himself are much more common than female pronouns.

We can see that in general, the male pronouns are much more common than their female counterparts.

Taking this imbalance into account, it’s no wonder that machine translation algorithms are absorbing and further propagating a gender bias in translated texts.
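If you want to run a similar check on your own text, a count like the one above can be sketched in a few lines. The male pronoun list is the one given in the caption; the female list (`she`, `her`, `hers`, `herself`) and the sample sentence are my own assumptions for illustration, and the simple regular-expression tokeniser is much cruder than what you would use on a real corpus.

```python
import re
from collections import Counter

MALE = {"he", "him", "his", "himself"}
FEMALE = {"she", "her", "hers", "herself"}  # assumed counterpart list


def count_gendered_pronouns(text):
    """Count male and female pronouns in a text, ignoring case and punctuation."""
    tokens = re.findall(r"[a-z']+", text.lower())
    counts = Counter(t for t in tokens if t in MALE or t in FEMALE)
    return {
        "male": sum(counts[w] for w in MALE),
        "female": sum(counts[w] for w in FEMALE),
    }


# Invented sample text, standing in for a real corpus.
sample = "He gave his report to her. She thanked him, and he left by himself."
pronoun_counts = count_gendered_pronouns(sample)
print(pronoun_counts)
```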

Although AI bias is a serious problem that affects the accuracy of many machine learning programs, it may also be easier to deal with than human bias in some ways. Unlike human bias, which is often unconscious and unnoticed, AI bias is much easier to spot. Algorithms can be audited for bias far more easily than people can, and doing so often reveals unnoticed human bias in the datasets fed into the system. This can help us detect systemic bias, alter our approach to collecting data, and reduce bias in AI models.

Although fixing AI bias is a difficult proposition, there are ways to reduce bias in AI models and algorithms. By testing algorithms in settings similar to those in which they will be used in the real world, we can check that the AI is detecting appropriate patterns without incorporating unconscious bias. Developers will also have to be careful to make sure that the datasets they use to train machine learning models are both free of bias and accurately representative of all races and genders.

Researchers have made attempts to define “fairness” in AI algorithms by either requiring AI models to have equal predictive value across all groups or by requiring them to have equal false positive and false negative rates. They have even gone so far as to incorporate counterfactual fairness into their AI models, in which they test to make sure the outcomes would be the same in a world in which commonly sensitive attributes such as race or gender were changed.
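The fairness definitions above can be sketched as simple per-group measurements. The code below is a minimal illustration under stated assumptions: the records, the group labels and `toy_model` are invented, and `attribute_flip_changes` is only a naive attribute-flip test. Full counterfactual fairness needs a causal model of how the sensitive attribute influences the other features, which this sketch does not attempt.

```python
from collections import defaultdict


def group_rates(records):
    """Compute FPR, FNR and PPV per group from (group, y_true, y_pred) triples."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, y_true, y_pred in records:
        if y_pred == 1:
            key = "tp" if y_true == 1 else "fp"
        else:
            key = "fn" if y_true == 1 else "tn"
        counts[group][key] += 1

    def ratio(num, den):
        # Undefined (None) when the group has no cases in the denominator.
        return num / den if den else None

    return {
        group: {
            "fpr": ratio(c["fp"], c["fp"] + c["tn"]),  # false positive rate
            "fnr": ratio(c["fn"], c["fn"] + c["tp"]),  # false negative rate
            "ppv": ratio(c["tp"], c["tp"] + c["fp"]),  # predictive value
        }
        for group, c in counts.items()
    }


def attribute_flip_changes(model, rows, attribute, swap):
    """Count rows whose prediction changes when only `attribute` is swapped."""
    changed = 0
    for row in rows:
        flipped = {**row, attribute: swap[row[attribute]]}
        changed += int(model(row) != model(flipped))
    return changed


# Invented predictions for two groups: (group, true label, predicted label).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("A", 0, 1),
    ("B", 1, 1), ("B", 1, 0), ("B", 0, 0), ("B", 0, 0),
]
rates = group_rates(records)


def toy_model(row):
    """Hypothetical biased model: passes high scores, or anyone marked 'm'."""
    return 1 if row["score"] >= 50 or row["gender"] == "m" else 0


rows = [
    {"score": 60, "gender": "f"},
    {"score": 40, "gender": "f"},
    {"score": 40, "gender": "m"},
]
flips = attribute_flip_changes(toy_model, rows, "gender", {"f": "m", "m": "f"})

print(rates)
print("predictions changed by flipping gender:", flips)
```

In this toy data, group A has the higher false positive rate and group B the higher false negative rate, so the model fails the equal-error-rate definition, and the flip test shows the sensitive attribute directly changing outcomes.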

In a previous blog post, I have advocated introducing a standard of penetration testing, where AI algorithms are stress-tested for bias and a tester attempts to reconstruct protected characteristics which have been removed from training data.
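One simple form such a test could take is sketched below: the tester tries to guess a removed protected characteristic from each remaining feature, and compares the accuracy with a baseline of always guessing the most common value. Everything here is an assumption for illustration (the `train` and `test` rows, the feature names, and the majority-vote guesser); a real test would use a stronger attack model and far more data.

```python
from collections import Counter, defaultdict


def proxy_accuracy(train, test, feature, protected):
    """Guess `protected` from `feature` by majority vote and return test accuracy."""
    votes = defaultdict(Counter)
    for row in train:
        votes[row[feature]][row[protected]] += 1
    # Fallback for feature values never seen in training.
    fallback = Counter(r[protected] for r in train).most_common(1)[0][0]

    correct = 0
    for row in test:
        seen = votes.get(row[feature])
        guess = seen.most_common(1)[0][0] if seen else fallback
        correct += int(guess == row[protected])
    return correct / len(test)


def majority_baseline(rows, protected):
    """Accuracy of always guessing the most common protected value."""
    counts = Counter(r[protected] for r in rows)
    return counts.most_common(1)[0][1] / len(rows)


# Invented applicant records; "gender" is the attribute removed from training data.
train = [
    {"college": "womens", "hobby": "chess", "gender": "f"},
    {"college": "womens", "hobby": "football", "gender": "f"},
    {"college": "mixed", "hobby": "chess", "gender": "m"},
    {"college": "mixed", "hobby": "football", "gender": "m"},
    {"college": "mixed", "hobby": "chess", "gender": "f"},
    {"college": "mixed", "hobby": "football", "gender": "m"},
    {"college": "mixed", "hobby": "football", "gender": "m"},
]
test = [
    {"college": "womens", "hobby": "chess", "gender": "f"},
    {"college": "mixed", "hobby": "football", "gender": "m"},
    {"college": "mixed", "hobby": "chess", "gender": "m"},
    {"college": "womens", "hobby": "football", "gender": "f"},
]

baseline = majority_baseline(test, "gender")
college_acc = proxy_accuracy(train, test, "college", "gender")
hobby_acc = proxy_accuracy(train, test, "hobby", "gender")

print(f"baseline={baseline}, college={college_acc}, hobby={hobby_acc}")
```

A feature that beats the baseline by a wide margin, like `college` in this toy data, is acting as a proxy: removing the protected column did not remove the information.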

Ultimately, the best way to reduce bias in AI models is for both the people training the artificial intelligence and the people testing it to be mindful of any potential bias, and of course to maintain diversity in the teams of developers working on the algorithm. By keeping an eye out for unconscious bias at both ends, developers can quickly spot inaccuracies and make the necessary changes.

Cathy O’Neil, How algorithms rule our working lives, The Guardian (2016)

Caroline Criado Perez, Invisible Women (2019)

The British National Corpus, version 3 (BNC XML Edition). 2007. Distributed by Bodleian Libraries, University of Oxford, on behalf of the BNC Consortium. URL: http://www.natcorp.ox.ac.uk/

Marsh and McIntyre, Nearly half of councils in Great Britain use algorithms to help make claims decisions, The Guardian, 2020
