In recent weeks a number of Apple Card users in the US have been reporting that they and their partners have been allocated vastly different credit limits on the branded credit card, despite having the same income and credit score (see BBC article). Steve Wozniak, a co-founder of Apple, tweeted that his credit limit on the card was ten times higher than his wife’s, despite the couple having the same credit limit on all their other cards.

The Department of Financial Services in New York, a financial services regulator, is investigating allegations that the users’ gender may be the basis of the disparity. Apple is keen to point out that Goldman Sachs is responsible for the algorithm, which seems at odds with Apple’s marketing slogan ‘Created by Apple, not a bank’.

Since the regulator’s investigation is ongoing and no bias has yet been proven, I am writing only in hypotheticals in this article.

The Apple Card story isn’t the only recent example of algorithmic bias hitting the headlines. In July last year the NAACP (National Association for the Advancement of Colored People) in the US signed a statement requesting a moratorium on the use of automated decision-making tools, since some of them have been shown to have racial bias when used to predict recidivism - in other words, how likely an offender is to re-offend.

In 2013, Eric Loomis was sentenced to six years in prison, after the state of Wisconsin used a program called COMPAS to calculate his odds of committing another crime. COMPAS is a proprietary algorithm whose inner workings are known only to its vendor Equivant. Loomis attempted to challenge the use of the algorithm in Wisconsin’s Supreme Court but his challenge was ultimately denied.

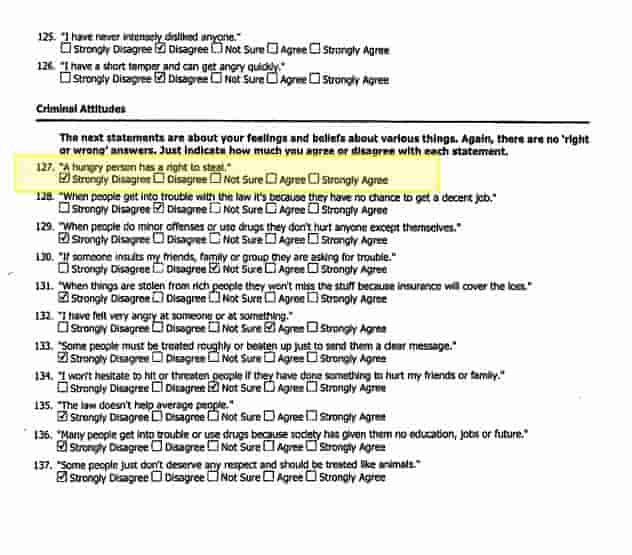

A screenshot of the questionnaire that a suspect fills out, which the COMPAS model uses to predict recidivism risk. The COMPAS model has been accused of AI bias. Image source and full document.

Unfortunately, incidents such as these only worsen the widely held perception of AI as a dangerous tool: opaque, under-regulated and capable of encoding the worst of society’s prejudices.

I will focus here on the example of a loan application, since it is a simpler problem to frame and analyse, but the points I make are generalisable to any kind of bias and protected category.

I would like to point out first that I strongly doubt that anybody at Apple or Goldman Sachs has sat down and created an explicit set of rules that take gender into account for loan decisions.

Let us first of all imagine that we are creating a machine learning model which predicts the probability of a person defaulting on a loan. There are a number of ‘protected categories’, such as gender, which we are not allowed to discriminate on.

Developing and training a loan decision AI is the kind of ‘vanilla’ data science problem that routinely pops up on Kaggle (a website that lets you participate in data science competitions) and which aspiring data scientists can expect to be asked about in job interviews. The recipe to make a robot loan officer is as follows:

Imagine you have a large table of 10,000 rows, each describing a loan applicant that your bank has seen in the past:

| age | income | credit score | gender | education level | number of years at employer | job title | did they default? |
| --- | --- | --- | --- | --- | --- | --- | --- |
| 38 | 28000 | 460 | M | BSc | 2 | Nurse | No |


The final column is what we want to predict.

You would take this data, and split the rows into three groups, called the training set, the validation set and the test set.

You then pick a machine learning algorithm, such as Logistic Regression, Random Forest or Neural Networks, and let it ‘learn’ from the training rows without letting it see the validation rows. You then test it on the validation set. You rinse and repeat for different algorithms, tweaking the algorithms each time, and the model you will eventually deploy is the one that scored the highest on your validation rows.

When you have finished you are allowed to test your model on the test dataset and check its performance.
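The recipe above can be sketched in a few lines of plain Python. This is only an illustration under my own assumptions: the function names, the 60/20/20 split and the synthetic rows are made up for the example, and the ‘models’ are fixed credit score rules standing in for real algorithms that would be fitted on the training rows.

```python
import random

def three_way_split(rows, val_frac=0.2, test_frac=0.2, seed=0):
    """Shuffle rows and split them into train, validation and test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_frac)
    n_val = int(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test:n_test + n_val]
    train = shuffled[n_test + n_val:]
    return train, val, test

def accuracy(model, rows):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(features) == label for features, label in rows) / len(rows)

def select_model(candidates, val):
    """Return the name of the candidate with the highest validation accuracy."""
    return max(candidates, key=lambda name: accuracy(candidates[name], val))

# Synthetic data (an assumption for this sketch): applicants default
# exactly when their credit score is below 500.
rows = [({"credit_score": s}, s < 500) for s in range(300, 800, 5)]
train, val, test = three_way_split(rows)

# Stand-ins for trained models: each one predicts "will default" (True/False).
candidates = {
    "threshold_450": lambda f: f["credit_score"] < 450,
    "threshold_500": lambda f: f["credit_score"] < 500,
}

best = select_model(candidates, val)           # chosen on validation rows only
print(best, accuracy(candidates[best], test))  # test rows are touched once, at the end
```

The point of the structure is that the test rows play no part in choosing the model, so the final number is an honest estimate of how the deployed model might perform.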

Now obviously if the ‘gender’ column was present in the training data, then there is a risk of building a biased model.

However the Apple/Goldman data scientists probably removed that column from their dataset at the outset.

So how can the digital money lender still be gender biased? Surely there’s no way for our algorithm to be sexist, right? After all it doesn’t even know an applicant’s gender!

Unfortunately and counter-intuitively, it is still possible for bias to creep in!

There might be information in our dataset that is a proxy for gender. For example: tenure in current job, salary and especially job title could all correlate with our applicant being male or female.

If it’s possible to train a machine learning model on your sanitised dataset to predict the gender noticeably better than chance, then you are running the risk of your model accidentally being gender biased. Your loan prediction model could learn to use the implicit hints about gender in the dataset, even if it can’t see the gender itself.

I would like to propose an addition to the workflow of AI development: we should attack our AI from different angles, attempting to discover any possible bias, before deploying it.

It’s not enough just to remove the protected categories from your dataset, dust off your hands and think ‘job done’.

We also need to play devil’s advocate when we develop an AI, and instead of just attempting to remove causes of bias, we should attempt to prove the presence of bias.

If you are familiar with the field of cyber security, then you will have heard of the concept of a pen-test or penetration test. A person who was not involved in developing your system, perhaps an external consultant, attempts to hack your system to discover vulnerabilities.

I propose that we should introduce AI pen-tests: an analogue of the security pen-test, aimed at uncovering and eliminating AI bias.

To pen-test an AI for bias, either an external person, or an internal data scientist who was not involved in the algorithm development, would attempt to build a predictive model to reconstruct the removed protected categories.

So returning to the loan example, if you have scrubbed out the gender from your dataset, the pen-tester would try his or her hardest to make a predictive model to put it back. Perhaps you should pay them a bonus if they manage to reconstruct the gender with any degree of accuracy, reflecting the money you would otherwise have spent on damage control, had you unwittingly shipped a sexist loan prediction model.
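As a minimal sketch of what such a pen-test could look like, the ‘attacker’ below tries to guess the removed gender from a single remaining column and compares its accuracy with the trivial strategy of always guessing the most common gender. Everything here is hypothetical: the function names, the choice of `job_title` as the proxy and the toy rows are assumptions for illustration, and a real pen-tester would use a proper classifier over all remaining columns.

```python
from collections import Counter, defaultdict

def majority_baseline(labels):
    """Accuracy of always guessing the most common label."""
    return Counter(labels).most_common(1)[0][1] / len(labels)

def fit_lookup(train, feature):
    """Fit a one-feature attacker: the most common gender per feature value."""
    votes = defaultdict(Counter)
    for row in train:
        votes[row[feature]][row["gender"]] += 1
    # Unseen feature values fall back to the overall most common gender.
    fallback = Counter(r["gender"] for r in train).most_common(1)[0][0]
    table = {value: c.most_common(1)[0][0] for value, c in votes.items()}
    return lambda row: table.get(row[feature], fallback)

def pen_test(train, held_out, feature):
    """Return (attacker accuracy, baseline accuracy) on the held-out rows."""
    attacker = fit_lookup(train, feature)
    hits = sum(attacker(r) == r["gender"] for r in held_out)
    baseline = majority_baseline([r["gender"] for r in held_out])
    return hits / len(held_out), baseline

def make_rows(job_title, gender, count):
    """Helper that builds synthetic rows for the toy example."""
    return [{"job_title": job_title, "gender": gender} for _ in range(count)]

# Toy data (made up): job title is deliberately correlated with gender.
train = (make_rows("Nurse", "F", 8) + make_rows("Nurse", "M", 2)
         + make_rows("Engineer", "M", 8) + make_rows("Engineer", "F", 2))
held_out = (make_rows("Nurse", "F", 4) + make_rows("Nurse", "M", 1)
            + make_rows("Engineer", "M", 4) + make_rows("Engineer", "F", 1))

attacker_acc, baseline_acc = pen_test(train, held_out, "job_title")
print(attacker_acc, baseline_acc)
```

If the attacker clearly beats the baseline on held-out rows, the supposedly sanitised dataset still leaks gender, and the loan model could be picking up the same signal.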

In addition to the pen-test above, I suggest the following further checks:

Segment the data into genders. Evaluate the accuracy of the model for each gender.

Identify any tendency to over- or underestimate the probability of default for either gender.

Identify any difference in model accuracy by gender.
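These checks are easy to automate. The sketch below assumes each row carries the applicant’s gender (kept aside for auditing only, not used by the model), the model’s predicted probability of default and the true outcome. The function name, the 0.5 decision threshold and the toy numbers are my own assumptions for illustration.

```python
def group_report(rows, threshold=0.5):
    """Per-gender accuracy and calibration gap for a default-probability model.

    Each row is (gender, predicted_probability, defaulted). A positive
    calibration gap means the model overestimates default risk for that group;
    a negative gap means it underestimates it.
    """
    report = {}
    for gender in sorted({g for g, _, _ in rows}):
        group = [(p, y) for g, p, y in rows if g == gender]
        n = len(group)
        accuracy = sum((p >= threshold) == y for p, y in group) / n
        mean_predicted = sum(p for p, _ in group) / n
        observed_rate = sum(y for _, y in group) / n
        report[gender] = {
            "n": n,
            "accuracy": accuracy,
            "calibration_gap": mean_predicted - observed_rate,
        }
    return report

# Synthetic predictions (made up for illustration).
rows = [
    ("F", 0.75, True), ("F", 0.75, False), ("F", 0.25, False), ("F", 0.25, False),
    ("M", 0.75, True), ("M", 0.25, False), ("M", 0.25, True), ("M", 0.25, False),
]

report = group_report(rows)
for gender, stats in report.items():
    print(gender, stats)
```

In this toy data the accuracy is identical for both groups, yet the model overestimates the default risk for women and underestimates it for men, which is why accuracy alone is not a sufficient check.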

I have not covered some of the more obvious causes of AI bias. For example it is possible that the training data itself is biased. This is highly likely in the case of some of the algorithms used in the criminal justice system.

Let’s assume that you have discovered that the algorithm you have trained does indeed exhibit a bias for a protected category such as gender. Your options to mitigate this are:

One application of this approach that I would be interested in investigating further, is how to eliminate bias if you are using machine learning for recruitment. Imagine you have an algorithm matching CVs to jobs. If it inadvertently spots gaps in people’s CVs that correspond to maternity leave and therefore gender, we run the risk of a discriminatory AI. I imagine this could be compensated for by some of the above suggestions, such as tweaking the training data and artificially removing this kind of signal. I think that the pen-test would be a powerful tool for this challenge.

Today large companies are very much aware of the potential for bad PR to go viral. So if the Apple Card algorithm is indeed biased I am surprised that nobody checked the algorithm more thoroughly before shipping it.

A loan limit differing by a factor of 10 depending on gender is an egregious error.

Had the data scientists involved in the loan algorithm, or indeed the recidivism prediction algorithm used by the state of Wisconsin, followed my checklist above for pen-testing and stress testing their algorithms, I imagine they would have spotted the PR disaster before it had a chance to make headlines.

Of course it is easy to point fingers after the fact, and the field of data science in big industry is as yet in its infancy. Some would call it a Wild West of under-regulation, and regulators around the world are working on AI ethics frameworks.

I think we can also be glad that some conservative industries such as healthcare have not yet adopted AI for important decisions. Imagine the fallout if a melanoma-analysing algorithm, or amniocentesis decision making model, turned out to have a racial bias.

For this reason I would strongly recommend that large companies releasing algorithms into the wild to make important decisions set up a separate team of data scientists whose job is not to develop algorithms, but to pen-test and stress-test them.

The data scientists developing the models are under too much time pressure to be able to do this themselves, and as the cybersecurity industry has discovered through years of experience, sometimes it is best to have an external person play devil’s advocate and try to break your system.
