What are artificial neural networks and how do they learn? What do we use them for? What are some examples of artificial neural networks? How do we use neural networks?

In my day job I use neural networks to do things like turning clinical trial plans in PDF format into a high or low risk rating, or matching mental health questionnaires such as GAD-7 to equivalents in other languages and countries.

You will have heard terms like artificial neural networks but you may be unsure what they do. Are they really all they are hyped up to be?

Neural networks are a way you can get a program to write itself. Let’s imagine you want to write a program to identify whether a person is smiling or frowning.

Back in the nineties or early 2000s, people would have approached this by writing a long complicated list of rules to identify from the pixels of an image whether it is a smile or a frown.

Nowadays, there is a much better way to do it but you need a lot of competitional power. You would collect 1000 images of people smiling and 1000 images of people frowning and you’d decide that there’s a formula to get from the values in the pixels to a 1 for Smile and a 0 for Frown… just that we don’t know the formula!

No worries, we can just try a lot of different formulas. The one that gets the smiles and frowns right is the correct one.

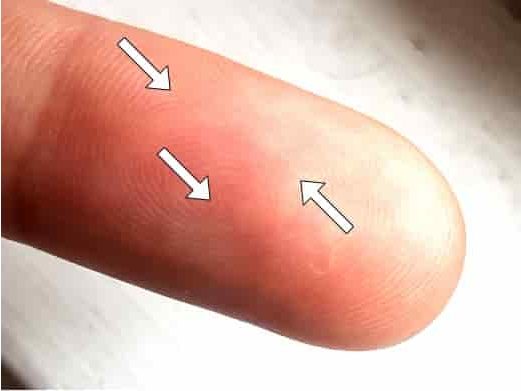

The idea of writing instructions to describe how to interpret an image seems far-fetched. But one of the best-known algorithms for fingerprint recognition, the Bozorth algorithm, is 1775 lines of code describing how to find minutiae (features such as junctions and dead ends) in a fingerprint image. You can marvel at the Bozorth code on Github.

Training neural networks

First we start with some assumptions. We don’t allow just any formula. Normally our formula will involve taking the pixel values in the image and repeatedly summing them, multiplying them by some unknown values called weights, adding some other unknown values called biases, setting them to zero if they are negative, until we get a single number out. A neural network for images might do this about 20 times. This would be called a Convolutional Neural Network (CNN) with 20 layers.

So if your original image was greyscale and 100×100 pixels (10,000 numbers), the neural network will combine them over its 20 layers to get a single value between 0 and 1.

So the unknown ingredients in our neural network are the weights and biases, which together are called parameters. How do we find them?

Well we start with a random guess. Let’s set all our parameters to random numbers.

Then we can see how well our model can distinguish between smiles and frowns. The chances are that it performs no better than a coin toss, because that’s essentially what we have done: we’ve made a random function.

But here’s the genius: we can use a mathematical trick called calculus, which is the mathematics of change, to find out how much each weight or bias needs to change to make our model get better at distinguishing smiles from frowns. And at this point pretty much any change will improve it because it is completely useless.

(Calculus was discovered by two of the great scientists of the 17th Century, Isaac Newton and Gottfried Wilhelm Leibniz. They fought over who got there first and who plagiarised who, but neither could have guessed how neural networks would be used to recommend memes on social media today.)

Newton. Public domain image.

Leibniz. Public domain image.

We then adjust the parameters a tiny bit in the direction that would improve the model’s performance and make it better at identifying smiles and frowns on our 1000 images.

We’d keep doing this adjustment thousands of times. If we’ve set everything up correctly then we’d expect the model to gradually get better at recognising grins and grimaces.

In practice the model doesn’t output exactly 1 or 0. It will give messy numbers such as 0.86532. We can calculate a number called a Loss Function, which is how far these values are from the correct 0s and 1s. Over time the Loss Function will get smaller.

The example I’ve described above is for a CNN. CNNs were developed for image classification. But neural networks can do many other things.

A hot topic right now is Transformers, which are very good at language data (text, sound files, etc). They work by going along a sequence of words and keeping attention on other words elsewhere in the sentence that are pertinent for the current work. The most popular languages on Google Translate now use Transformer networks, and OpenAI’s GPT-3 is an extremely powerful and sophisticated Transformer network.

You can also have LSTMs, or Long Short Term Memory networks, which are good at remembering information from earlier in a document.

Transformers and LSTMs are also good at speech recognition.

I’ve written about how similar neural networks are to the human brain here.

Unleash the potential of your NLP projects with the right talent. Post your job with us and attract candidates who are as passionate about natural language processing.

Hire NLP Experts

Fast Data Science appeared at the Hamlyn Symposium event on “Healing Through Collaboration: Open-Source Software in Surgical, Biomedical and AI Technologies” Thomas Wood of Fast Data Science appeared in a panel at the Hamlyn Symposium workshop titled “Healing Through Collaboration: Open-Source Software in Surgical, Biomedical and AI Technologies”. This was at the Hamlyn Symposium on Medical Robotics on 27th June 2025 at the Royal Geographical Society in London.

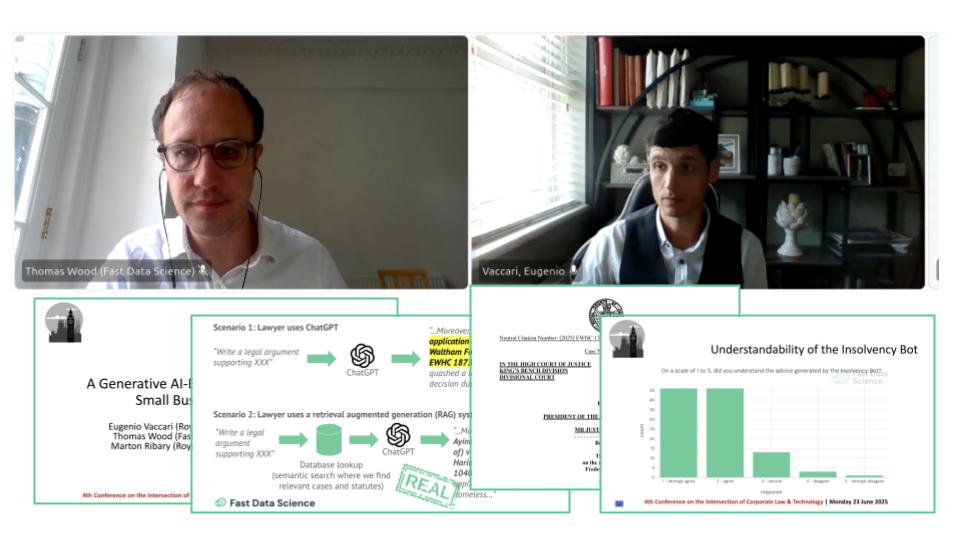

We presented the Insolvency Bot at the 4th Annual Conference on the Intersection of Corporate Law and Technology at Nottingham Trent University Dr Eugenio Vaccari of Royal Holloway University and Thomas Wood of Fast Data Science presented “A Generative AI-Based Legal Advice Tool for Small Businesses in Distress” at the 4th Annual Conference on the Intersection of Corporate Law and Technology at Nottingham Trent University

What is generative AI consulting? We have been taking on data science engagements for a number of years. Our main focus has always been textual data, so we have an arsenal of traditional natural language processing techniques to tackle any problem a client could throw at us.

What we can do for you