Natural Language Processing (NLP) is the area of artificial intelligence dealing with human language and speech. It sits at the crossroads of a diverse range of disciplines, from linguistics to computer science and engineering, and of course, AI.

NLP involves teaching computers how to speak, write, listen to, and interpret human language. If you’ve used a search engine, a GPS navigation system, or Amazon Echo today, you’ve already interacted with an NLP system. NLP has been around for decades, and NLP models have recently become much more powerful thanks to the advent of deep learning and neural networks. NLP is a fascinating area of AI and has enormous potential to change the way we live, play, and work.

Here are some of the areas which are on the cusp of being transformed by natural language processing.

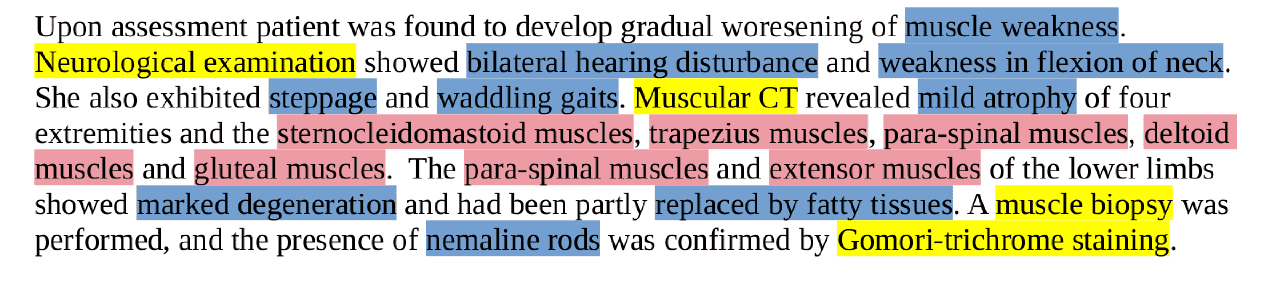

Many countries’ healthcare systems are moving away from paper records towards a system of Electronic Medical Records (EMR). This has created a wealth of analytics-driven opportunities to improve healthcare delivery and outcomes.

However, a significant challenge for healthcare systems is to use this data to its full potential. Electronic Medical Records contain a lot of unstructured data in text format. It is much harder to perform analytics on text data than on the structured data which is common in other industries.

For this reason, healthcare organisations are beginning to adopt NLP to gain insights into health records and other text data.

NLP can analyse Electronic Medical Records to improve stroke prevention and treatment, for example by helping physicians decide when to administer intravenous thrombolysis (clot-dissolving medication given through a drip).

Predicting the likelihood of certain adverse events such as heart attacks and strokes, and the subsequent progression of these diseases, is difficult to achieve with any accuracy. Often the most pertinent information in a patient’s history is held in free text, and it can be hard to incorporate that information into any kind of predictive model.

In 2018, a team of researchers in a neurology department in Taiwan developed an NLP system which could process electronic medical records to help clinicians determine which stroke patients should receive intravenous thrombolysis (medication to dissolve blood clots).

They found that their model significantly improved both decision making and quality of care.

The World Health Organisation has estimated that suicide is among the top 10 causes of death worldwide, and every death by suicide is likely to affect the lives of 138 people. Often, a person’s contact with health professionals is not frequent enough for signs of suicide risk to be spotted in time. In addition, most standard methods of risk assessment require the individual to disclose their risk of self-harm to a professional.

The availability of social media has offered new opportunities to analyse and understand a person’s risk. A team in Boston, USA, has developed a deep learning natural language processing model to detect signals in a person’s social media use which indicate high risk. In addition, Facebook has a suicide prevention AI, which scans posts on the platform to assess risk.


Needless to say, the applications in this area have raised unease in some quarters, with many observers concerned about the privacy implications and about the worst-case scenario of a malicious actor obtaining suicide risk data and using it to encourage vulnerable individuals to take their own lives.

Facebook’s algorithm has been prevented from being deployed in the EU because it does not comply with the GDPR’s rules about consent. However, many academics and privacy experts have stated that the potential of this technology for public good outweighs the privacy concerns.

The pharmaceutical industry has faced many new challenges over the last three decades. While new technologies have emerged which accelerate the drug discovery and development process, pharma remains a high-risk industry. Almost 90% of drugs which reach phase 1 clinical trials never reach the market because they are unsafe or ineffective. The entire process of bringing a drug to market takes an average of 12 years and costs up to $3 billion.

Doctors fighting to save six trial participants in the infamous ‘Elephant Man’ drugs trial, run by Parexel in London in 2006. Unexpected side effects of the drug being tested, TGN1412, caused lasting injuries for some participants. Image source: BBC.

At all stages in the drug development process, from drug discovery through to human trials, a wealth of safety-relevant information is buried in unstructured text. Internal safety reports, medical literature, electronic medical records, social media, and conference proceedings can all be mined for key safety information such as reports of adverse events, side effects, dosage information, and other data. NLP models are able to transform this information into structured data which can be incorporated into analytics and decision-making processes.

This allows researchers to act on the best information available and identify critical safety issues earlier, reducing the pharma company’s losses if the drug does not make it to market.
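To make the idea of turning free text into structured safety data more concrete, here is a minimal Python sketch of the simplest possible approach: dictionary (lexicon) lookup for adverse events plus a regular expression for doses. The lexicon `AE_LEXICON`, the `SafetyRecord` structure and the function `extract_safety_records` are hypothetical illustrations, not a description of any particular production system. Real pharmacovigilance pipelines typically rely on a curated terminology such as MedDRA and on trained named-entity recognition models rather than a hand-written word list.

```python
import re
from dataclasses import dataclass
from typing import List

# Hypothetical mini-lexicon mapping surface terms to a normalised adverse-event label.
# A real system would use a curated terminology (e.g. MedDRA) and a trained NER model.
AE_LEXICON = {
    "headache": "Headache",
    "nausea": "Nausea",
    "vomiting": "Vomiting",
    "rash": "Rash",
    "cytokine release": "Cytokine release syndrome",
}

# Matches doses such as "10 mg", "0.1 mg/kg" or "250 mcg".
DOSE_PATTERN = re.compile(r"\b(\d+(?:\.\d+)?)\s*(mg/kg|mg|mcg|ml)\b", re.IGNORECASE)


@dataclass
class SafetyRecord:
    sentence: str
    adverse_events: List[str]
    doses: List[str]


def extract_safety_records(text: str) -> List[SafetyRecord]:
    """Return one structured record per sentence that mentions a known adverse event."""
    records = []
    # Naive sentence split: terminal punctuation followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", text.strip()):
        lowered = sentence.lower()
        # Whole-word matches only, so "rash" does not fire on "crash".
        events = sorted(
            {label for term, label in AE_LEXICON.items()
             if re.search(rf"\b{re.escape(term)}\b", lowered)}
        )
        if events:
            doses = [f"{value} {unit.lower()}" for value, unit in DOSE_PATTERN.findall(sentence)]
            records.append(SafetyRecord(sentence, events, doses))
    return records
```

Run on a note such as "Two hours after a 10 mg dose, the patient reported severe headache and nausea. Vital signs were normal.", it yields a single record with the events Headache and Nausea and the dose 10 mg. A lexicon approach like this misses misspellings and paraphrases, and it wrongly flags negated mentions such as "no nausea", which is exactly where machine-learned NLP models earn their keep.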

When clinical trials are run, the sponsoring organisation must make public a clinical trial protocol describing the experimental design and entire procedure of the trial. These documents are typically 200 pages long, distributed in PDF format, and written in technical but unstructured English.

It is possible to develop a natural language processing model to extract relevant data from a trial protocol, such as the number and age of participants in a trial, the experimental design, type of treatment, or potential toxicities. At Fast Data Science we have developed a model for the German pharma company Boehringer Ingelheim which analyses a clinical trial protocol and predicts various measures of trial complexity which can be fed into a cost model. This allows the company to analyse and understand what will be involved in running a trial without spending huge amounts of time reading through protocols.
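As a rough illustration of what extracting data from a trial protocol involves, the sketch below pulls two fields, the number of participants and the age range, out of protocol text using regular expressions. The patterns, the `ProtocolFields` class and the `extract_protocol_fields` function are assumptions made for this example only; they are not the model built for Boehringer Ingelheim. Protocols phrase these details in far more ways than two patterns can cover, which is why a trained model is normally needed. The sketch also assumes the PDF has already been converted to plain text.

```python
import re
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical patterns for two fields; real protocols need many more variants.
# Numbers may be written with thousands separators ("1,200") or without ("48").
PARTICIPANTS_PATTERN = re.compile(
    r"\b(\d{1,3}(?:,\d{3})+|\d+)\s+(?:participants|patients|subjects|volunteers)\b",
    re.IGNORECASE,
)
# Matches "aged 18 to 65", "aged 18-45", "age 18 - 65".
AGE_PATTERN = re.compile(r"\baged?\s+(\d{1,3})\s*(?:-|to)\s*(\d{1,3})\b", re.IGNORECASE)


@dataclass
class ProtocolFields:
    participants: Optional[int] = None
    age_range: Optional[Tuple[int, int]] = None


def extract_protocol_fields(text: str) -> ProtocolFields:
    """Extract the first participant count and age range found; leave missing fields as None."""
    fields = ProtocolFields()
    match = PARTICIPANTS_PATTERN.search(text)
    if match:
        fields.participants = int(match.group(1).replace(",", ""))
    match = AGE_PATTERN.search(text)
    if match:
        fields.age_range = (int(match.group(1)), int(match.group(2)))
    return fields
```

For the sentence "Approximately 1,200 patients aged 18 to 65 years will be randomised." it returns 1200 participants and the age range (18, 65). Fields that cannot be found stay as `None`, so a downstream cost model can treat them as missing rather than guessing.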

According to a recent report by The Economist, investment banks and other financial institutions have been adopting AI and machine learning mainly for analytics, using structured data to answer business questions such as predicting customer churn. However, take-up of natural language processing is not far behind.

The online chatbot on HSBC’s website is able to answer common queries, deflecting requests from its call centres. Image source: HSBC

Most large banks with an online presence now have a virtual assistant or chatbot on their homepage, saving money on call centres. These can deal with basic tasks such as balance enquiries, account details, and loan queries. They are often deployed on the banks’ phone systems, enabling efficient triage of queries to the right department if a human does need to get involved.
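The triage step can be sketched very simply. The Python example below routes a customer’s message to a department by counting keyword matches and falls back to a human agent when nothing matches. The department names and keyword lists in `ROUTES`, and the `route_query` function itself, are hypothetical; a bank’s real assistant would almost certainly use a trained intent classifier rather than fixed keywords. The point is only to show the shape of the routing decision.

```python
import re
from typing import Dict, Set

# Hypothetical departments and trigger words; a real assistant would use a trained intent model.
ROUTES: Dict[str, Set[str]] = {
    "balances": {"balance", "statement", "transactions"},
    "cards": {"card", "lost", "stolen", "pin"},
    "loans": {"loan", "mortgage", "repayment", "interest"},
}


def route_query(query: str, fallback: str = "human_agent") -> str:
    """Send the query to the department with the most keyword hits, else to a human."""
    tokens = re.findall(r"[a-z]+", query.lower())
    scores = {dept: sum(token in words for token in tokens) for dept, words in ROUTES.items()}
    # On a tie, max() keeps the first department in ROUTES order.
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else fallback
```

With these lists, "I have lost my card" is routed to `cards`, while a message with no recognised keywords, such as "Can I change my address?", is handed to `human_agent`.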

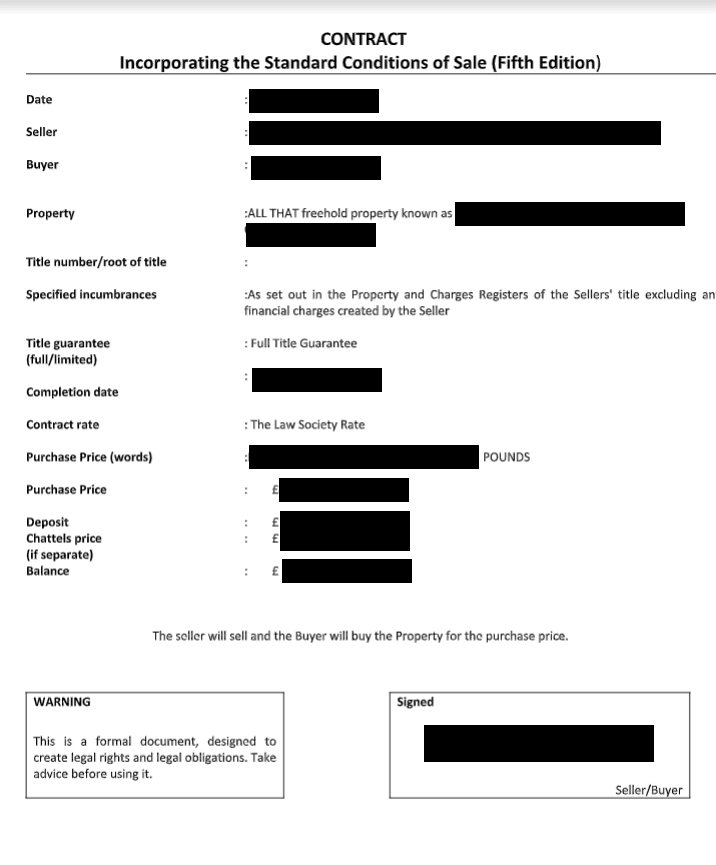

An example of an anonymised contract for a house sale. NLP can extract names of the parties to a contract, extract key data such as costs or contract terms, and can even anonymise a document for compliance purposes.

Many financial institutions deal with large numbers of legal documents, such as contracts, NDAs and trust deeds, on a daily basis.

Natural language processing solutions are being used to extract key information from unstructured documents, and classify the document according to business requirements. This is difficult to do with traditional non-AI programming techniques, since no two legal documents are the same, formatting can vary immensely, and documents are often received in paper format and scanned due to the need for a physical signature in many jurisdictions.

NLP is also being used to anonymise legal documents. For many business or regulatory purposes, it is necessary to redact or sanitise names, dates, locations, or prices from legal documents. The need to anonymise data for compliance has mushroomed with increased regulation, and a number of products have appeared on the market, particularly since the advent of GDPR.
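A minimal sketch of rule-based redaction in Python is shown below. It replaces dates and prices using regular expressions and replaces a supplied list of party names. It assumes the names have already been found by some other step, such as a named-entity recogniser, because reliably detecting names in arbitrary text is beyond what simple patterns can do. The `anonymise` function and its patterns are illustrative assumptions, not a compliance-grade tool.

```python
import re
from typing import Iterable

MONTHS = "January|February|March|April|May|June|July|August|September|October|November|December"

# Illustrative patterns only; real contracts use many more date and currency formats.
PATTERNS = {
    # "12 March 2021" or "12/03/2021"
    "DATE": re.compile(rf"\b\d{{1,2}} (?:{MONTHS}) \d{{4}}\b|\b\d{{1,2}}/\d{{1,2}}/\d{{2,4}}\b"),
    # "£250,000", "$1,500.50", "€ 300"
    "PRICE": re.compile(r"[£$€]\s?\d+(?:,\d{3})*(?:\.\d{2})?"),
}


def anonymise(text: str, party_names: Iterable[str] = ()) -> str:
    """Replace known party names, dates and prices with placeholder tags."""
    # Names are assumed to come from an earlier step (e.g. a named-entity recogniser).
    # Longest names first, so "John Smith Ltd" is replaced before "John Smith".
    for name in sorted(party_names, key=len, reverse=True):
        text = text.replace(name, "[NAME]")
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text
```

For example, `anonymise("On 12 March 2021 John Smith agreed to sell the property to Jane Doe for £250,000.", ["John Smith", "Jane Doe"])` returns "On [DATE] [NAME] agreed to sell the property to [NAME] for [PRICE]."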

Many investment firms are beginning to use NLP to analyse company annual reports and news articles. A key event, such as the board of directors sending a CEO on gardening leave, can be market-moving information, and it is expensive to pay people to read through every document relevant to a company.

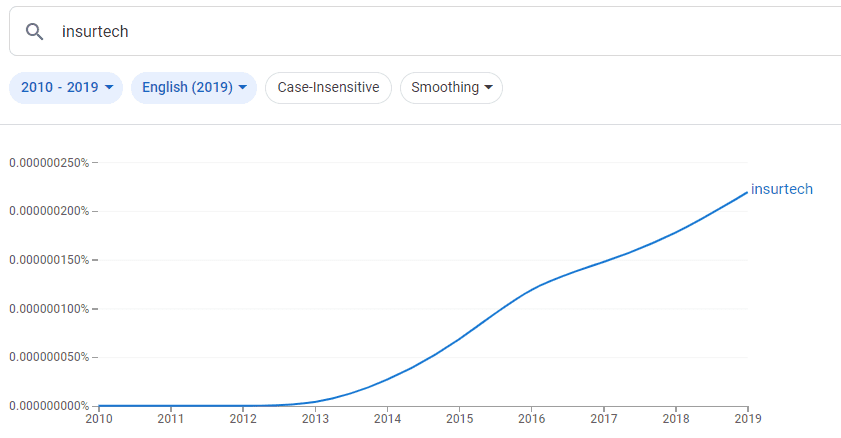

There has been a recent wave of technological innovation aimed at improving efficiency in the insurance industry, dubbed InsurTech by analogy with the term FinTech. This is partly due to the uptake of natural language processing, which can deliver large efficiency gains in the industry.

Google Books Ngram Viewer showing the coinage of the term InsurTech, which covers much of the use of AI and NLP in insurance

Insurance companies have to deal with large numbers of unstructured documents when processing insurance claims. For example, if a customer submits a claim to their travel insurance because they fell ill while on holiday, the insurance provider may have to wade through ten documents, all uploaded in scanned form, before deciding if the claim meets the policy’s criteria.

Insurers and underwriters are beginning to look to natural language processing as an obvious way to streamline this process. Given a dataset of the past three years of claims and the decisions taken on them, a simple supervised learning algorithm can process claim documents and estimate the probability of a claim being granted. Such models can even run in real time on the company’s web interface, telling the customer when they need to upload more supporting documents and removing the need to involve a customer service representative.
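One of the simplest supervised learning algorithms that outputs a probability is multinomial naive Bayes, used here purely as a stand-in for whatever model an insurer might actually choose. The Python sketch below trains on a handful of invented claim texts labelled "granted" or "denied" and returns a probability for each outcome on a new claim. The training examples (`TRAIN_DOCS`, `TRAIN_LABELS`) and the `NaiveBayesClaimModel` class are hypothetical; in practice the scanned documents would first go through OCR, and a real model would be trained on years of historical claims rather than four sentences.

```python
import math
import re
from collections import Counter
from typing import Dict, List

# Hypothetical, invented training data: past claim texts and the decision taken.
TRAIN_DOCS = [
    "hospital admission certificate doctor note receipt attached",
    "medical certificate and pharmacy receipt enclosed",
    "no receipt available lost documents",
    "illness before travel policy start date",
]
TRAIN_LABELS = ["granted", "granted", "denied", "denied"]


def tokenize(text: str) -> List[str]:
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesClaimModel:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs: List[str], labels: List[str]) -> "NaiveBayesClaimModel":
        self.classes = sorted(set(labels))
        self.log_priors = {c: math.log(labels.count(c) / len(labels)) for c in self.classes}
        self.counts = {c: Counter() for c in self.classes}
        for doc, label in zip(docs, labels):
            self.counts[label].update(tokenize(doc))
        self.vocab = set().union(*self.counts.values())
        self.totals = {c: sum(self.counts[c].values()) for c in self.classes}
        return self

    def predict_proba(self, doc: str) -> Dict[str, float]:
        log_scores = {}
        for c in self.classes:
            score = self.log_priors[c]
            for word in tokenize(doc):
                if word in self.vocab:  # words never seen in training are ignored
                    score += math.log(
                        (self.counts[c][word] + 1) / (self.totals[c] + len(self.vocab))
                    )
            log_scores[c] = score
        # Normalise log-scores into probabilities, subtracting the max for numerical stability.
        top = max(log_scores.values())
        weights = {c: math.exp(s - top) for c, s in log_scores.items()}
        total = sum(weights.values())
        return {c: w / total for c, w in weights.items()}
```

Calling `NaiveBayesClaimModel().fit(TRAIN_DOCS, TRAIN_LABELS).predict_proba(text)` returns a dictionary of probabilities for "denied" and "granted" that sums to 1. A low probability of approval is the kind of signal a web form could use to prompt the customer for more supporting documents. Naive Bayes is chosen here only because it is short enough to write out in full; logistic regression or a fine-tuned transformer would be common alternatives.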

Natural language processing has already transformed a number of industries. However, certain areas such as healthcare and finance have been held back in the past by regulatory or ethical considerations, as well as the sheer practical difficulty of solving their big data problems with AI, despite possessing goldmines of unstructured text data. We have already seen machine learning transform the use of structured data in these industries, while natural language processing has followed closely behind.

As regulation, technology, and business practices catch up, the 2020s will see NLP unlock the untapped potential of these industries. We can look forward to huge and long-awaited improvements in healthcare, pharma, the law, insurance, and finance.

