You are probably familiar with traditional databases. For example, a teacher at a school will need to enter students’ grades into a system where they get stored, and at the end of the year the grades would need to be retrieved to create the report card for each student. Or an employee database might store employees’ home addresses, pay grades, start dates, and other crucial information. Traditionally, organisations use a structure called a relational database, where different types of data are stored in different tables, with links between them, and they can be queried using a special language called SQL.

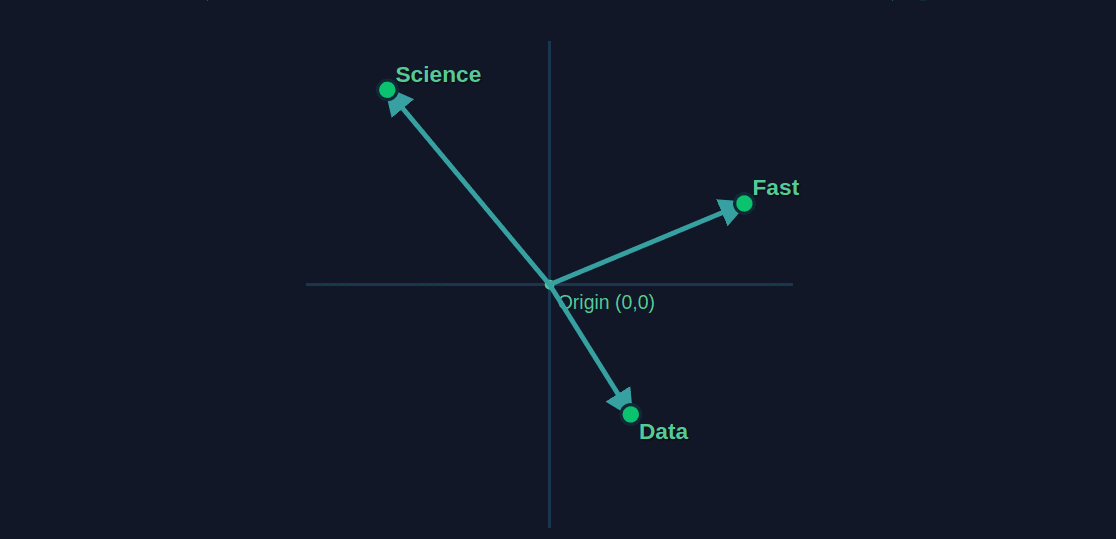

A vector index is a specialised database that stores and organises text or image data as vectors (also called embeddings or vector embeddings), which are numerical representations of information. Think of a vector as a list of numbers that capture the semantic meaning and context of data like text, images, or audio. For example, a word like “apple” might be represented by a vector where the numbers reflect its association with fruit, technology, and health. If I convert “apple” into a vector and “pear” into another vector, then the similarity between the two can be calculated using the cosine similarity formula and would ideally come to a number close to 1, whereas comparing the vectors of “apple” and “machinery” would give a much lower similarity, perhaps around 0.4.
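To make the cosine similarity calculation concrete, here is a minimal sketch in plain Python. The three-dimensional vectors are made up purely for illustration; real embeddings come from a model and typically have hundreds of dimensions. The function divides the dot product of the two vectors by the product of their lengths.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up toy vectors (assumption): not the output of any real model.
apple = [0.9, 0.3, 0.2]
pear = [0.8, 0.2, 0.3]
machinery = [0.1, 0.9, 0.1]

apple_pear = cosine_similarity(apple, pear)            # close to 1
apple_machinery = cosine_similarity(apple, machinery)  # much lower

print(round(apple_pear, 2), round(apple_machinery, 2))
```

With these toy values, the two fruit vectors point in nearly the same direction, so their similarity is close to 1, while “apple” and “machinery” score far lower.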

What’s really cool is that large language models let us calculate a vector representation not only of a single word, but also of an entire sentence. There are also recipes for turning images, audio and video, or really anything, such as a customer’s buying patterns, into a vector representation.

You can try the demo below to see how text can be converted into a vector in multi-dimensional space and get a feel for how cosine similarity works. The demo projects the vectors of the two words onto two-dimensional space so you can see the angle between them. It may take about 20 seconds, or possibly up to a minute, for the neural network to load, as it runs in your browser.


If you have a list of patients enrolled in a primary healthcare setting such as a GP surgery in the UK or family doctor elsewhere, there will usually be a list of electronic health records. There may also be a traditional SQL database.

There are certain circumstances in healthcare where you may need to retrieve all patients with a particular set of symptoms. For example, in the UK and most states in the US, a cancer registry must be notified of all tumours in the brain or central nervous system, and there are registries and reporting systems for HIV and AIDS and other diseases.

If you have a database of patient notes and you want to retrieve all patients’ details with a particular diagnosis, you can run an SQL query like the following:

    SELECT
        patient_id,
        first_name,
        last_name,
        date_of_birth,
        diagnosis
    FROM
        patients
    WHERE
        diagnosis LIKE '%cancer%';

However, substring matching fails in both directions. A diagnosis containing the word “leukemia” would be missed, because it does not contain the substring “cancer”, whereas a diagnosis text containing “cancer can be excluded” would be returned even though the patient does not have cancer.
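The behaviour of the substring query can be checked with a small in-memory SQLite table. This is only a sketch: the table is cut down to two columns and the three diagnosis strings are invented for illustration.

```python
import sqlite3

# In-memory database with a simplified, hypothetical patients table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE patients (patient_id INTEGER, diagnosis TEXT)")
conn.executemany(
    "INSERT INTO patients VALUES (?, ?)",
    [
        (1, "breast cancer, stage II"),              # should match, and does
        (2, "acute myeloid leukemia"),               # should match, but is missed
        (3, "benign cyst; cancer can be excluded"),  # should not match, but does
    ],
)

rows = conn.execute(
    "SELECT patient_id FROM patients "
    "WHERE diagnosis LIKE '%cancer%' ORDER BY patient_id"
).fetchall()
matched_ids = [row[0] for row in rows]

print(matched_ids)  # [1, 3]
```

Patient 2 is a false negative and patient 3 is a false positive, which is the gap a semantic search is meant to close.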

It’s often useful to supplement the SQL database with a vector database, where the unstructured text fields are all indexed in vector form.

In practice I have worked with the following vector index technologies: Weaviate, Elasticsearch, and Pinecone. Each has its own syntax, and to my knowledge there is no equivalent to SQL that works as a query language across platforms. Whichever platform you use, querying a vector index involves converting the search term “cancer” to a vector and checking which vector entries in the index are closest to it in vector space.
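Stripped of any particular platform, the querying process can be sketched as a brute-force search in plain Python. Everything here is an assumption made for illustration: the vectors are invented, and `query_vector` stands in for whatever an embedding model would return for the search term.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Hypothetical index: diagnosis text mapped to a made-up embedding.
index = {
    "breast cancer, stage II": [0.9, 0.1, 0.1],
    "acute myeloid leukemia": [0.8, 0.3, 0.1],
    "fractured wrist": [0.1, 0.1, 0.9],
}

# Stand-in for the embedding of the search term "cancer" (assumption).
query_vector = [0.85, 0.2, 0.1]


def search(query_vector, index, limit=2):
    """Score every entry against the query and return the closest texts."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [text for _, text in scored[:limit]]


results = search(query_vector, index)
print(results)
```

With these toy values, both cancer-related diagnoses rank above the unrelated one, even though only one of them contains the word “cancer”. A real vector index avoids scoring every entry in this way.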

In Weaviate it looks a little like this, using the with_near_text function which tells Weaviate to perform a semantic search using vector similarity instead of just a plain text search like in our SQL query above:

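    # query_text holds the search term, e.g. "cancer".
    # The parentheses let the chained call span several lines.
    response = (
        weaviate_client.query
        .get("Patients", ["patient_id", "diagnosis", "notes"])
        .with_near_text({"concepts": [query_text]})
        .with_limit(5)
        .do()
    )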

The key benefit of a vector index is its ability to perform highly efficient similarity searches. Instead of just looking for exact keyword matches, it finds items with similar vector representations. This allows for more intuitive and context-aware searches.

Since calculating the similarity between a query vector and multiple vectors in the index could take a long time, the vector index is designed in such a way that the query doesn’t need to be compared to every single vector in the index.

The simplest approach to this is called the inverted file, or IVF. The stored vectors are assigned to clusters, or bins, using a technique such as k-means clustering, so when a query comes in, we identify which cluster it would belong to and search within that cluster. In practice we use more sophisticated algorithms such as the Hierarchical Navigable Small World (HNSW) algorithm.
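The inverted file idea can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions: the two centroids are hard-coded rather than learned with k-means, the vectors are two-dimensional and invented, and only a single cluster is searched per query.

```python
import math

# Assumed to be the output of a clustering step such as k-means.
centroids = [[0.0, 0.0], [10.0, 10.0]]

# Made-up stored vectors, keyed by a hypothetical item name.
vectors = {
    "a": [0.5, 1.0],
    "b": [1.5, 0.2],
    "c": [9.0, 10.5],
    "d": [11.0, 9.5],
}


def nearest_centroid(vector):
    """Return the index of the centroid closest to the vector."""
    return min(range(len(centroids)), key=lambda i: math.dist(vector, centroids[i]))


# Build the inverted file: cluster index -> names of the vectors in that cluster.
inverted_file = {i: [] for i in range(len(centroids))}
for name, vec in vectors.items():
    inverted_file[nearest_centroid(vec)].append(name)


def ivf_search(query, limit=1):
    """Search only the cluster that the query falls into."""
    bucket = inverted_file[nearest_centroid(query)]
    ranked = sorted(bucket, key=lambda name: math.dist(query, vectors[name]))
    return ranked[:limit]


result = ivf_search([9.5, 9.9])
print(inverted_file, result)
```

Only the vectors in the query's own cluster are compared, which is where the speed-up comes from. It is also why the search is approximate: a close neighbour sitting just across a cluster boundary would be missed.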

Above: a visualisation in 3D of a large number of vectors which represent documents and which could be stored in a vector index.

We are currently developing a vector index of all longitudinal studies in the UK according to the variables measured. A longitudinal study is a long project that runs for many years, where researchers track a cohort of individuals over time. For example, the Millennium Cohort Study followed a number of children born around the turn of the millennium who would now be in their twenties. Each year they are contacted and asked a number of questions, allowing researchers to track trends such as anxiety levels and even quantify how these were influenced by external factors such as the pandemic.

There are about 69 longitudinal studies that are running in the UK. Each one may have measured thousands of variables. A variable corresponds to a question like “I often feel anxious”, or could be a biomarker such as the presence or absence of a particular gene variant.

We constructed a multi-level vector index which is also relational, in order to represent the complexity of these studies. Each study and its description is an entry in our index, and each study has multiple datasets inside, which each have multiple variables. We used Weaviate for this project (although we experimented with other vector index providers), and set up a query mechanism where the user can search for a text such as “I like playing video games” and will see responses coming back which are semantically similar in vector space. They may have no words in common, or even be in a different language, but if the semantic content is similar, then the cosine similarity between the query vector and the vector in the index will be high (close to 1), and that result will be returned close to the top of the result set.

In the Harmony project, we had a number of additional challenges, because the variables themselves are not very much use to researchers. If a user searches for “I feel anxious”, they don’t want to just find the variable where that question was asked - they also want to find the study and dataset where that data resides. For that reason, we implemented a multi-level index where queries return the parent and grandparent (all direct ancestors) of each item that is found, so that the user sees more useful and informative results. To my knowledge, this is a unique solution, as I have not been able to find other implementations of relational vector indices where items can have parents and grandparents and all related items have to be retrieved when a search is conducted.
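The idea of returning each hit together with its ancestors can be sketched as follows. This is not the Harmony implementation, only a minimal illustration: the records, identifiers, and the `parent` field are all hypothetical, and the vector search step is replaced by a hard-coded list of hits.

```python
# Hypothetical records: each item stores the id of its parent, if any.
items = {
    "study-1": {"type": "study", "title": "Example Cohort Study", "parent": None},
    "dataset-1": {"type": "dataset", "title": "Wave 3 survey", "parent": "study-1"},
    "variable-1": {"type": "variable", "title": "I often feel anxious", "parent": "dataset-1"},
}


def ancestors(item_id):
    """Walk up the parent links and return all direct ancestors, nearest first."""
    chain = []
    parent_id = items[item_id]["parent"]
    while parent_id is not None:
        chain.append(parent_id)
        parent_id = items[parent_id]["parent"]
    return chain


def enrich(hit_ids):
    """Attach the ancestor chain to every search hit."""
    return [{"hit": hit_id, "ancestors": ancestors(hit_id)} for hit_id in hit_ids]


# Stand-in for the ids returned by a vector search (assumption).
enriched = enrich(["variable-1"])
print(enriched)
```

A hit on the variable comes back with its dataset and its study, so the user can see where the data resides.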

Vector indices are efficient because they use algorithms designed to perform similarity searches on high-dimensional data, a task that would be computationally expensive for traditional databases. Rather than calculating the similarity between your search vector and every vector in the database, a vector index organises the vectors in a way that allows for rapid approximate nearest-neighbour (ANN) searches.

In short, vector indices achieve efficiency through two main methods:

Approximate Nearest Neighbour (ANN) Algorithms: Unlike a standard database that has to compare a query to every single item (a “linear scan”), a vector index uses algorithms like Hierarchical Navigable Small World (HNSW) or Inverted File Index (IVF). These algorithms create a structured map or graph of the vectors. When you submit a query, the algorithm navigates this structure to quickly find a small subset of the most likely matches. It’s like finding a person in a city using a street map instead of knocking on every single door. This approach trades a tiny bit of precision for a massive increase in speed.

Specialised Data Structures: Vector indices use data structures optimised for numerical operations. For example, they might compress the vectors to reduce their size, allowing more data to fit into memory for faster access. They are also designed to work well with modern hardware, leveraging parallel processing capabilities (like GPUs) to speed up calculations.
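As an illustration of the compression point, one common approach is scalar quantisation, where each floating-point component is stored as a single byte. The sketch below is an assumption about how such compression could work, not a description of any particular product, and it assumes that every component lies between -1 and 1.

```python
from array import array


def quantize(vector):
    """Map floats in [-1, 1] to signed 8-bit integers (1 byte per component)."""
    return array("b", (round(x * 127) for x in vector))


def dequantize(codes):
    """Recover approximate float values from the 8-bit codes."""
    return [c / 127 for c in codes]


# Made-up vector for illustration.
original = [0.12, -0.53, 0.98, -1.0]
codes = quantize(original)
restored = dequantize(codes)

full_size = array("d", original).itemsize * len(original)  # bytes as 64-bit floats
small_size = codes.itemsize * len(codes)                   # bytes after quantisation
print(full_size, small_size, restored)
```

The restored values differ slightly from the originals, which is the same trade of a little precision for a large saving that the ANN algorithms make.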

Vector indices are efficient because they are built from the ground up for one specific purpose: finding approximate matches in vast collections of vectors as quickly as possible. This is fundamentally different from a traditional database, which is optimised for exact matches and structured queries.

Vector indices are quite heavy duty. They often need a big server to run on, so they may be overkill for your solution. For example, if you only ever have to compare the vectors of around 100 documents, it makes much more sense to calculate those vectors once and store them in a regular file.

Likewise, if your application needs a lot of relationships between different types of data, such as a user database or employee database (which would have salary tables, address tables, department tables, all inter-connected), then a vector database probably isn’t the right tool.

However, where your data contains a lot of unstructured text or images, and you need to compare new incoming texts or images to the indexed items, a vector index is the ideal tool for the job. A fashion company can index images of all the products they sell, and a vector index would allow users to upload photos and retrieve the most similar image in the database. This is probably something like how Google’s Search by Image / Google Lens works, although that information is not public. Likewise, if you have a database of job applicants and you want to find someone with a particular set of skills then you could set up a semantic search where you don’t need to use the exact wording that they used in their CV.

Vector indices are not a one-size-fits-all solution for every data problem. While they are a game-changer for unstructured data, such as text, images, and audio, their complexity and resource requirements can be overkill for smaller-scale projects or data that is already highly structured.

For applications that rely on exact matches, complex relationships, and structured data, a traditional relational database or a graph database remains the best choice.

However, when the core function of your application involves semantic search, similarity matching, or content-based retrieval, like a fashion retailer searching for similar images, a job recruiter matching skills from a CV, or a discovery platform indexing research studies, a vector index provides an unparalleled advantage. Vector indices are the right tool when your goal is to understand the “meaning” of your data, not just its keywords.

