Explainable AI, or XAI, refers to a collection of techniques for analysing machine learning models and understanding how they reach their decisions. It is the opposite of the so-called ‘black box’, a machine learning model whose decisions can’t be understood or explained.

When a new technology emerges onto the business landscape, it is often met by a degree of distrust, confusion, and fear. It is a fundamental part of human nature to be wary of things that we don’t understand. In the past, people have been sceptical of everything from fax machines and touch-tone phones to email and flash drives. That is no less true for AI in business.

The sudden increase in the use of artificial intelligence and machine learning in the past 5-10 years is no different. The AI revolution has given many people a feeling of trepidation about what it is, what it does, how it works, and how likely it is to replace them. Perhaps explainable AI can alleviate these fears.

The fear of AI-driven job replacement might be the most worrisome concept for some people. They point to historical precedents where technological breakthroughs were good for an industry but a death knell for a subset of its employees, such as robots building cars in the automotive industry. Even the moniker ‘AI’ can stir a fear of being made obsolete, particularly in anyone who’s ever read Isaac Asimov’s I, Robot or watched any classic science fiction such as the Terminator movies, 2001: A Space Odyssey or the TV series Battlestar Galactica.

To properly use any sort of tool or technology, we must understand it to the point of trusting it to do its job to help us do ours. Mega-corporations like Amazon, Google, and Facebook have been using AI for years, but even most of their employees are not completely familiar with how their processes work.

This brings us to the necessity for explainable AI, often abbreviated to XAI. This is AI that the average person can understand and engage with, so that its applications can be used correctly and efficiently. Like teachers with a room full of new students, we don’t just want to know the answer; we want to know how it was reached. Showing your work is essential.

Fast Data Science - London

We need an explanation of how AI reaches its conclusions and recommendations. Not only does this enhance our trust in the technology, but it also lets us improve on it, because we can analyse the factors that lead an AI system to make a specific decision or prediction, how it defines confidence in its predictions, what sort of errors can cause it to make wrong decisions, and so on.
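One simple way to see what such an explanation can look like is a linear model, where each input's contribution to the score is just its weight multiplied by its value. The sketch below is only an illustration under stated assumptions: the loan-approval setting, the feature names, the weights and the applicant's values are all hypothetical, and real systems usually involve more complex models and dedicated explanation tools.

```python
import math

# Hypothetical weights for a toy loan-approval model (illustrative only).
WEIGHTS = {"income": 0.8, "existing_debt": -1.2, "years_employed": 0.5}
BIAS = -0.3


def predict_with_explanation(features):
    """Return (probability, contributions) for one input.

    In a linear model each feature's contribution to the score is simply
    weight * value, so the decision can be broken down feature by feature.
    """
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    score = BIAS + sum(contributions.values())
    # The logistic function turns the score into the model's confidence in "approve".
    probability = 1.0 / (1.0 + math.exp(-score))
    return probability, contributions


# Hypothetical, already-normalised applicant values.
applicant = {"income": 1.5, "existing_debt": 2.0, "years_employed": 1.0}
probability, contributions = predict_with_explanation(applicant)

print(f"Probability of approval: {probability:.2f}")
# List the factors from most to least influential, whatever their sign.
for name, value in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>15}: {value:+.2f}")
```

Here the output shows both the prediction's confidence and which factor pushed the decision hardest, which is the kind of information a person needs in order to question or correct the result.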

XAI aims to help us understand how a machine learning system works, and specifically the steps and models involved in making decisions or recommendations. Machine learning at its heart is the ability of powerful computer processors to take in large amounts of data and analyse it to detect patterns. This is particularly useful because the speed at which a computer can analyse data greatly outstrips that of a person or even an entire team of data engineers. Machine learning can surface patterns that might take humans weeks, months, years, or a lifetime to pick up on. But since this process goes on inside a computer, at speeds that are difficult for human minds to follow, it can be intimidating, to say the least.

The two main goals of XAI are transparency and traceability. In essence, it is the process of holding the system accountable for how it is working and what its results are.
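Traceability can be as simple as keeping a record of every automated decision. The following is a minimal sketch of that idea, not a prescribed format: the function name `make_audit_record`, the field names and the example values are all hypothetical.

```python
import json
from datetime import datetime, timezone


def make_audit_record(model_version, inputs, output, explanation):
    """Bundle everything needed to trace one automated decision later."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "inputs": inputs,
        "output": output,
        "explanation": explanation,
    }


record = make_audit_record(
    model_version="demo-0.1",  # hypothetical version label
    inputs={"income": 1.5, "existing_debt": 2.0},  # hypothetical input values
    output="declined",
    explanation="existing_debt was the largest negative factor",
)
# Serialise the record so it could be appended to a log file or a database.
print(json.dumps(record, indent=2))
```

Storing the model version alongside the inputs and output means a decision can later be traced back to the exact system that produced it.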

For a real-world analogy, using AI without XAI would be the equivalent of trusting your local government to make all its decisions behind closed doors, with no outside input and no way for you to attend council meetings. That is why, when local governments meet, the public is invited to attend and minutes of the meeting are made available afterwards. Ditto the government’s budget - what its revenues are and its expenditures month by month. Considering the number of areas where AI is now placed in a position of importance - military, financial, public safety - introducing XAI only makes sense as a way to help prevent catastrophic errors.

Not everything will need the same level of transparency. For instance, Amazon has used its product recommendation algorithm for many years, with considerable improvements along the way. However, there are few security threats or potential critical errors involved in the process that recommends you a cosy sweater if you have just bought Harry Styles’ latest album. While the average Amazon worker could likely benefit from knowing how the algorithm ties one item to the other, it is not essential for most job titles.

Without explainable AI, many machine learning models appear as a ‘black box’

But for companies that turn AI analysis into actionable decisions for their future, it’s natural to want to know what’s going on behind the curtain. AI is often described as a ‘black box’ technology, by which we mean that information goes in and information comes out without any real explanation of what happened to it while it was ‘inside’. Now that AI is being used in more and more industries by more and more people, it can no longer be an opaque mechanism; it has to be a tool whose decision process humans can understand and correct. AI is no longer used solely for decisions like which stocks to recommend and how much of a product to order for next season. It is being used to help make diagnoses and therapy recommendations for medical patients, by barristers to research cases, and in other arenas where there are ethical or legal considerations that must be explored and met.
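Even when the inside of a model is hidden, it can still be probed from the outside by changing one input at a time and watching how the output moves. The sketch below illustrates this one-at-a-time sensitivity check under loud assumptions: `black_box` is a made-up scoring function standing in for an opaque model (it is not a clinical model), and the input names and values are hypothetical.

```python
def black_box(features):
    """Stand-in for an opaque model: we pretend we cannot read this code."""
    age, blood_pressure, cholesterol = features
    return 0.02 * age + 0.5 * (blood_pressure > 140) + 0.001 * cholesterol


def sensitivity(model, features, deltas):
    """Measure how much the output moves when each input is nudged on its own."""
    baseline = model(features)
    effects = []
    for i, delta in enumerate(deltas):
        nudged = list(features)
        nudged[i] += delta  # change only this one input
        effects.append(model(nudged) - baseline)
    return effects


# Hypothetical input: age, blood pressure, cholesterol.
patient = [50, 135, 200]
effects = sensitivity(black_box, patient, deltas=[10, 10, 10])
for name, effect in zip(["age", "blood_pressure", "cholesterol"], effects):
    print(f"{name:>15}: {effect:+.3f}")
```

A check like this only describes the model's behaviour near one particular input, so it is a starting point for explanation rather than a full account of how the model works.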

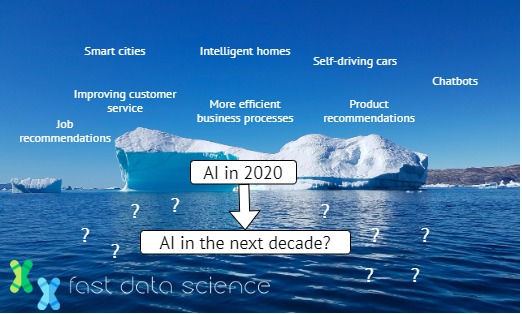

Today’s AI is only the tip of the iceberg when it comes to what machine learning could do in the next decade.

AI is not going away. Today’s commercial applications of machine learning are only the tip of the iceberg when it comes to how AI, decades in the making, will continue to take a larger role in just about every industry imaginable.

Already, academic, technological, and other fields are doing deeper dives into the uses of other AI subsets such as artificial neural networks, deep learning, robotics, and natural language processing that will require oversight and XAI factors of their own in order to keep humans firmly in charge of the transparency and traceability of these powerful tools.
