In 2016, the British computer scientist and Turing Award winner Geoffrey Hinton stated:

> We should stop training radiologists now. It’s just completely obvious that within five years, deep learning is going to do better than radiologists.

Radiology is one of the medical specialties that has been most affected by the hype surrounding artificial intelligence (AI).

Ever since Geoffrey Hinton suggested that AI algorithms would replace radiologists, there has been a wave of excitement, fear, and confusion among the radiology community and the general public. However, as more evidence emerges on the actual performance and limitations of AI in radiology, it is time to reassess what we can realistically expect from this technology and what it means for the future of radiology.

The hype around AI in radiology was largely driven by the impressive results of deep neural networks in image recognition tasks. These models learn directly from large amounts of data rather than relying on hand-crafted rules or features, and they have shown a remarkable ability to identify and classify objects in natural images, such as faces, animals, and scenes. Some of them have even surpassed human performance on certain benchmarks, such as the ImageNet challenge.

Naturally, this sparked interest and curiosity among researchers and clinicians in radiology, a field that relies heavily on image interpretation. Could these models also outperform radiologists in detecting and diagnosing diseases from medical images, such as X-rays, [CT scans](https://www.thelancet.com/journals/lancet/article/PIIS0140-6736(18), MRI scans, and ultrasound images? Could they automate the tedious and time-consuming tasks of image analysis and reporting, freeing up radiologists to focus on more complex and rewarding aspects of their work? Could they improve the quality and efficiency of radiology services, reducing errors and costs?

These questions motivated a surge of research and development in radiology AI, with hundreds of papers published every year, dozens of start-ups founded, and millions of dollars invested. The media also contributed to the hype, with sensational headlines proclaiming that AI would soon replace radiologists or that it could diagnose diseases better than humans. Some prominent figures in AI also made bold predictions about the imminent disruption of radiology by AI, the best known being Geoffrey Hinton’s now-infamous remark quoted above.

The reality of AI in radiology has proven to be much more nuanced and complex than the hype suggested. While AI has undoubtedly made significant progress in radiology and shows real potential, many challenges and limitations must be addressed before it can be widely adopted and trusted in clinical practice.

One of the main challenges is the quality and availability of data. Deep learning models require large amounts of annotated data for training and validation. However, such data are hard to obtain in radiology because of ethical, legal, technical, and practical issues. Medical images are sensitive personal data that must be protected and anonymized. They also need to be accurately labeled by experts, which is costly and time-consuming. Moreover, medical images are heterogeneous, varying with the modality, acquisition protocol, device, patient population, disease type, and stage. As a result, a model trained on one dataset may not generalize to data from another hospital, device, or population, leading to poor performance or errors.

Another challenge is the evaluation and validation of AI models. Unlike some other computer vision tasks, where an image can simply be assigned to one of a few discrete classes (e.g., cat or dog), medical images often contain multiple findings or abnormalities that need to be detected, localized, measured, characterized, and reported. Moreover, interpretations of the same image often vary between radiologists (inter-observer variability) and even for the same radiologist at different times (intra-observer variability). This makes it difficult to define a clear ground truth or gold standard against which to evaluate AI models. Furthermore, most studies of AI in radiology have been conducted under controlled conditions (e.g., retrospective analysis of selected cases), which may not reflect the real-world performance or utility of AI models in clinical practice (e.g., prospective use on diverse, unselected cases).
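To make the point about inter-observer variability concrete, one standard way to quantify agreement between two readers is Cohen’s kappa, which discounts the agreement you would expect by chance alone. The following is a minimal Python sketch; the ten reads and the labels "nodule" and "normal" are invented purely for illustration and do not come from any real study.

```python
from collections import Counter


def cohens_kappa(reader_a, reader_b):
    """Cohen's kappa: agreement between two readers, corrected for chance."""
    if len(reader_a) != len(reader_b) or not reader_a:
        raise ValueError("both readers must label the same non-empty set of cases")
    n = len(reader_a)
    # Observed agreement: fraction of cases given the same label by both readers
    p_observed = sum(a == b for a, b in zip(reader_a, reader_b)) / n
    # Chance agreement: expected overlap given each reader's label frequencies
    # (Counter returns 0 for labels one reader never used)
    counts_a, counts_b = Counter(reader_a), Counter(reader_b)
    p_chance = sum(counts_a[label] * counts_b[label] for label in counts_a) / n**2
    if p_chance == 1:
        return 1.0  # both readers used one identical label for every case
    return (p_observed - p_chance) / (1 - p_chance)


# Hypothetical reads of the same ten chest X-rays by two radiologists
reader_a = ["nodule", "nodule", "normal", "normal", "nodule",
            "normal", "normal", "nodule", "normal", "normal"]
reader_b = ["nodule", "normal", "normal", "normal", "nodule",
            "normal", "nodule", "nodule", "normal", "normal"]

raw = sum(a == b for a, b in zip(reader_a, reader_b)) / len(reader_a)
print(f"raw agreement: {raw:.0%}, kappa: {cohens_kappa(reader_a, reader_b):.2f}")
```

Here the two hypothetical readers agree on 8 of 10 cases (80%), but because both call most cases normal, much of that agreement is expected by chance, and kappa comes out at about 0.58. If two experts disagree this much, either reader’s labels make a shaky gold standard for scoring an AI model. In practice, scikit-learn’s `sklearn.metrics.cohen_kappa_score` computes the same statistic.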

A third challenge is the integration and adoption of AI models in the clinical workflow. Even if an AI model achieves high accuracy or reliability on a specific task or dataset, it does not necessarily follow that it will improve patient outcomes or radiologists’ efficiency. To do so, it needs to be integrated into the existing workflows and systems of radiology departments, which can pose technical and logistical challenges. It also needs to be accepted and adopted by its end users (e.g., radiologists), who may have concerns about the trustworthiness, reliability, explainability, accountability, and liability of AI models. Finally, it needs to comply with the ethical, legal, and regulatory standards and guidelines of the health care system, which may vary across regions and countries.
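One reason good test-set accuracy need not translate into clinical value is disease prevalence. The sketch below uses Bayes’ theorem to compute the positive predictive value (PPV), the share of AI-flagged cases that are truly abnormal, for a hypothetical model with 95% sensitivity and 95% specificity. These figures and the three prevalences are assumptions chosen for illustration, not results from any real product.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """Share of positive flags that are true positives (Bayes' theorem)."""
    p_flagged_diseased = sensitivity * prevalence              # diseased and flagged
    p_flagged_healthy = (1 - specificity) * (1 - prevalence)   # healthy but flagged
    return p_flagged_diseased / (p_flagged_diseased + p_flagged_healthy)


# Hypothetical model: 95% sensitivity and 95% specificity, applied at
# three assumed disease prevalences
for prevalence in (0.20, 0.05, 0.01):
    ppv = positive_predictive_value(0.95, 0.95, prevalence)
    print(f"prevalence {prevalence:.0%}: PPV {ppv:.1%}")
```

With these assumed figures, the PPV is about 82.6% at 20% prevalence, 50.0% at 5%, and only about 16.1% at 1%. In a screening setting where disease is rare, most positive flags from such a model would be false alarms that a radiologist still has to review.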

Despite these challenges and limitations, AI in radiology is not doomed to fail or fade away. On the contrary, AI has shown great promise to augment the capabilities and roles of radiologists, rather than replace them. It can assist radiologists in tasks such as image acquisition, reconstruction, enhancement, segmentation, annotation, detection, diagnosis, prognosis, prediction, recommendation, reporting, and education. It can help them cope with the growing workload and demand for imaging services by improving the speed, quality, and efficiency of image analysis and reporting. And it can enable more personalized and precise patient care by integrating and analyzing multiple sources of data, such as images, genomics, biomarkers, clinical history, and outcomes.

However, to realize these benefits, radiologists need to be actively involved and engaged in the development, evaluation, integration, and adoption of AI in radiology. They need to collaborate with AI researchers, engineers, developers, and vendors, to provide domain knowledge, clinical expertise, and feedback. They need to participate in the collection, annotation, and sharing of high-quality data for training and validating AI models. They need to critically appraise and validate the performance and utility of AI models in clinical practice, using rigorous methods and metrics. They need to advocate for the ethical, legal, and regulatory standards and guidelines for AI in radiology, to ensure its safety, quality, and accountability. They need to educate themselves and their colleagues on the principles, applications, and limitations of AI in radiology, to foster trust, confidence, and acceptance. And they need to adapt and update their skills and roles in the era of AI, to leverage its strengths and mitigate its weaknesses.

Other professions, such as law, are also going to be affected by AI. Much of the work of junior paralegals, such as finding case law, could plausibly be done with generative models; however, strategic thinking is likely to remain a human domain for a long time. We have been experimenting with AI for English insolvency law in a project we are working on with Royal Holloway University.

The hype and reality of AI in radiology can be compared with the hype and reality of natural language processing (NLP) and large language models (LLMs). NLP is the field of AI that deals with understanding and generating natural language (e.g., text or speech). LLMs are deep learning models that learn from very large amounts of text (e.g., billions of words) without explicit rules or features, and can generate coherent, fluent text on a wide range of topics and tasks.

As with AI in radiology, NLP and LLMs have been the subject of a great deal of hype and excitement in recent years. Impressive results attributed to LLMs include generating realistic news articles, summarizing long documents, answering complex questions, writing creative stories or poems, translating between languages, mimicking the style or tone of famous authors or celebrities, and even passing the Turing test (i.e., fooling humans into thinking they are talking to another human). Sensational headlines proclaimed that LLMs would soon replace human writers or editors, or that they understand natural language better than humans, and some prominent figures in AI made bold predictions about the imminent disruption of NLP by LLMs.

However, as more rigorous studies and evaluations have been conducted, the reality of NLP and LLMs has also proven to be much more nuanced and complex than the hype suggested. While NLP and LLMs have undoubtedly made significant progress in natural language understanding and generation and show real potential, many challenges and limitations must be addressed before they can be widely adopted and trusted across domains and applications.

One of the main challenges is the quality and availability of data. LLMs require large amounts of text for training and validation. However, such data are not easy to obtain, because of ethical, legal, technical, and practical issues. Text can contain sensitive personal information that needs to be protected and anonymized. And while LLMs are pre-trained on unlabeled text, the data used to fine-tune and evaluate them often need to be accurately labeled by experts, which is costly and time-consuming. Moreover, text is heterogeneous, varying by domain, genre, style, tone, language, dialect, culture, context, audience, purpose, and intent. As a result, models trained on one dataset may not generalize well to other datasets or scenarios, leading to poor performance or errors.

Another challenge is the evaluation and validation of LLMs. Unlike natural images, which can be easily categorized into discrete classes (e.g., cat or dog), natural language often contains multiple meanings or ambiguities that need to be resolved or clarified. Moreover, there is often variability or uncertainty in the interpretation or generation of natural language among different humans or even the same human at different times. This makes it difficult to define a clear ground truth or gold standard for evaluating LLMs. Furthermore, most studies on LLMs have been conducted in controlled settings or ideal conditions (e.g., predefined tasks or prompts), which may not reflect the real-world performance or utility of LLMs in various domains or applications (e.g., open-ended tasks or prompts).

A third challenge is the integration and adoption of LLMs in various domains or applications. Even if an LLM achieves high accuracy or reliability on a specific task or dataset, it does not necessarily follow that it will improve outcomes or efficiency for its users.


Unleash the potential of your NLP projects with the right talent. Post your job with us and attract candidates who are as passionate about natural language processing.

Hire NLP Experts

Senior lawyers should stop using generative AI to prepare their legal arguments! Or should they? A High Court judge in the UK has told senior lawyers off for their use of ChatGPT, because it invents citations to cases and laws that don’t exist!

Fast Data Science appeared at the Hamlyn Symposium event on “Healing Through Collaboration: Open-Source Software in Surgical, Biomedical and AI Technologies” Thomas Wood of Fast Data Science appeared in a panel at the Hamlyn Symposium workshop titled “Healing Through Collaboration: Open-Source Software in Surgical, Biomedical and AI Technologies”. This was at the Hamlyn Symposium on Medical Robotics on 27th June 2025 at the Royal Geographical Society in London.

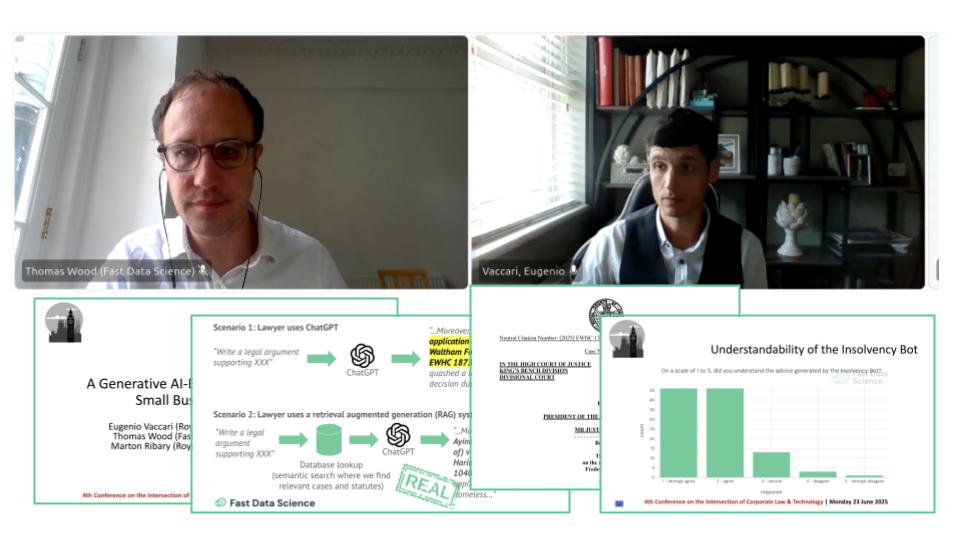

We presented the Insolvency Bot at the 4th Annual Conference on the Intersection of Corporate Law and Technology at Nottingham Trent University Dr Eugenio Vaccari of Royal Holloway University and Thomas Wood of Fast Data Science presented “A Generative AI-Based Legal Advice Tool for Small Businesses in Distress” at the 4th Annual Conference on the Intersection of Corporate Law and Technology at Nottingham Trent University

What we can do for you