There are many differences between deep neural networks and the human brain, although neural networks were biologically inspired.

When was the last time you looked at a picture of an animal, bird or plant, couldn’t place it straight away and were left flabbergasted? When we look at something, our brain fires off a series of neurons that runs that image across thousands to millions of ‘reference images’ stored in the brain. In the case of the unusual animal or bird that we spotted, it also looks for related details: size, diet, habitat, lifespan, and so on.

However, chances are you’re not an animal or plant expert and your brain is now racing to find the desired information – conducting a super-fast check against the repertoire of animal species information, for example, that it has stored. So, your mind would be comparing ears, paws, tails, snouts, noses, and everything else to come to a conclusion.

The processing you’re trying to do is simply your biological neural network reprocessing past experience or, animal reference information, if you will, to deal with the novel situation at hand.

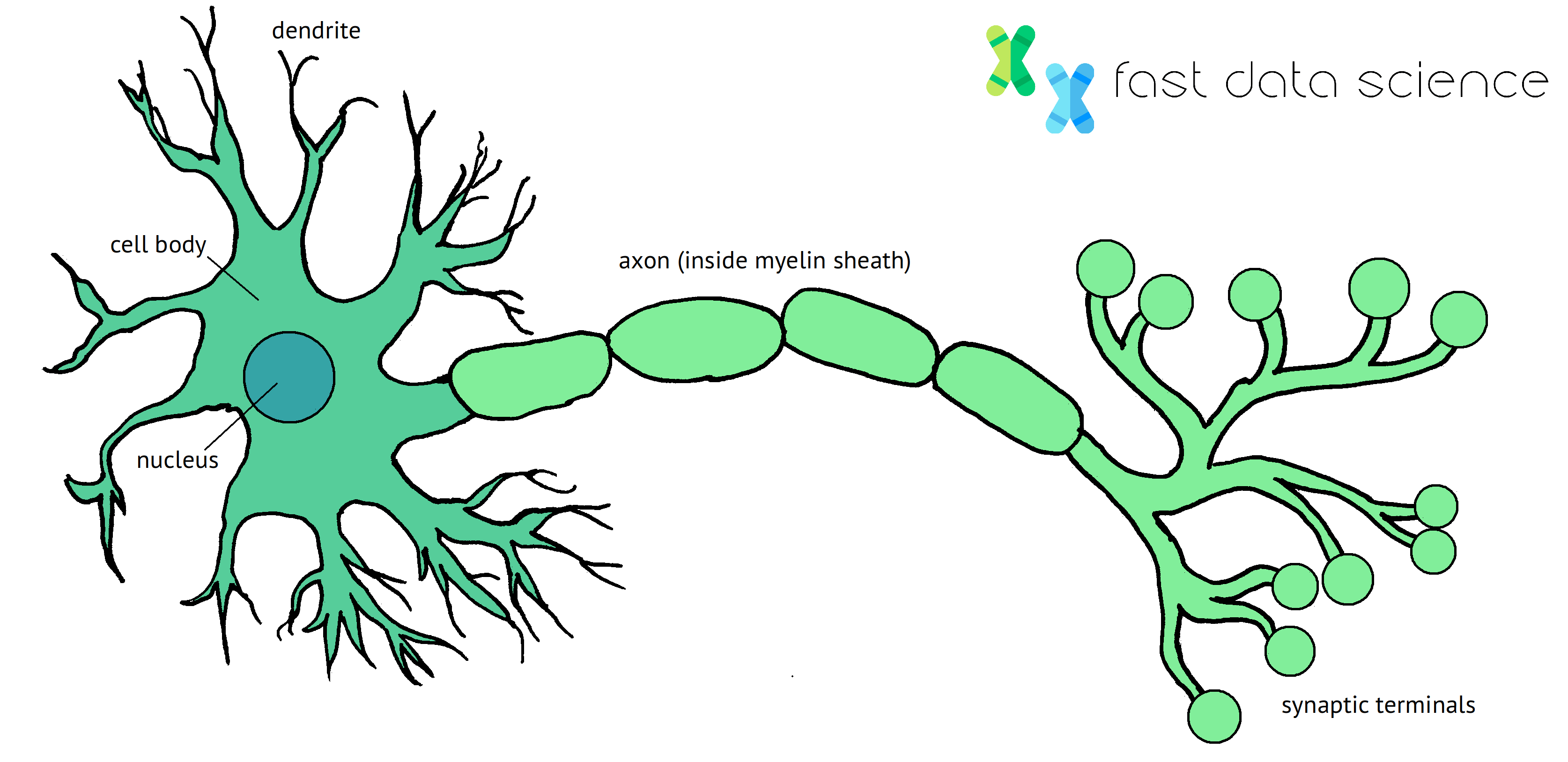

Above: Diagram of the human neuron and its parts.

Decades ago, when AI started to see a lot of popularity, there was much debate among scientists and researchers about making a machine that would have the ability to learn, adapt, and make decisions. The general idea was to have machines replicate the way the human brain learns.

The brain is primarily made up of a collection of neurons, all interconnected and firing electric signals back and forth to help the mind interpret things, reason, make decisions, etc. AI researchers at the time sought inspiration from this and tried to mimic the human brain’s function by creating artificial neurons.

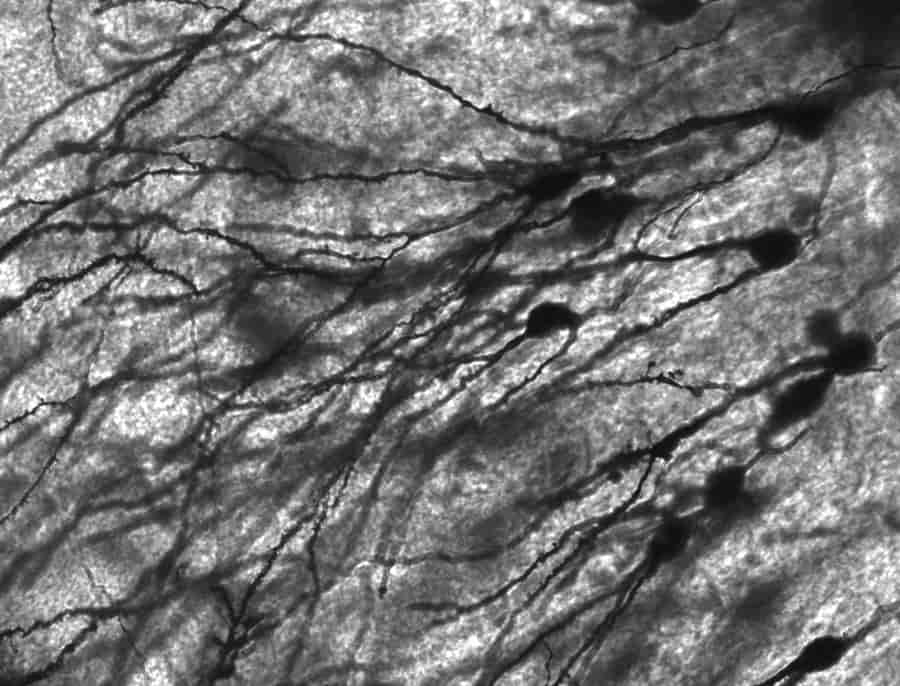

Above: Neurons in the human brain. Image source: Wikipedia. License: CreativeCommons Attribution-ShareAlike 2.5

Eventually, they discovered ways of connecting simple artificial neurons in some very complex ways, and the connections between those neurons in an artificial neural network (ANN) would help them create more complex outputs. If you remember the 1984 James Cameron movie, The Terminator, then you can imagine how this technology may be used to produce a machine or perhaps, a robot, which is capable of making lethal decisions on its own. Well, thankfully, it hasn’t come to that, and hopefully, the technology will be put to good use, like self-driving cars which can “see” and avoid obstacles, or a ‘smart robot’ acting as a hotel concierge.

The concept for artificial neural networks actually dates back to 1943, when neuroscientists were experimenting with a way to replicate how biological neurons worked in the human brain. In the late 1950s, Frank Rosenblatt, a respected psychologist at the time, evolved the idea by coming up with a single-layer neural network meant for supervised learning: the Perceptron.

This artificial neural network could “learn” from examples of data, and thus, train its network which would then apply that learning to a new set of data, and so on – much like how a biological neural network works: by learning new things, adapting, building a mind-muscle connection and keeping reference points in the brain (images, sounds, visual references, memories) to learn further.

Unfortunately, the Perceptron soon showed limitations in handling specific problems, mostly nonlinear functions. In 1986, a research paper was published by AI researchers which shed light on the “hidden layers” of neurons that may be used to solve some of the fundamental problems encountered by early builds of the Perceptrons, especially when fed and trained with large volumes of data.
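To make that limitation concrete, here is a minimal sketch of a single-layer perceptron trained with the classic error-correction rule. The function names, learning rate and epoch count are our own illustrative choices, not taken from Rosenblatt’s work. The sketch learns the linearly separable AND function but cannot fully learn XOR, which is a simple nonlinear problem.

```python
import numpy as np

def train_perceptron(X, y, epochs=20, lr=1.0):
    """Train a single-layer perceptron with the error-correction rule."""
    w = np.zeros(X.shape[1])  # one weight per input
    b = 0.0                   # bias term
    for _ in range(epochs):
        for xi, target in zip(X, y):
            pred = 1 if xi @ w + b > 0 else 0
            update = lr * (target - pred)  # zero when the prediction is right
            w += update * xi
            b += update
    return w, b

def predict(X, w, b):
    return (X @ w + b > 0).astype(int)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y_and = np.array([0, 0, 0, 1])  # linearly separable
y_xor = np.array([0, 1, 1, 0])  # not linearly separable

w_and, b_and = train_perceptron(X, y_and)
w_xor, b_xor = train_perceptron(X, y_xor)

and_accuracy = (predict(X, w_and, b_and) == y_and).mean()
xor_accuracy = (predict(X, w_xor, b_xor) == y_xor).mean()
print("AND accuracy:", and_accuracy)
print("XOR accuracy:", xor_accuracy)
```

No single straight line separates the XOR classes, so no amount of extra training lets this single-layer model classify all four points correctly.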

By 2006, researchers had worked out how to overcome the limitations of the Perceptron, by adding one or more hidden layers of neurons in the middle, together with extra functions to alter the values in the hidden nodes. This was the development of deep learning.

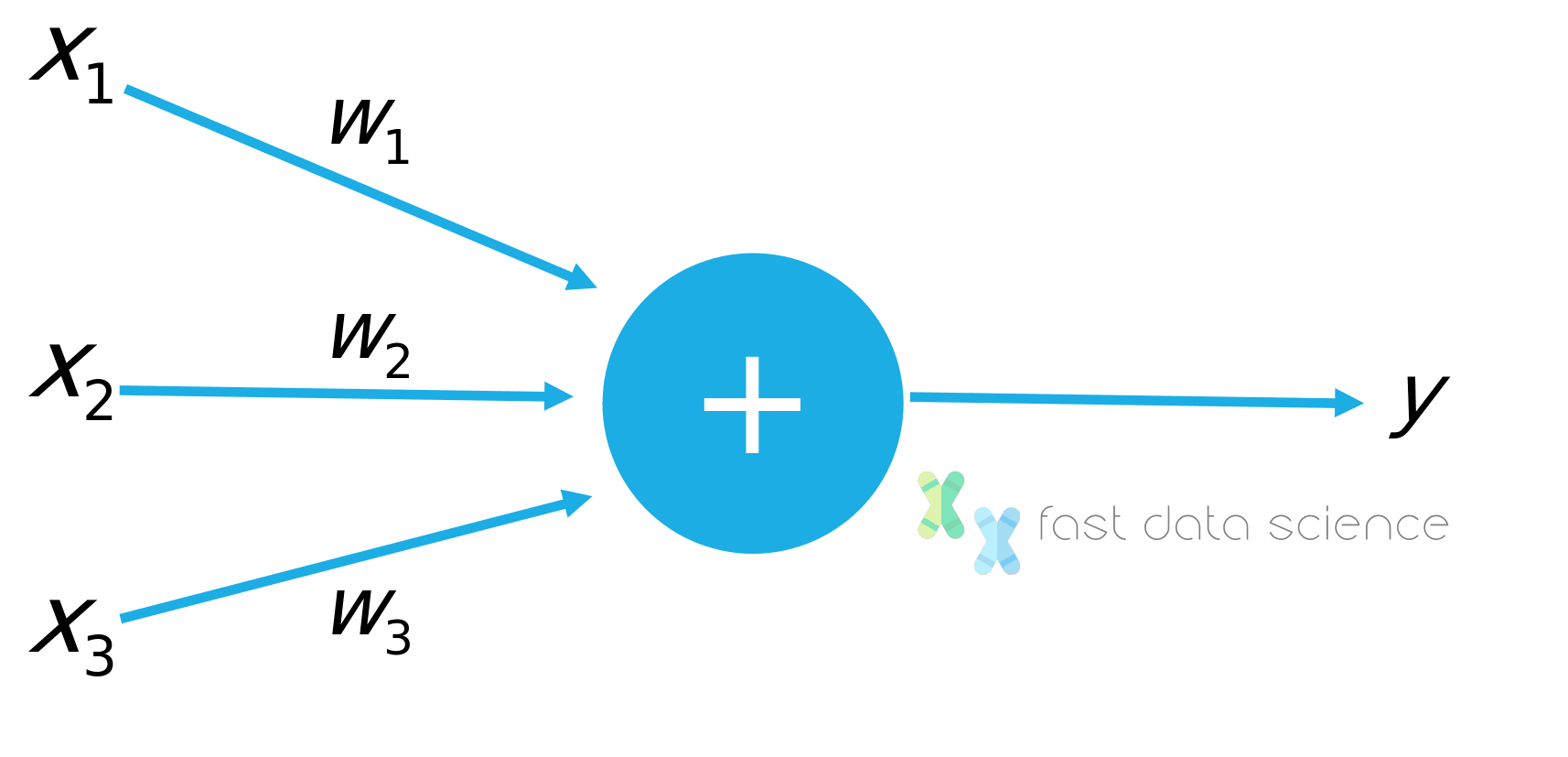

Above: a demonstration of how a hidden layer in a neural network works.
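As a rough illustration of why a hidden layer helps, the sketch below wires up a tiny two-layer network by hand so that it computes XOR, a function a single-layer perceptron cannot represent. The weights are hand-picked assumptions chosen for clarity rather than learned, and the step activation stands in for the “extra functions” applied at the hidden nodes.

```python
import numpy as np

def step(z):
    """Threshold activation: 1 where the input is positive, else 0."""
    return (z > 0).astype(int)

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])

# Hidden layer (hand-picked weights): first neuron acts as OR, second as AND.
W_hidden = np.array([[1, 1],
                     [1, 1]])        # columns correspond to hidden neurons
b_hidden = np.array([-0.5, -1.5])

# Output neuron: fires when OR is on but AND is off, which is XOR.
w_out = np.array([1, -1])
b_out = -0.5

hidden = step(X @ W_hidden + b_hidden)
xor_output = step(hidden @ w_out + b_out)
print(xor_output)
```

In practice these weights would be found by training rather than set by hand, but the structure is the same: the hidden layer re-describes the inputs so that the output neuron faces a problem it can solve.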

Around the same time, the evolution of cloud computing, heavy-duty GPU processors and big data re-sparked interest in lab-manufactured neural networks. Today, neural networks are the driving force behind many AI-enabled systems: voice assistants, image and facial recognition, online translation services, search engines, and large language models.

The nematode worm Caenorhabditis elegans only has 302 neurons! Despite this, some lucky C. elegans were selected to spend two weeks on the International Space Station in 2009, bypassing better qualified would-be astronauts.

The human brain is a very powerful and highly efficient processing machine, honed through millions of years of evolution. It sorts out massive volumes of information each day which we receive through our respective senses, associating known pieces of information with their respective ‘reference points’.

While the world of science and medicine has yet to fully understand how the human brain works, it is fair to say that we are at the beginning, or perhaps, in the midst of an era where we have the means to create our own version of the brain. Following decades of extensive research and development, neural scientists and researchers have created deep neural networks which can not only match but sometimes even surpass the human brain’s prowess in certain tasks.

If you’ve never heard of an artificial neural network, it happens to be one of the most influential and revolutionary technologies of the last 10-12 years or so – a fundamental piece of the puzzle when it comes to deep learning (DL) algorithms, and at the core of it is cutting-edge artificial intelligence (AI) technology. Of course, what else, right?

You may not realise the frequency with which you interact with neural networks each day – Amazon’s AI-powered assistant, Alexa, Apple’s Face ID smartphone lock for the iPhone and Google’s translation service are all examples of neural network applications. But they’re not just limited to how we interact with devices – neural networks are behind some of the most prominent AI breakthroughs, including self-driving cars which can “see” and medical equipment capable of diagnosing breast and skin cancer without human intervention or lengthy lab-prescribed tests.

Artificial neural networks are nothing new though. The concept has, in fact, existed for decades, but it’s only been in the last few years that promising and powerful applications have surfaced. This raises the question: are neural networks similar to the human brain? Are they better? And if so, how?

Well, the most glaring similarity between the two is neurons: the basic unit responsible for driving the nervous system. But what’s interesting to note is how those neurons take input in either case. According to the current understanding of the human neural network, input is taken via the dendrites and the required information is output via the axon.

Without getting too technical, the dendrites and axon both extend from the cell body which most neurons have. So, while one is responsible for receiving information, the other is responsible for relaying the information to the correct ‘nodes’ or neurons, so that our brain can process information and rapidly make decisions, when it’s called upon to do so.

Training neural networks

Now, according to research, the dendrites have different ways of processing input, which goes through a non-linear function before the information is passed on to the nucleus – another component of the cell body. But when we compare this to an artificial neural network, each input gets passed directly to a neuron and output is also taken directly from the neuron, in the same manner.

Outputs differ as well. A biological neuron fires a near-continuous stream of all-or-nothing electrical spikes, each measured in tens of millivolts, whereas an artificial neuron simply outputs a number: a binary value in the earliest models such as the Perceptron, and a continuous value in most modern networks. Perhaps this is starting to get too technical. Let’s put it this way:

The original motive for the pioneers of AI was to replicate human brain function: nature’s most complex and smartest known creation. This is why the field of AI has derived most of its nomenclature from the form and functions of the human brain, including the term AI or artificial intelligence.

So, artificial neural networks have taken direct inspiration from human neural networks. Even though a large part of the human brain’s functions remain a mystery, we do know this much: biological neural pathways or networks allow the brain to process massive amounts of information in the most complex ways imaginable, and that’s precisely what scientists are trying to replicate via artificial neural networks.

If you think Intel’s latest Core™ i9 processor running at 3.7GHz is powerful, then consider the human brain’s neural network in contrast: 100 billion neurons, which is what the brain uses for the most ‘basic’ processing. There’s absolutely no comparison in that sense between the two! The neurons in the human brain perform their functions through a massive network of interconnections known as synapses. On average, our brain has 100 trillion synapses, so that’s around 1,000 per neuron. Every time we use our brain, chemical reactions and electrical currents run across these vast networks of neurons.

Now, let’s shift our focus towards artificial neural networks or ANNs, in order to better understand the similarities. The core component in this case is the artificial neuron, with each neuron receiving inputs from multiple nearby neurons, multiplying them according to assigned weights, adding them and then passing the sum along to one or more neighbouring neurons. But some artificial neurons may apply an activation function to the output before it is passed to the next neuron.
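The weighted-sum-and-activation step can be sketched in a few lines of Python. The function name `artificial_neuron`, the optional bias term and the example numbers are illustrative assumptions rather than part of any particular library.

```python
import math

def artificial_neuron(inputs, weights, bias=0.0, activation=None):
    """Multiply each input by its weight, add them up, optionally activate."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(total) if activation else total

def sigmoid(z):
    """A common activation function that squashes any number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

inputs = [0.5, -1.0, 2.0]   # values arriving from three upstream neurons
weights = [0.4, 0.3, 0.1]   # assigned weights (made-up numbers)

raw_sum = artificial_neuron(inputs, weights)
activated = artificial_neuron(inputs, weights, activation=sigmoid)
print(raw_sum, activated)
```

Here the raw weighted sum is 0.5 × 0.4 − 1.0 × 0.3 + 2.0 × 0.1 = 0.1, and the sigmoid turns it into a value slightly above 0.5 before it would be handed to the next neuron.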

At the core of it all, this may sound like an overly simple, even trivial math equation. But when hundreds to thousands and even millions of neurons are placed in multiple layers and stacked on top of each other – the end result is an artificial neural network capable of performing even the most complicated tasks, like recognising speech and classifying images. Maybe the concept of machines taking over in future and looking every bit as human as the Terminator movies isn’t so far-fetched, after all.

Artificial neural networks are generally composed of three or more layers: an input layer, one or more hidden layers, and an output layer.

Here’s an example:

A neural network programmed to detect cars, persons and animals will have an output layer consisting of three nodes. On the other hand, a neural network designed to distinguish between safe and fraudulent bank transactions will have an output layer with just one node.
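The difference between the two output layers can be sketched with NumPy. Everything here is an assumption for illustration: the weights are random and untrained, the eight “hidden features” are made up, and softmax and sigmoid are simply common choices for multi-class and yes/no outputs.

```python
import numpy as np

rng = np.random.default_rng(0)

def dense(x, W, b):
    """One fully connected layer: weighted sums for every output node."""
    return x @ W + b

def softmax(z):
    """Turn raw scores into probabilities that sum to 1."""
    e = np.exp(z - z.max())
    return e / e.sum()

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

hidden_features = rng.normal(size=8)  # stand-in for the last hidden layer

# Car / person / animal detector: three output nodes, one per class.
W3, b3 = rng.normal(size=(8, 3)), np.zeros(3)
class_probs = softmax(dense(hidden_features, W3, b3))

# Safe vs fraudulent transaction: a single output node.
W1, b1 = rng.normal(size=(8, 1)), np.zeros(1)
fraud_prob = sigmoid(dense(hidden_features, W1, b1))

print(class_probs.shape, fraud_prob.shape)
```

The three-node layer yields one probability per class, while the single node yields one number that can be read as “how likely is this transaction to be fraudulent”.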

It should be noted that a major point of distinction between the brain and an artificial neural network is that for the same input, the artificial neural network will provide the same output, but the human brain might falter – it may not necessarily give the same response to the very same input, something we commonly refer to as human error in business terms.

By now, many variations of ANNs have been introduced.

For instance, a convolutional neural network (CNN) is used to process images – each layer applies a convolution process in conjunction with other operations on images – reducing or expanding the image’s dimensions as needed. This allows the network to capture the details which matter and discard everything else. Interestingly, the key functions and algorithmic computations done by convolutional neural networks were inspired by early findings about the human visual system – when scientists discovered that neurons present in the primary visual cortex respond a certain way to specific attributes in the environment, such as edges.
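To give a feel for what a convolution step does, here is a small sketch that slides a 3×3 filter over a toy image. The filter values are a hand-picked vertical-edge detector and `conv2d` is our own helper, not a library function. As in most deep learning code, the operation is technically a cross-correlation, since the kernel is not flipped.

```python
import numpy as np

def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i:i + kh, j:j + kw]
            out[i, j] = np.sum(patch * kernel)
    return out

# Toy 5x6 image: dark on the left, bright on the right.
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# Responds strongly where brightness increases from left to right.
vertical_edge = np.array([[-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0],
                          [-1.0, 0.0, 1.0]])

feature_map = conv2d(image, vertical_edge)
print(feature_map)
```

The output is smaller than the input (3×4 rather than 5×6) and is non-zero only around the boundary between dark and bright, which is the sense in which the layer keeps the detail that matters and discards the rest.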

Another evolution of the ANN architecture is capable of connecting various input/output layers in ways to allow networks to learn specific patterns. Recurrent neural networks or RNNs can link outputs from a layer to previous layers. This allows information to flow back into previous parts along a network – so we have a neural network which (in the present) is giving output based on past events. This application can be particularly useful in situations where a sequence is involved: handwriting recognition, speech, pattern and anomaly tracking, as well as other kinds of prediction that are based on ‘time sequence’ patterns.
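The feedback loop can be sketched as a simple recurrent cell in which the hidden state from the previous step is fed back in alongside the current input. The sizes, random weights and the `rnn_forward` helper are illustrative assumptions, and the network is untrained.

```python
import numpy as np

rng = np.random.default_rng(0)
input_size, hidden_size = 3, 4

# Random, untrained weights purely for illustration.
W_x = rng.normal(scale=0.5, size=(input_size, hidden_size))   # input -> hidden
W_h = rng.normal(scale=0.5, size=(hidden_size, hidden_size))  # hidden -> hidden
b = np.zeros(hidden_size)

def rnn_forward(sequence):
    """Run a simple recurrent cell over a sequence, one time step at a time."""
    h = np.zeros(hidden_size)  # the network's memory of what it has seen
    states = []
    for x_t in sequence:
        # The new state depends on the current input AND the previous state.
        h = np.tanh(x_t @ W_x + h @ W_h + b)
        states.append(h)
    return np.stack(states)

sequence = rng.normal(size=(5, input_size))
states = rnn_forward(sequence)

# Changing only the first input still changes the final state.
altered = sequence.copy()
altered[0] += 1.0
last_state_shift = np.abs(rnn_forward(altered)[-1] - states[-1]).max()
print(states.shape, last_state_shift)
```

Because the state is carried forward, an event at the start of the sequence still leaves a trace in the output at the end, which is what makes this family of networks suited to time-ordered data.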

There are also subcategories of RNNs – long short-term memory or LSTM networks are among them. These add capabilities like connecting really distant and recent neurons in rather smart and sophisticated ways. LSTMs are, therefore, best for predicting the next word a user might type online during a search query, text generation, machine translation and various predictive applications.

During that early research into the human visual system, two kinds of unique cells were discovered: simple cells and complex cells. While the former responded in a specific orientation only, the latter responded in multiple orientations. And so, it was concluded that the latter pooled over inputs from the former, which led to a spatial invariance in complex cells. This was pretty much what inspired the idea behind convolutional neural networks.
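The pooling idea can be illustrated with a toy example. In this sketch, which is a loose analogy rather than a model of real neurons, a row of “simple cell” responses is summarised by taking the maximum over small neighbourhoods, so a small shift in where the feature appears leaves the pooled “complex cell” response unchanged. The `max_pool_1d` helper and the numbers are our own.

```python
import numpy as np

def max_pool_1d(responses, size):
    """Take the maximum over consecutive, non-overlapping windows."""
    usable = len(responses) // size * size  # drop any incomplete window
    return responses[:usable].reshape(-1, size).max(axis=1)

# The same feature detected at two slightly different positions.
simple_a = np.array([0, 1, 0, 0, 0, 0, 0, 0])
simple_b = np.array([0, 0, 1, 0, 0, 0, 0, 0])  # shifted one step right

complex_a = max_pool_1d(simple_a, 4)
complex_b = max_pool_1d(simple_b, 4)
print(complex_a, complex_b)
```

Both inputs pool to the same result, which is the kind of spatial invariance that pooling layers in convolutional networks are designed to provide.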

Another major distinction between human neural networks and artificial ones, as we pointed out earlier, is the number of neurons. Older estimates put the figure for the human brain at approximately 100 billion, while more recent studies argue that it’s closer to 86 billion. By comparison, the total number of neurons in many regular ANNs is under 1,000 – nowhere close to the ‘mental horsepower’ of the human brain!

Plus, according to research, the power consumption of the human brain is somewhere around 20W, whereas the hardware needed to run an ANN, such as a single high-end GPU, can draw around 300W.

Well, we’ve talked a great deal about the similarities between human and artificial neural networks – it pays to shift the focus towards some of the limitations of ANNs.

In spite of how advanced the concept sounds and the exciting similarities they share with their human equivalent, ANNs are actually quite different. And even though neural networks combined with deep learning are at the cutting-edge of AI-based technology today, they’re a far cry from the intelligence the human brain is capable of producing.

Therefore, ANNs can fail at a number of things where a human mind would excel:

Unlike their biological neural network counterpart, neural networks require lots of data in the form of thousands to millions of examples, in order to even come close to the way the human brain works.

If you recall the example we discussed at the start of the article – about identifying a strange new plant or animal species – an artificial neural network would require thousands, perhaps millions of reference points or data, in order to identify something and give the correct output. Whereas, the human brain would take only a few seconds to process the information and relay the required information which would help us identify the unusual or uncommon plant, bird or animal species.

Even though a neural network will perform a task it has been trained for with nearly pinpoint accuracy, it will perform generally poorly at everything else, even if it’s similar in nature to the original query or problem.

For example, a dog classifier trained to recognise thousands of dog pictures will not detect pictures of cats. For that, it will require completely new pictures of cats, and again, in the thousands. Unlike human neural networks, artificial ones are not capable of developing knowledge in the form of symbols: eyes, ears, snout, tail, whiskers, etc. Instead, they process pixel values. This is why they need to be retrained from scratch every time, because they are simply incapable of learning about new objects in the context of high-level features.

Neural networks are capable of expressing their behaviour through neuron weights and activations only. This means that it’s generally very hard to understand the logic behind the decisions they make. This is why ANNs are often referred to as black boxes, which makes it really hard to determine if they are making decisions based on the correct factors. For instance, in the Loomis vs. Wisconsin case, a proprietary risk-assessment algorithm was used to inform the sentence, yet neither the court nor the defendant was allowed to examine how the algorithm arrived at its score, and critics have questioned whether tools of this kind reach their conclusions in a race-neutral way.

Plus, neural networks aren’t, by definition, meant to replace traditional rule-based algorithms which can reason in a clear manner and are codified into fixed rules. So, for instance, neural networks generally perform poorly when we talk about solving math-based problems.

Another major downside worth noting is that it’s generally hard to visualise what’s going on ‘behind the scenes’ of neural networks. Just like trying to visualise how the human mind makes a decision, it’s practically impossible to examine or uncover how a specific neural net input results in an output – in any transparent, meaningful or explainable way – in order for neural scientists to improve the overall process.

Neural networks tend to be pretty good at classifying and clustering sets of data, but they are not particularly resourceful at making decisions or learning in scenarios where reasoning and deduction are involved. In fact, many studies are starting to discover how artificial neurons learn in a very different and distinct way, compared to the human brain.

It’s fair to say that we have our work cut out and a long road ahead of us before we can accomplish human-level AI and deep learning to drive neural network-based applications. But we’re definitely a lot closer than we were, say, 30 years ago.

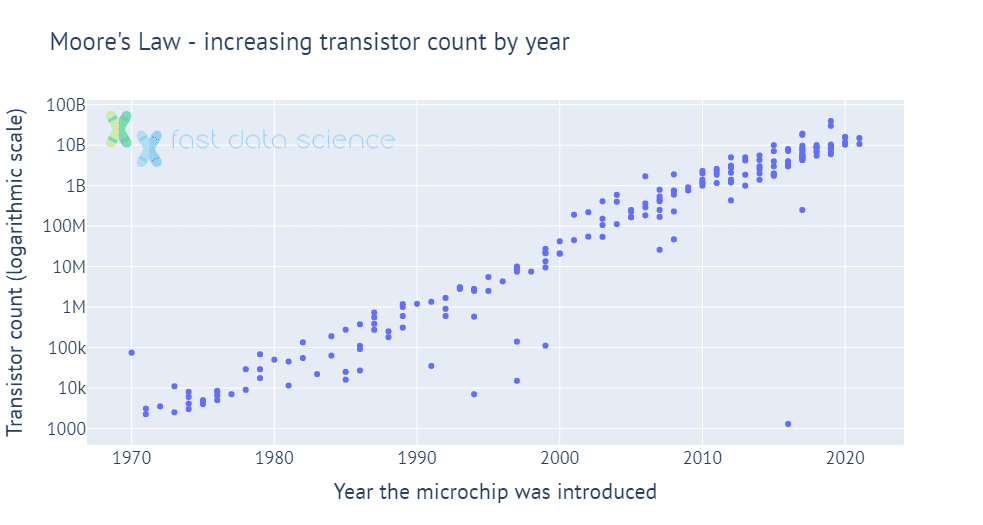

Above: Moore’s law - showing the exponential increase in computing power since the 1970s. Data source: Wikipedia.

As the gap between the human brain and artificial neural networks continues to close, we may witness artificial intelligence being used in ways that are inconceivable today. But here’s hoping we don’t have a ‘machine man’ walking around telling us “the end is near”.

On a more positive note, however, the power of AI and neural networks can certainly be used to power self-driving cars or even self-flying air taxis. They can be used in medical applications to detect cancer in the early stages or other debilitating diseases which require early intervention. We may even witness the use of artificial neural networks in the court of law, to decide cases and hand down sentences, although that remains debatable at the moment, especially from a human rights perspective.

If the technology is used for the greater good, then we may soon witness the next technological revolution – all based on what we know about the human brain.
