The emergence of big data has revolutionized industries, transforming traditional business models and decision-making processes. In this comprehensive exploration, we delve into what big data is, its significant impacts on business strategy, and how companies can leverage vast datasets to drive innovation and competitive advantage.


Big data involves large volumes of data that traditional data processing tools cannot efficiently process or analyze. It comes from various sources, including sensors for monitoring environmental data, social media interactions, multimedia content, transaction records, and GPS data from smartphones. This exponential increase in data is driven by the widespread adoption of digital technologies, leading to significant challenges and opportunities in data management and analysis. The handling of big data requires specialized technologies and approaches, such as distributed computing frameworks, advanced analytics, and artificial intelligence techniques to extract valuable insights from the data.

Big data is characterized by six distinct dimensions, commonly known as the six V’s. Each of these characteristics highlights the challenges and opportunities inherent in handling and processing large datasets.

Volume The volume of data refers to the immense amounts of data generated every second. This is the core characteristic that makes data “big.” In our digital age, countless sources produce large volumes of data, including business transactions, social media platforms, digital images and videos, and sensor or IoT data. Organizations must have the capacity to store, process, and analyze this data to extract useful insights.

Velocity Velocity in the context of big data refers to the speed at which data is created, stored, analyzed, and visualized. In the modern business landscape, data is generated in real-time from various sources such as RFID tags, sensors in industrial machinery, and customer interactions on websites. The ability to manage the velocity of data is crucial for real-time decision-making and operational efficiency.

Variety Big data comes in a myriad of formats. This variety includes structured data (like SQL database stores), semi-structured data (like XML or JSON files), and unstructured data (such as text, email, video, and audio). Handling this variety involves accessing, storing, and analyzing data from disparate sources, often requiring specialized tools and methods.

Veracity Veracity refers to the reliability and accuracy of data. High-quality data must be clean, accurate, and credible to be valuable. However, big data often includes noise, biases, and abnormalities, which can compromise the quality of insights derived. Ensuring veracity involves employing advanced data processing and verification techniques to clean and validate data effectively.

Value The fifth V stands for value. It emphasizes the importance of turning big data into actionable insights that create value for the organization. This involves extracting meaningful patterns, trends, and information that can be used to make business decisions, innovate, and maintain competitive advantages. Without extracting value, the other characteristics of big data (volume, velocity, variety, and veracity) are moot.

Variability The final V, variability, refers to the inconsistency and unpredictability in data types, formats, and sources over time. This characteristic poses significant challenges in data integration and analysis, requiring advanced tools and strategies to manage effectively. Strategies like data standardization, utilizing flexible analytics tools, and adopting adaptable data storage solutions, such as data lakes, can help mitigate the effects of variability.
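
The data standardization mentioned above, applied to the structured and semi-structured formats listed under variety, can be sketched as follows. This is a minimal illustration using only the Python standard library. The field names `customer_id` and `amount`, the XML element name `sale`, and the sample inputs are all hypothetical; real feeds would need schema validation and error handling.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET


def from_csv(text):
    """Structured input: one record per CSV row."""
    return [
        {"customer_id": row["customer_id"], "amount": float(row["amount"])}
        for row in csv.DictReader(io.StringIO(text))
    ]


def from_json(text):
    """Semi-structured input: a JSON array of objects."""
    return [
        {"customer_id": str(item["customer_id"]), "amount": float(item["amount"])}
        for item in json.loads(text)
    ]


def from_xml(text):
    """Semi-structured input: <sale> elements carrying attributes."""
    root = ET.fromstring(text)
    return [
        {"customer_id": node.get("customer_id"), "amount": float(node.get("amount"))}
        for node in root.iter("sale")
    ]


# Hypothetical sample inputs, one per format.
csv_text = "customer_id,amount\nC1,19.99\n"
json_text = '[{"customer_id": "C2", "amount": 5}]'
xml_text = '<sales><sale customer_id="C3" amount="7.50"/></sales>'

# Every source ends up in the same shape, so downstream analysis
# does not need to know where a record came from.
records = from_csv(csv_text) + from_json(json_text) + from_xml(xml_text)
print(records)
```

Converting each source into one common record shape at the point of ingestion means that a new or changed format only requires a new adapter function, not changes to the analysis code.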

It’s worth pointing out that big data can often be

| Example | Data type | Challenges |
|---|---|---|
| Foot traffic data | Latitudes and longitudes, velocities, accelerometer readings, device IDs | Data runs to terabytes and cannot fit on a laptop. Specialist big data software such as Athena, running on the cloud, is needed to process it. Before anything can be done with it, it must be transformed or grouped. Privacy concerns may also apply. |
| Business analytics data | Transactions, customer IDs, dates and times | Volume of data, difficulty of getting valuable business insights from it |
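
As an illustration of the "transformed or grouped" step for foot traffic data, the sketch below buckets location pings into a coarse grid and counts distinct devices per cell. It is a small in-memory example under stated assumptions: the cell size of 0.01 degrees, the tuple layout `(device_id, lat, lon)`, and the sample pings are all hypothetical. At terabyte scale the same grouping would be expressed in a distributed query engine rather than a Python loop.

```python
import math
from collections import defaultdict

CELL_DEGREES = 0.01  # assumed grid size, roughly 1 km of latitude


def grid_cell(lat, lon, cell=CELL_DEGREES):
    """Map a coordinate to the integer index of the grid cell containing it."""
    return (math.floor(lat / cell), math.floor(lon / cell))


def devices_per_cell(pings):
    """Count distinct device IDs seen in each grid cell."""
    devices = defaultdict(set)
    for device_id, lat, lon in pings:
        devices[grid_cell(lat, lon)].add(device_id)
    return {cell: len(ids) for cell, ids in devices.items()}


# Hypothetical pings: (device_id, latitude, longitude).
pings = [
    ("a", 51.5074, -0.1278),
    ("b", 51.5079, -0.1271),
    ("a", 51.5075, -0.1279),  # same device again, counted once
    ("c", 51.5234, -0.1045),
]

counts = devices_per_cell(pings)
print(counts)
```

Aggregating to cells also helps with the privacy concern noted in the table, because the output contains counts rather than individual device trajectories, although aggregation alone does not guarantee anonymity.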

Big data has revolutionized many industries by providing powerful insights that were not previously accessible. Here are some prominent use cases of big data across different sectors:

Improving Customer Service Big data enables businesses to analyze extensive customer interaction and transaction data to understand customer behavior and preferences better. This analysis can lead to more personalized service, where businesses anticipate needs and provide tailored recommendations, enhancing customer satisfaction and loyalty. For instance, e-commerce platforms use big data to suggest products based on browsing and purchase history.

Developing New Products and Services By analyzing trends, preferences, and market demands through big data, companies can innovate and create products or services that align closely with the customers’ needs. This use of big data can significantly shorten the product development cycle, improve market fit, and increase the success rate of new products. An example is streaming services like Netflix using viewing patterns to not only recommend shows but also to create content that is likely to be popular among certain demographics.

Fraud Detection Big data tools can process and analyze millions of transactions in real time to identify patterns that indicate fraudulent activities. This capability is especially crucial in industries like banking and finance, where rapid detection of fraud can prevent substantial financial losses. Techniques such as anomaly detection are used to flag unusual transactions that could indicate fraud.
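
A very simple form of the anomaly detection mentioned above can be sketched with a robust outlier score. The example uses the modified z-score based on the median absolute deviation (MAD), with the commonly used rule-of-thumb threshold of 3.5. The function name `flag_anomalies` and the sample amounts are hypothetical, and production fraud systems typically combine many more signals than the transaction amount alone.

```python
import statistics


def flag_anomalies(amounts, threshold=3.5):
    """Return the amounts whose modified z-score exceeds the threshold.

    The score is 0.6745 * |x - median| / MAD, which is less distorted
    by the outliers themselves than a mean and standard deviation.
    """
    median = statistics.median(amounts)
    mad = statistics.median([abs(a - median) for a in amounts])
    if mad == 0:
        # All values are (nearly) identical; this score cannot rank them.
        return []
    return [a for a in amounts if 0.6745 * abs(a - median) / mad > threshold]


# Hypothetical card transactions for one customer.
amounts = [12.5, 9.99, 14.2, 11.0, 13.75, 10.4, 950.0]
print(flag_anomalies(amounts))
```

A median-based score is used here because a single very large transaction would inflate an ordinary standard deviation and could hide itself from a plain z-score test.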

Risk Management Big data analytics play a crucial role in risk assessment by allowing companies to better understand and mitigate risks. By analyzing large volumes of data from various sources, companies can identify potential risks before they materialize and make informed decisions to manage them. For example, insurance companies utilize big data to set premiums based on predictive models of risk levels associated with individual clients or policies. For pharmaceutical clients such as Boehringer Ingelheim and the Bill and Melinda Gates Foundation, Fast Data Science have set up natural language processing tools to analyse clinical trials and predict the risk level or complexity of a clinical trial.

The term “big data” has become a major aspect of modern business and technology landscapes, but its origins extend back to earlier times when the use of large datasets first began to emerge. Understanding its evolution helps illustrate how critical big data has become in today’s decision-making processes.

The roots of big data can be traced back to the late 20th century when both businesses and researchers began to utilize large datasets to uncover trends and insights that were not apparent from smaller sets. This period marked the beginning of data-driven strategies, but it was largely limited to structured data that could be neatly stored and processed in traditional database systems.

The proliferation of the internet and the digitization of many aspects of daily life in the late 1990s and early 2000s led to an explosion in data generation. This era saw the emergence of vast amounts of unstructured data, including text, images, videos, and data from various sensors and devices connected to the Internet of Things (IoT).

Initially, businesses relied on Business Intelligence (BI) tools to analyze data, which were adept at handling structured, limited-volume data. However, as data grew in volume, variety, and velocity, these tools became insufficient. This limitation spurred the development of more sophisticated data processing technologies capable of handling big data’s complexities.

Today, big data encompasses not only vast amounts of data generated from traditional sources but also from web interactions, mobile devices, wearables, and IoT devices. This shift has fundamentally transformed big data analytics from merely descriptive—telling what happened—to predictive and prescriptive—suggesting future actions and strategies. This transformation is supported by advanced technologies such as machine learning, artificial intelligence, and cloud computing, which enable the processing and analysis of big data at speeds and scales previously unattainable.

Big data can originate from a multitude of diverse sources, each contributing vast volumes of information. Understanding these sources is crucial for leveraging big data effectively. Here are some of the primary sources:

Social Media Social media platforms like Facebook, Twitter, Instagram, and LinkedIn are prolific sources of big data. They generate extensive data through user interactions such as posts, comments, likes, and shares. This data is incredibly valuable for analyzing user behavior, trends, and preferences.

Transaction Data Transaction data is generated every time a purchase is made. This includes swiping credit cards, using online payment systems, or transactions made through loyalty cards in stores. Such data is crucial for understanding consumer purchasing behaviors, preferences, and market trends.

Machine-Generated Data This category includes data automatically generated from machines and sensors, without human intervention. Examples include data from industrial machinery, medical devices, traffic cameras, and smart meters. Machine-generated data is essential for applications such as predictive maintenance and operational optimization.

Web Logs Web logs capture detailed information every time a user visits a website. This includes data like the visitor’s IP address, the pages visited, time spent on each page, and navigation paths. Analyzing web log data helps in understanding user engagement, optimizing website performance, and enhancing overall user experience.
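
To make the web log analysis above concrete, the sketch below parses lines in the widely used Common Log Format and counts successful page views per path. It assumes that format; many servers use extended variants, so the regular expression is an illustration rather than a general parser. The sample lines use documentation-only IP addresses and hypothetical paths.

```python
import re
from collections import Counter

# Common Log Format: host ident user [time] "method path protocol" status size
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) \S+" (?P<status>\d{3}) (?P<size>\S+)'
)


def parse_line(line):
    """Return the fields of one log line as a dict, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    return match.groupdict() if match else None


def page_views(lines):
    """Count requests per path, keeping only responses with status 200."""
    counts = Counter()
    for line in lines:
        entry = parse_line(line)
        if entry and entry["status"] == "200":
            counts[entry["path"]] += 1
    return counts


# Hypothetical log lines.
sample_lines = [
    '203.0.113.5 - - [10/Oct/2024:13:55:36 +0000] "GET /pricing HTTP/1.1" 200 2326',
    '203.0.113.9 - - [10/Oct/2024:13:56:01 +0000] "GET /pricing HTTP/1.1" 200 2326',
    '203.0.113.5 - - [10/Oct/2024:13:57:12 +0000] "GET /missing HTTP/1.1" 404 153',
    "not a log line",
]

views = page_views(sample_lines)
print(views.most_common())
```

Lines that do not match the pattern are skipped rather than raising an error, which is usually the safer default when logs contain occasional malformed entries.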

Each of these data sources plays a vital role in the generation of big data, offering unique insights and opportunities for analysis and application in various fields.

Big data is transforming business strategies by serving as a strategic asset that redefines competitive dynamics across industries. Here are several ways in which companies are leveraging big data to enhance their business strategies:

Enhanced Decision Making One of the most significant impacts of big data is its ability to improve decision-making processes. With big data analytics, companies can identify trends, predict customer behavior, and make informed decisions that are backed by data. For example, retailers analyze customer purchase data to optimize stock levels and promotional strategies, increasing efficiency and reducing costs.

Improved Customer Insights Big data allows companies to analyze detailed customer behavior and trends. This analysis helps businesses tailor their products and services to better meet customer needs, thereby enhancing customer satisfaction and loyalty.

Operational Efficiency Big data analytics helps streamline operations, reduce costs, and enhance productivity. It enables businesses to identify inefficiencies and bottlenecks in their operations and to develop strategies to overcome them, leading to more efficient processes.

Transforming Industries Big data has the potential to completely transform industries by enabling the creation of new business models and services. For instance, healthcare providers utilize big data to personalize treatment plans and predict disease outbreaks. Similarly, financial institutions use big data to detect fraud and manage risks more effectively.

Competitive Advantage In today’s fast-paced business environment, having a competitive edge is crucial. Big data provides this edge by enabling organizations to quickly gather, analyze, and act on insights. Companies that can effectively utilize big data insights can outperform competitors who are slower or rely on traditional methods of data analysis.

Big data analytics finds applications across nearly every industry, significantly enhancing operations and decision-making processes. Below are some key sectors where big data is making a substantial impact:

In the healthcare sector, big data is used to predict epidemics, support research into new treatments, improve quality of life, and prevent avoidable deaths. With the massive volumes of patient data available, analytics can lead to breakthroughs in treatment protocols, enhance patient outcome predictions, and more effectively manage healthcare costs.

Retailers leverage big data for a variety of purposes including customer segmentation, inventory management, sentiment analysis, and personalized marketing. This extensive data analysis helps retailers understand consumer behaviors more deeply, optimize their shopping experiences, and boost customer loyalty and retention.

In finance, big data is crucial for conducting risk analytics, enabling algorithmic trading, detecting fraud, and managing customer relationships. Financial institutions analyze petabytes of data to uncover insights that lead to smarter, data-driven investment decisions and enhance operational efficiency.

Telecommunication companies use big data to enhance customer service, reduce churn rates, optimize network quality, and provide tailored promotions and products. By analyzing data from customer usage and network performance, telecom operators can deliver better services and anticipate customer needs more effectively.

Big data is not just a concept but a practical tool that leading companies utilize to enhance their business operations and customer experiences. Here are several prominent case studies:

Amazon leverages big data to transform the online shopping experience. By analyzing vast amounts of data related to customer browsing and purchasing history, Amazon is able to offer highly personalized shopping experiences. This includes tailored product recommendations that align closely with the user’s preferences and past behavior. This level of personalization not only enhances customer satisfaction but also significantly increases the likelihood of purchases.

Netflix uses big data to revolutionize the entertainment industry. By analyzing viewer preferences and viewing habits, Netflix not only recommends movies and shows that match users’ tastes but also uses this data to make strategic decisions about which new content to produce. This approach ensures that the new shows and movies are likely to be well-received, optimizing their investment in content production and maintaining high engagement levels among subscribers.

Walmart employs big data to enhance inventory management and customer service. By analyzing sales data, weather information, and economic indicators, Walmart can predict product demand more accurately. This predictive capability allows for better stock optimization across stores and minimizes overstock and understock scenarios, improving overall customer satisfaction.

Google uses big data to optimize its search algorithms, improving the relevance and speed of search results. Additionally, Google analyzes vast datasets from user interactions to enhance its advertising models, making them more targeted and effective. This has profound implications for digital marketing, providing advertisers with better tools to reach their desired audiences.

Healthcare providers use big data to implement predictive analytics in patient care. This includes analyzing historical health data to predict patient risks for various conditions and diseases, allowing for earlier interventions. For example, by analyzing patterns from a large number of patients, healthcare providers can identify those at risk of chronic diseases like diabetes or heart disease early and provide preventive measures.

Barclays uses big data for risk management and fraud detection. By analyzing transaction data in real time, Barclays can detect unusual patterns that may indicate fraudulent activities, thus enhancing the security of customer transactions. Additionally, big data aids in risk assessment models that help the bank understand and mitigate financial risks more effectively.

Big data analytics relies on a variety of technologies that enable the processing, analysis, and storage of large volumes of data. Below are some key technologies that support these processes:

Hadoop Hadoop is an open-source framework that supports the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage.
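
The best-known of these programming models is MapReduce. The sketch below imitates its three stages (map, shuffle, reduce) in plain Python on a single machine, using the classic word count task. It does not use Hadoop's API; the function names are hypothetical and chosen only to mirror the stages. In a real cluster the map and reduce calls would run in parallel on different nodes, and the shuffle would move data across the network.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Shuffle: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    pairs = (pair for doc in documents for pair in map_phase(doc))
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


# Hypothetical input: each string stands in for a file stored on a different node.
documents = ["big data", "Big insights from data"]
print(word_count(documents))
```

Because each map call sees only one document and each reduce call sees only one key, the work can be split across machines without the functions needing to know about each other.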

NoSQL Databases Unlike traditional relational databases, NoSQL databases are designed to expand and contract easily, supporting rapid and flexible development and deployment of applications. They are especially useful for working with large sets of distributed data and are adept at handling various data types including structured, semi-structured, and unstructured data.

Machine Learning and AI Machine learning and artificial intelligence are crucial for analyzing big data. These technologies enable computers to predict outcomes and make intelligent decisions based on data patterns, without being explicitly programmed for each step.

Data Lakes Data lakes are storage architectures that allow organizations to store vast amounts of raw data in its native format until it is needed. Unlike traditional databases, data lakes are designed to handle vast amounts of heterogeneous data, from emails and text documents to video and audio.

Spark Apache Spark is an open-source unified analytics engine for large-scale data processing. It provides high-level APIs in Java, Scala, Python, and R, and an optimized engine that supports general execution graphs. Spark is well-known for its speed in analytic applications and its ability to process streaming data.

Kafka Apache Kafka is a distributed streaming platform that lets you publish and subscribe to streams of records, store records in a fault-tolerant way, and process them as they occur. Kafka is widely used for real-time analytics and has become integral in handling the influx of data from various sources like sensors, social media feeds, and scientific applications.

Cloud Computing Platforms Cloud services like AWS, Google Cloud Platform, and Microsoft Azure provide comprehensive ecosystems for big data analytics. These platforms offer vast computing resources and scalability that allow businesses to store and analyze massive data sets efficiently and cost-effectively.

These technologies are at the forefront of big data analytics, providing the tools needed to transform large volumes of complex data into actionable insights that drive decision-making and innovation.

While big data offers significant opportunities for insight and innovation, its implementation is not without challenges. Below are some of the key hurdles organizations face when deploying big data analytics:

Data Privacy and Security With the vast amounts of sensitive information processed through big data systems, ensuring privacy and security is crucial. As data breaches become increasingly common, companies face the challenge of securing data and managing privacy. Compliance with legal frameworks like GDPR in Europe and HIPAA in the United States, which govern the use and protection of data, is mandatory and complex.

Skill Gap The demand for professionals skilled in big data technologies, analytics, and machine learning significantly outstrips supply. This skill gap can hinder the adoption and effective utilization of big data technologies, as organizations struggle to find and retain the necessary talent.

Data Silos and Integration One of the substantial barriers in big data analytics is integrating disparate data sources and breaking down data silos within organizations. These silos can prevent the seamless flow of information, complicating efforts to aggregate and analyze data comprehensively.

Data Quality and Integrity Poor data quality can lead to inaccurate analyses and misguided decisions. Ensuring the accuracy, consistency, and integrity of large datasets is a major challenge. Data must be cleansed and standardized to be useful in analytics, a process that can be both time-consuming and resource-intensive.

Cost of Implementation The cost of implementing big data infrastructure can be prohibitive for many organizations. This includes the cost of technology, as well as the ongoing expenses related to data storage, processing, and analysis.

Technology Integration Integrating new big data technologies with existing IT infrastructures can be challenging. Organizations often face difficulties in merging new solutions with their legacy systems without disrupting existing operations.

Managing Data Volume and Velocity The sheer volume and speed at which data is generated pose significant challenges in terms of storage, processing, and analysis. Managing these aspects requires sophisticated technologies and approaches, which can complicate the architecture of big data solutions.

As technology continues to advance, the scope and impact of big data analytics are expected to grow significantly. Here are several key trends that are poised to shape the future of big data:

Increased Integration of IoT The Internet of Things (IoT) continues to drive big data growth as more devices become connected and generate vast amounts of data. This expansion enhances the variety, velocity, and volume of data, providing deeper insights into consumer behaviors, industrial performance, and environmental monitoring.

Enhanced Predictive Analytics With advancements in analytical tools, predictive analytics is becoming more accurate and accessible. This technology allows for more precise forecasting and decision-making across various sectors, including finance, healthcare, and supply chain management.

Focus on Data Privacy Data privacy remains a critical focus due to increasing regulatory and consumer pressures. Technologies that secure data while enabling robust analysis, such as differential privacy and blockchain, will be crucial.
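
As a rough illustration of differential privacy, the sketch below applies the Laplace mechanism to a counting query: noise drawn from a Laplace distribution with scale 1/epsilon is added to the true count, since adding or removing one person changes a count by at most 1. The function name `private_count`, the epsilon value, and the example count are hypothetical, and a production system would also need to track the total privacy budget spent across queries.

```python
import random


def private_count(true_count, epsilon, rng):
    """Return a noisy count using the Laplace mechanism.

    A counting query has sensitivity 1, so the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    scale = 1.0 / epsilon
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution with location 0 and that scale.
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_count + noise


rng = random.Random(0)  # seeded only so the example is repeatable
# Hypothetical query: how many customers bought a given product.
print(private_count(1000, epsilon=0.5, rng=rng))
```

The noise averages out to zero over many queries, so aggregate statistics stay useful while any single released figure reveals little about whether one individual is in the data.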

Quantum Computing Quantum computing is expected to dramatically increase the processing power available for data analytics, enabling the handling of even larger datasets and more complex models efficiently.

Edge Computing By processing data near its source, edge computing reduces the latency associated with big data analytics. This is particularly beneficial for real-time applications in industries such as automotive (self-driving vehicles), manufacturing, and healthcare.

Artificial Intelligence and Automation AI and machine learning algorithms are becoming increasingly sophisticated and are expected to handle more complex data analysis tasks without human intervention. This includes automating data cleaning and preprocessing, which are time-consuming but critical steps in data analysis.

Augmented Analytics Augmented analytics uses machine learning and AI to augment human decision-making with data insights. This trend is expected to democratize data analytics by making insights more accessible across business units, leading to more informed decision-making at all levels of an organization.

Data as a Service (DaaS) The concept of Data as a Service is gaining momentum, where data and analytics are provided as a service through cloud platforms. This allows companies to scale their data initiatives quickly without large upfront investments in infrastructure.

Big data is not merely a technology trend; it represents a paradigm shift that is reshaping how organizations operate and compete in a data-driven world. This shift is evident across various sectors and has significant implications for decision-making, operational efficiency, and customer engagement. From healthcare to retail, finance, and telecommunications, big data is transforming industries by enabling more precise analytics, improving customer experiences, and optimizing operations. It allows companies to predict trends, personalize services, and make informed strategic decisions. Technologies like Hadoop, NoSQL databases, machine learning, and cloud computing are essential enablers of big data analytics. These technologies provide the necessary infrastructure to process and analyze vast amounts of data efficiently. Despite its benefits, the implementation of big data comes with challenges such as data privacy concerns, skill gaps, and integration issues. Addressing these challenges is crucial for organizations to leverage big data effectively. The future of big data includes increased integration of IoT, advances in predictive analytics, and a greater focus on data privacy. Innovations like quantum computing and edge computing are expected to further enhance the capabilities of big data analytics.

The ability to effectively manage and analyze large datasets is increasingly becoming a critical success factor. As we continue to generate vast amounts of data, the tools and techniques for harnessing this data are evolving. Organizations that can adeptly manage and analyze their data gain a competitive edge, innovate more swiftly, and deliver enhanced value to their customers. Embracing big data is not just an option but a necessity in today’s data-driven world. The revolution of big data is just beginning, and its full impact is yet to be realized.

Ready to take the next step in your NLP journey? Connect with top employers seeking talent in natural language processing. Discover your dream job!

Find Your Dream Job

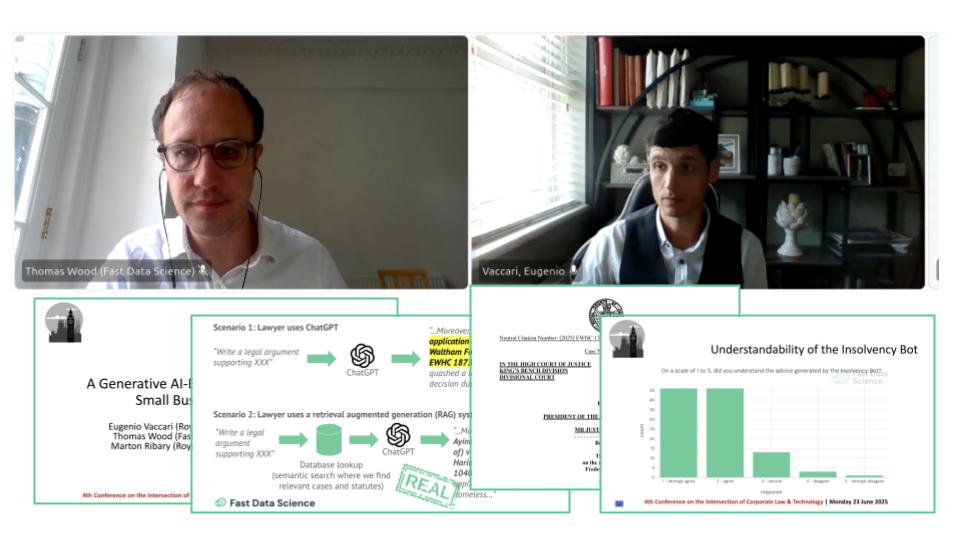
