Read more about AI for business on fastdatascience.com

What are artificial neural networks and how do they learn? What do we use them for? What are some examples of artificial neural networks? How do we use neural networks?

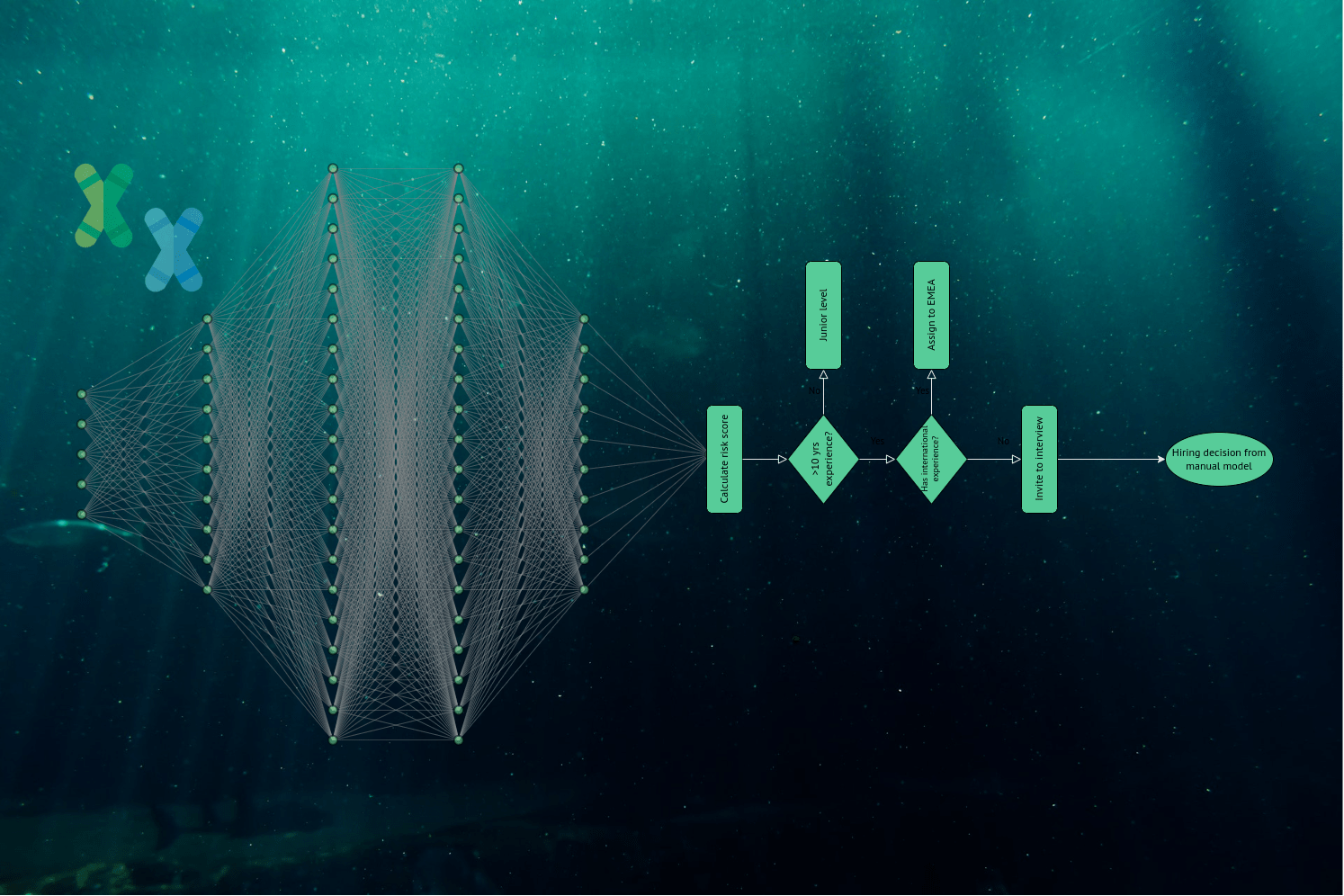

How Hybrid AI can combine the best of symbolic AI and machine learning to predict salaries, clinical trial risk and costs, and enhance chatbots.

Many applications of machine learning in business are complex, but we can achieve a lot by scoring risk on an additive scale from 0 to 10. This is a middle way between complex black box models such as neural networks and traditional human intuition.
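To make the idea of an additive scale concrete, here is a minimal sketch in Python. The risk factors and their point values are hypothetical and chosen only for illustration; a real scorecard would take its factors and weights from domain experts or from a fitted model.

```python
# Hypothetical risk factors and point values, invented for illustration only.
RISK_POINTS = {
    "no_prior_experience": 3,
    "large_budget": 2,
    "tight_deadline": 2,
    "new_technology": 3,
}


def additive_risk_score(flags, points=RISK_POINTS):
    """Add up the points of every factor that is present, clamped to 0-10."""
    total = sum(points[name] for name, present in flags.items() if present)
    return max(0, min(10, total))


# Example project: two of the four hypothetical factors apply.
example = {
    "no_prior_experience": True,
    "large_budget": False,
    "tight_deadline": True,
    "new_technology": False,
}
score = additive_risk_score(example)
print(f"Risk score: {score} out of 10")
```

Because each factor contributes a fixed number of points, anyone can see why a score came out the way it did, which is what separates this approach from a black box model.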

Natural language processing can distinguish customers from salespeople. Is it possible to use natural language processing (NLP) to distinguish between unwanted sales approaches and promising leads for a business’s customer relationship management? If so, this would be a great application of AI in business.

Job postings for data science consultants have increased an amazing 256% since 2013. Why? The need for data collection and processing is everywhere. Nearly all businesses – from large corporations to local companies – need someone to manage and interpret their data. More businesses today also use artificial intelligence and machine learning to improve or automate tasks such as customer personalisation, recommendation engines, churn prediction, cost modelling, and other key business functions. There is also a growing demand for niche areas of data science such as natural language processing and computer vision, which enable industries such as insurance, healthcare, legal and pharma to process huge quantities of data in text or image form.

Some ways that we can model causal effects using machine learning, statistics and econometrics, from a sixth-century religious text to the causal machine learning of 2021 including causal natural language processing.

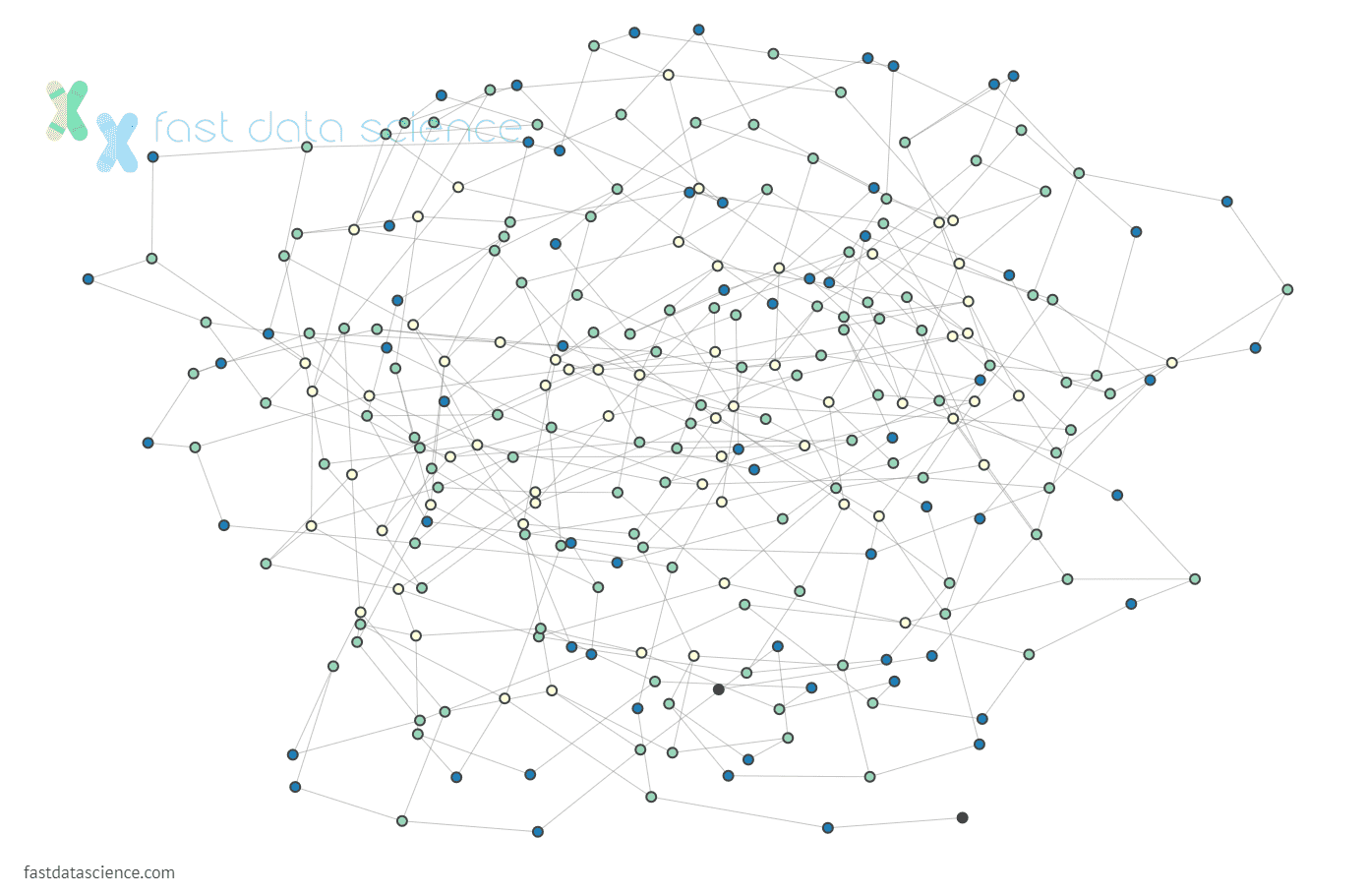

What is unsupervised learning? When we think about acquiring a skill or learning a new subject, most of us picture a teacher passing their knowledge on to us. If you’re teaching a child how to distinguish between different fruits, for example, you might show them various images, identifying one as an apple, another as a pear and so on. When the child later sees these fruits in real life, they can recognise which is which themselves, initially by relying on the labels you provided. This is known as supervised learning, and it is one way in which Artificial Intelligence uses Machine Learning to predict particular outputs, having learned from data points with known outcomes. However, this is not the only way that we, or computers for that matter, learn. Let us introduce you to Unsupervised Learning.
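The difference can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not a production method: the fruit weights are invented, the supervised part is a simple nearest-average classifier, and the unsupervised part is a one-dimensional k-means with two clusters.

```python
# Toy fruit weights in grams; the numbers are made up for illustration.
weights = [150.0, 160.0, 155.0, 210.0, 220.0, 215.0]
labels = ["apple", "apple", "apple", "pear", "pear", "pear"]


def mean(values):
    return sum(values) / len(values)


def fit_supervised(xs, ys):
    """Supervised: labels are given, so average the weight of each labelled group."""
    return {label: mean([x for x, y in zip(xs, ys) if y == label]) for label in set(ys)}


def predict(averages, x):
    """Assign x to the label whose average weight is closest."""
    return min(averages, key=lambda label: abs(averages[label] - x))


def fit_unsupervised(xs, iterations=10):
    """Unsupervised: no labels; find two cluster centres with 1-D k-means."""
    centres = [min(xs), max(xs)]  # start from the two extremes
    for _ in range(iterations):
        groups = [[], []]
        for x in xs:
            nearest = 0 if abs(x - centres[0]) <= abs(x - centres[1]) else 1
            groups[nearest].append(x)
        # Move each centre to the mean of its group (keep it if the group is empty).
        centres = [mean(g) if g else c for g, c in zip(groups, centres)]
    return centres


averages = fit_supervised(weights, labels)
print(predict(averages, 158.0))   # uses the labels provided by the "teacher"
print(fit_unsupervised(weights))  # finds two groups without ever seeing a label
```

The supervised function needs the `labels` list, just as the child needs to be told which fruit is which. The unsupervised function only sees the weights and discovers the two groups on its own, although it cannot name them.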

Explainable AI, or XAI, refers to a collection of ways we can analyse machine learning models. It is the opposite of the so-called ‘black box’, a machine learning model whose decisions can’t be understood or explained. Here’s a short video we have made about explainable AI.

Technical Due Diligence on companies with AI products and technologies: are you thinking about making an investment in a startup that claims to use AI or machine learning, and would you like a completely impartial assessment of their actual AI technology or products?

What we can do for you