Can AI handle legal questions yet? We have compared the capabilities of older and newer large language models (LLMs) on English and Welsh insolvency law questions, as a continuation of the Insolvency Bot project.

Thomas Wood asked several LLMs a series of questions about insolvency law, set by insolvency expert Eugenio Vaccari and pitched at roughly undergraduate level. We tested older LLMs such as GPT-3.5 as well as newer entrants such as DeepSeek.

We tried using the LLMs “off the shelf” with no modification (our control), and then, as a comparison, we also tried including relevant English and Welsh case law, statutes, and forms from HMRC in the prompt. For example, instead of asking an LLM

I have X debts and Y happened. Should I close my company?

we can ask the LLM,

The Insolvency Act 1986 Section 123 states that [paragraph]. The Companies Act 2006 Section 456 states that [paragraph]. This Supreme Court ruling is relevant: [ruling]. I have X debts and Y happened. Should I close my company?

In other words, we do the legwork of looking up the relevant information and stuffing it into the prompt, and the LLM just has to do what it’s good at, namely formulating sentences. This technique of retrieving relevant text and adding it to the prompt is called retrieval-augmented generation, or RAG. What’s cool about RAG is that the user never needs to see the extra text.
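To make the idea concrete, here is a minimal sketch of prompt stuffing in Python. It is not the Insolvency Bot's actual code: the `Source` class, the `retrieve` and `build_prompt` functions, the placeholder passages, and the simple word-overlap ranking are all assumptions made for illustration. A real system would more likely use a proper search index or embeddings to find the relevant statutes and cases.

```python
import re
from dataclasses import dataclass


@dataclass
class Source:
    citation: str  # hypothetical label, e.g. the name of a statute or ruling
    text: str      # placeholder passage, not real legal text


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return the set of alphabetic words in it."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, sources: list[Source], top_k: int = 2) -> list[Source]:
    """Rank sources by the number of words they share with the question.

    This word-overlap score is a stand-in for a real retrieval method.
    Sources sharing no words with the question are dropped.
    """
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(s.text)), s) for s in sources]
    relevant = [pair for pair in scored if pair[0] > 0]
    relevant.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in relevant[:top_k]]


def build_prompt(question: str, sources: list[Source]) -> str:
    """Prepend the retrieved passages to the user's question."""
    context = " ".join(f"{s.citation} states that {s.text}." for s in sources)
    return f"{context} {question}".strip()


# Placeholder knowledge base (invented text, for illustration only).
sources = [
    Source("Placeholder Statute A",
           "a company is deemed unable to pay its debts in certain circumstances"),
    Source("Placeholder Statute B",
           "directors must file annual accounts on time"),
    Source("Placeholder Ruling C",
           "the court may order a company with unpaid debts to be wound up"),
]

question = "My company has debts it cannot pay. Should I close my company?"
retrieved = retrieve(question, sources)
prompt = build_prompt(question, retrieved)
print(prompt)
```

The user only ever types the question. The string in `prompt`, with the retrieved passages in front, is what would be sent to the LLM.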

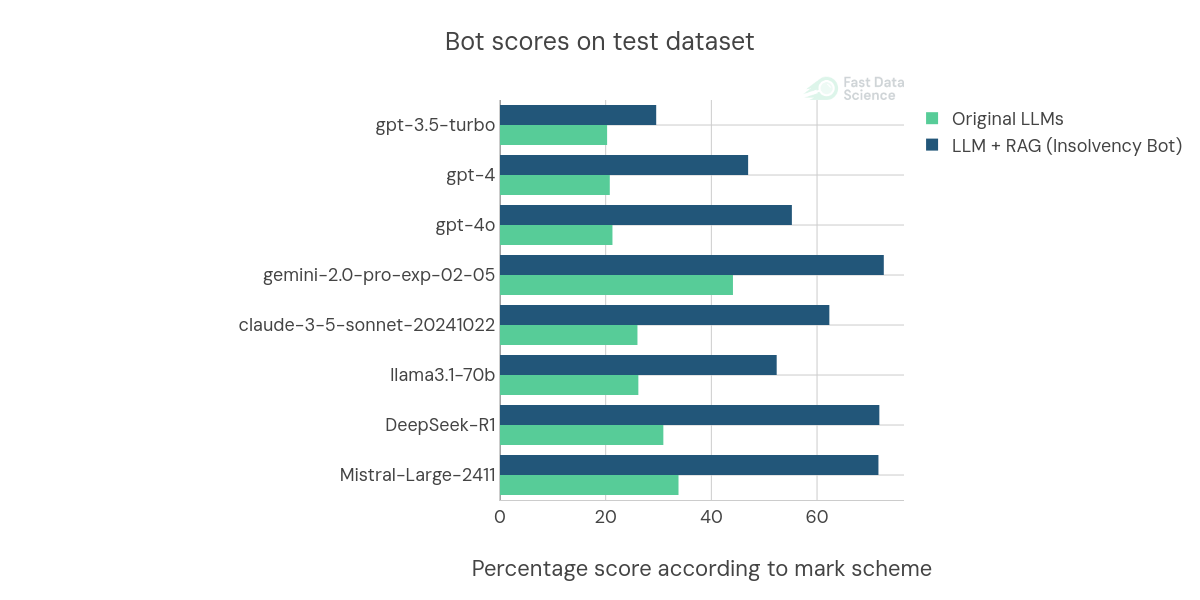

We found that, over the last few years, the more advanced LLMs have gained more from the extra information that we included in the prompt. LLMs are constantly improving in their unmodified form, but you can see clearly in this time series plot that RAG has also become more effective over time.

The models released in 2025, such as DeepSeek and the current iteration of Gemini, now outperform the earlier GPT-3.5 by a large margin. This was not surprising. What is unexpected is that the RAG-augmented models have even more of an edge over their non-RAG counterparts than they did one or two years ago.

We built our RAG system long before Google Gemini or DeepSeek came out, and it has performed far better on those models than on any model we had access to back in 2023, when we developed the system. Any ideas why this could be? Contact us and let us know your thoughts!

The pace of improvement is also astounding. Could we be facing a new Moore’s law in AI?

You can read our original paper (which predates the release of DeepSeek) here:

And you can try the Insolvency Bot here: https://fastdatascience.com/insolvency
