Would you hire or date this person? There’s a catch: she doesn’t exist! I generated the image in a few seconds using StyleGAN, which is why you can see some small artefacts if you look carefully.

Imagine this scenario: you have encountered a profile online of a good-looking person. They might have contacted you about a job, or on a social media site. You might even have swiped right on their face on Tinder.

There is just one little problem. This person may not even exist. The image could have been generated using a machine learning technique called Generative Adversarial Networks, or GANs. GANs were developed in 2014 and have recently experienced a surge in popularity. They have been touted as one of the most groundbreaking ideas in machine learning in the past two decades. GANs are used in art, astronomy, and even video gaming, and are also taking the legal and media world by storm.

Generative Adversarial Networks are able to learn from a set of training data, and generate new synthetic data with the same characteristics as the training set. The best-known and most striking application is image style transfer, where GANs can be used to change the gender or age of a face photo, or re-imagine a painting in the style of Picasso. GANs are not limited to images: they can also generate synthetic audio and video.

Can we also use generative adversarial networks (a kind of generative AI) for natural language processing, for example to write a novel? Read on and find out.

I’ve included links at the end of the article so you can try all the GANs featured yourself.

A generative adversarial network allows you to change parameters and adjust and control the face that you are generating. I generated this series of faces with StyleGAN.

The American Ian Goodfellow and his colleagues invented Generative Adversarial Networks in 2014, building on ideas he had during his PhD at the University of Montréal. They entered the public eye around 2016 following a number of high-profile stories about AI art and its impact on the art world.

How does a generative adversarial network work? In fact, the concept is quite similar to playing a game of ‘truth or lie’ with a friend: you must make up stories, and your friend must guess if you’re telling the truth or not. You can win the game by making up very plausible lies, and your friend can win if they can sniff out the lies correctly.

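A Generative Adversarial Network consists of two separate neural networks:

- the generator, which creates fake data (such as images) that should resemble the training data;
- the discriminator, which is shown a mix of real and generated examples and tries to tell which ones are fake.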

The two networks are trained together but must work against each other, hence the name ‘adversarial’. If the discriminator doesn’t recognise a fake as such, it loses a point. Likewise, the generator loses a point if the discriminator can correctly distinguish the real images from the fake ones.

A clip from the British panel show ‘Would I Lie to You?’, where a contestant must either tell the truth or invent a plausible lie, and the opposing team must guess which it is. Over time the contestants get better at lying convincingly and at distinguishing lies from truth. The contestant telling the story is like the ‘generator’ in a Generative Adversarial Network, and the opposing team is the ‘discriminator’.
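In practice, the points-scoring game is usually written as a pair of loss functions, one for each network. Here is a minimal Python sketch, assuming the discriminator outputs a probability that its input is real. The function names are hypothetical, and the generator loss shown is the ‘non-saturating’ variant suggested in Goodfellow et al.’s 2014 paper, which is one common choice among several.

```python
import math

EPS = 1e-12  # keeps log() away from zero


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """Binary cross-entropy for the discriminator.

    d_real: probabilities the discriminator assigns to real examples being real.
    d_fake: probabilities it assigns to generated (fake) examples being real.
    The loss is low when d_real is close to 1 and d_fake is close to 0.
    """
    real_term = sum(-math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return sum(-math.log(p + EPS) for p in d_fake) / len(d_fake)


# Early in training: the discriminator easily spots the fakes.
print(discriminator_loss([0.9, 0.95], [0.05, 0.1]), generator_loss([0.05, 0.1]))
# Later: the discriminator can only guess 0.5 for everything.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]), generator_loss([0.5, 0.5]))
```

When the discriminator can only guess 0.5 for every input, its loss settles at 2 ln 2 (about 1.39), which is one rough sign that the generator’s fakes have become hard to spot.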

So how does a generative adversarial network learn to generate such realistic fake content?

As with most neural networks, we initialise the weights of the generator and discriminator with random values. So the generator produces only noise, and the discriminator has no clue how to distinguish anything.
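To see why an untrained generator produces only noise, here is a minimal NumPy sketch of a single-layer ‘generator’ whose weights are random, as they would be before training. The 100-dimensional random input and the single layer are assumptions for illustration; real generators such as StyleGAN are much deeper convolutional networks.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

LATENT_DIM = 100  # length of the random input vector (an assumption)
IMAGE_SIDE = 28   # MNIST digits are 28 x 28 pixels

# An untrained single-layer "generator": its parameters are just random numbers.
# Scaling by 1/sqrt(LATENT_DIM) keeps the pre-activation values moderate.
weights = rng.normal(0.0, 1.0 / np.sqrt(LATENT_DIM),
                     size=(LATENT_DIM, IMAGE_SIDE * IMAGE_SIDE))
bias = rng.normal(0.0, 1.0, size=IMAGE_SIDE * IMAGE_SIDE)


def generate(z: np.ndarray) -> np.ndarray:
    """Map a latent vector to a 28 x 28 image with pixel values in (0, 1)."""
    logits = z @ weights + bias
    pixels = 1.0 / (1.0 + np.exp(-logits))  # sigmoid squashes values into (0, 1)
    return pixels.reshape(IMAGE_SIDE, IMAGE_SIDE)


image = generate(rng.normal(size=LATENT_DIM))
print(image.shape)  # (28, 28)
print(image.min(), image.max(), image.std())  # scattered values: noise, not a digit
```

Training is what gradually changes `weights` and `bias` so that the same kind of random input starts to produce digit-like images.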

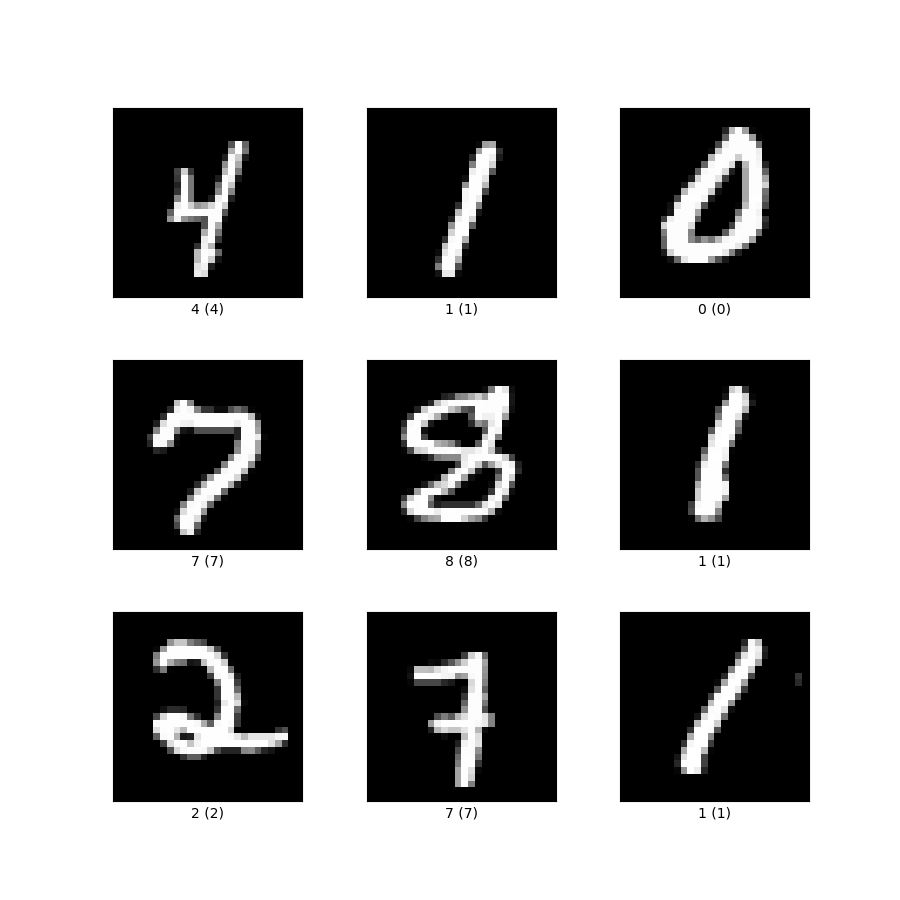

Let us imagine that we want a generative adversarial network to generate handwritten digits, looking like this:

Some examples of handwritten digits from the famous MNIST dataset.

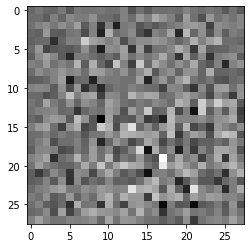

When we start training a generative adversarial network, the generator only outputs pure noise:

The output image of a GAN before training starts

At this stage, it is very easy for the discriminator to distinguish noise from handwritten numbers, because they look nothing alike. So at the start of the “game”, the discriminator is winning.

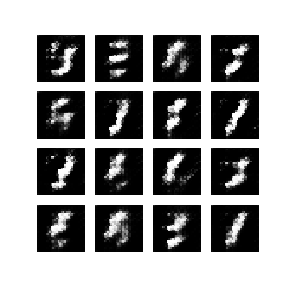

After a few minutes of training, the generator begins to output images that look slightly more like digits:

After a few epochs, a generative adversarial network starts to output more realistic digits.

After a bit longer, the generator’s output becomes indistinguishable from the real thing. The discriminator can’t tell real examples apart from fakes any more.

Generative Adversarial Networks are best known for their ability to generate fake images, such as human faces. The principle is the same as for the handwritten digits above: the generator learns from a set of images, often of celebrity faces, and generates new faces similar to the ones it has learnt from.

A set of faces generated by the generative adversarial network StyleGAN, developed by NVIDIA.

Interestingly, the generated faces tend to be quite attractive. This is partly due to the use of celebrities as a training set, but also because the GAN has a kind of averaging effect on the faces it has learnt from, which removes asymmetries and irregularities.


As well as generating random images, generative adversarial networks can be used to morph a face from one gender to another, change someone’s hairstyle, or transform various elements of a photograph.

For example, I tried running the code to train the generative adversarial network CycleGAN, which is able to convert horses to zebras in photographs and vice versa. After about four hours of training, the network begins to be able to turn a horse into a zebra (the quality isn’t that great here as I didn’t run the training for very long, but if you run CycleGAN for several days you can get a very convincing zebra).

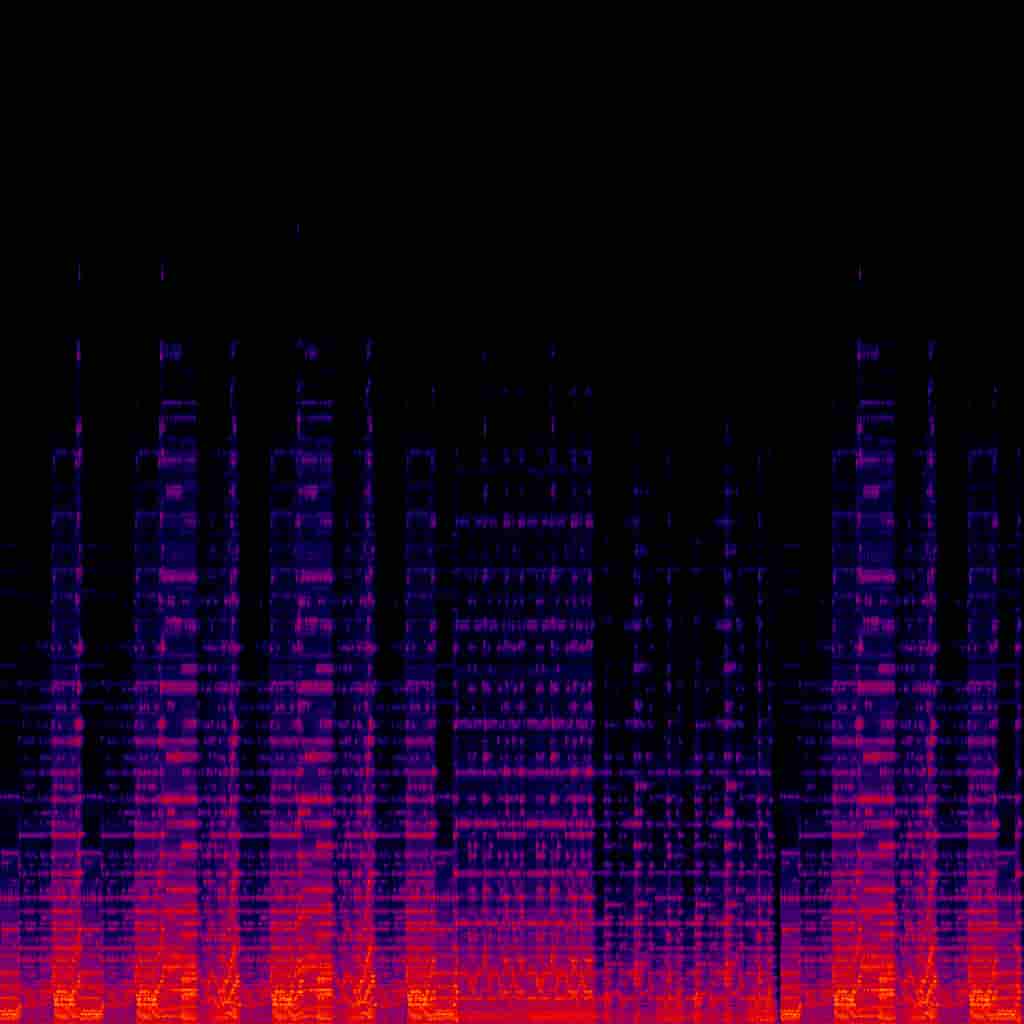
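CycleGAN can learn this without paired horse and zebra photos because it trains two generators, one for each direction, and, alongside the usual adversarial losses, penalises any difference between a photo and its round trip (horse to zebra and back to horse). That extra term is called the cycle-consistency loss. The sketch below computes it with NumPy; the two ‘generators’ are hypothetical stand-in functions rather than trained networks.

```python
import numpy as np


def cycle_consistency_loss(x: np.ndarray, forward, backward) -> float:
    """Mean absolute (L1) difference between x and its round trip backward(forward(x))."""
    reconstructed = backward(forward(x))
    return float(np.mean(np.abs(reconstructed - x)))


# Hypothetical stand-ins for the two generators (not trained networks).
def horse_to_zebra(img: np.ndarray) -> np.ndarray:
    return img * 0.5 + 0.25


def zebra_to_horse_good(img: np.ndarray) -> np.ndarray:
    return (img - 0.25) / 0.5  # exactly undoes horse_to_zebra


def zebra_to_horse_bad(img: np.ndarray) -> np.ndarray:
    return img  # ignores what the original horse looked like


horse = np.random.default_rng(1).random((4, 4))  # a tiny fake "photo"
good = cycle_consistency_loss(horse, horse_to_zebra, zebra_to_horse_good)
bad = cycle_consistency_loss(horse, horse_to_zebra, zebra_to_horse_bad)
print(good)  # close to 0: the round trip recovers the original
print(bad)   # clearly above 0: information was lost on the way back
```

Without this term, a generator could produce any convincing zebra, unrelated to the input horse, and still fool the discriminator.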

It’s possible to convert an audio file into an image by representing it as a spectrogram, where time is on one axis and frequency (pitch) is on the other.

The spectrogram of Beethoven’s Military March
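A spectrogram is typically computed with a short-time Fourier transform: the audio is cut into short overlapping frames, and the strength of each frequency is measured in each frame. A minimal NumPy sketch follows; the frame size, hop length and synthetic 440 Hz test tone are assumptions, and practical systems often add a logarithmic or mel scaling on top.

```python
import numpy as np


def spectrogram(signal: np.ndarray, frame_size: int = 1024, hop: int = 256) -> np.ndarray:
    """Magnitude spectrogram: rows are frequency bins, columns are time frames."""
    window = np.hanning(frame_size)  # taper each frame to reduce spectral leakage
    n_frames = 1 + (len(signal) - frame_size) // hop
    frames = np.stack([signal[i * hop:i * hop + frame_size] * window
                       for i in range(n_frames)])
    # rfft returns frame_size // 2 + 1 frequency bins for each frame
    return np.abs(np.fft.rfft(frames, axis=1)).T


sample_rate = 16_000
t = np.arange(sample_rate) / sample_rate  # one second of audio
tone = np.sin(2 * np.pi * 440.0 * t)      # a pure 440 Hz tone (concert A)

spec = spectrogram(tone)
peak_freq = spec[:, 0].argmax() * sample_rate / 1024  # bin index -> Hz
print(spec.shape, peak_freq)
```

For one second of audio this gives a 513 x 59 array. The brightest row in each column sits within one frequency bin (about 15.6 Hz here) of 440 Hz, so the tone shows up as a horizontal line across the image.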

An alternative method is to treat the music as a MIDI file (the output you would get from playing it on an electronic keyboard), and then transform that to a format that the GAN can handle. Using simple transformations like this, it’s possible to use GANs to generate entirely new pieces of music in the style of a given composer, or to morph speech from one speaker’s voice to another.
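One common way to turn MIDI data into something image-like is a ‘piano roll’: a grid with one row per MIDI pitch (0 to 127) and one column per time step, where a cell is 1 while that note is held. The sketch below assumes the notes have already been read from a MIDI file (a MIDI parsing library would handle that step) and that times are quantised to whole steps; the note list is made up. It illustrates the idea and is not necessarily the representation any particular music GAN uses.

```python
import numpy as np

# Made-up notes: (MIDI pitch, start step, end step). 60 is middle C.
notes = [(60, 0, 4), (64, 4, 8), (67, 8, 12), (72, 12, 16)]


def to_piano_roll(notes, n_steps: int, n_pitches: int = 128) -> np.ndarray:
    """Binary matrix where roll[pitch, step] == 1 while that note is sounding."""
    roll = np.zeros((n_pitches, n_steps), dtype=np.uint8)
    for pitch, start, end in notes:
        roll[pitch, start:end] = 1
    return roll


roll = to_piano_roll(notes, n_steps=16)
print(roll.shape, roll.sum())  # (128, 16) 16
```

The resulting grid can be treated much like a one-channel image, which is what makes image-style GAN architectures applicable.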

The generative adversarial network GANSynth allows us to adjust properties such as the timbre of a piece of music.

Here’s the Prelude from Bach’s Cello Suite No. 1 in G major:

The Prelude from Bach’s Cello Suite No. 1 in G major.

And here is the same piece of music with the timbre transformed by GANSynth:

The Prelude from Bach’s Cello Suite No. 1 in G major with an interpolated timbre, generated by GANSynth.
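The smooth change in timbre comes from moving through the generator’s latent space: GANSynth, like StyleGAN for faces, turns a random input vector into an output, so blending two input vectors gives a gradual transition between the two outputs. A minimal sketch of straight-line interpolation follows; the 64-dimensional latent size is an assumption, and the trained generator the vectors would be fed to is not shown.

```python
import numpy as np


def interpolate(z_a: np.ndarray, z_b: np.ndarray, n_steps: int) -> np.ndarray:
    """Evenly spaced points on the straight line from z_a to z_b (both included)."""
    alphas = np.linspace(0.0, 1.0, n_steps)[:, None]  # one blending weight per row
    return (1.0 - alphas) * z_a + alphas * z_b


rng = np.random.default_rng(42)
z_a, z_b = rng.normal(size=64), rng.normal(size=64)  # two random latent vectors
path = interpolate(z_a, z_b, n_steps=5)
# Each row of `path` would be passed to a trained generator (not shown here).
print(path.shape)  # (5, 64)
```

Spherical interpolation is a common alternative for Gaussian latent vectors, since it keeps the intermediate vectors at a typical distance from the origin.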

After seeing the amazing things that generative adversarial networks can achieve for images, video and audio, I started wondering whether a GAN could write a novel, a news article, or any other piece of text.

I did some digging and found that Ian Goodfellow, the inventor of Generative Adversarial Networks, wrote in a Reddit post back in 2016 that GANs were difficult to apply to natural language processing, because they require real-valued data.

An image, for example, is made up of continuous values: you can make a single pixel a touch lighter or darker, so a GAN can learn to improve its images by making small adjustments. However, there is no analogous continuous value in text. According to Goodfellow,

If you output the word “penguin”, you can’t change that to “penguin + .001” on the next step, because there is no such word as “penguin + .001”. You have to go all the way from “penguin” to “ostrich”.

Since all NLP is based on discrete values like words, characters, or bytes, no one really knows how to apply GANs to NLP yet.

Ian Goodfellow, posting on Reddit in 2016
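Goodfellow’s point can be made concrete with word embeddings, which represent each word as a vector. A small nudge to the vector for ‘penguin’ still snaps back to ‘penguin’, and only a large enough step jumps to a different word, so the small, smooth adjustments a GAN relies on have no effect on the output text. The three-word vocabulary and its 2-D embeddings below are invented for illustration.

```python
import numpy as np

# Invented 2-D "embeddings" for a tiny three-word vocabulary.
vocab = ["penguin", "ostrich", "puffin"]
embeddings = np.array([[0.0, 1.0],
                       [1.0, 0.0],
                       [0.2, 0.9]])


def nearest_word(vector: np.ndarray) -> str:
    """Snap a continuous vector back to the closest word in the vocabulary."""
    distances = np.linalg.norm(embeddings - vector, axis=1)
    return vocab[int(distances.argmin())]


small_step = embeddings[0] + 0.001                 # "penguin + .001"
big_step = embeddings[0] + np.array([0.15, -0.1])  # a much larger move

print(nearest_word(small_step))  # penguin: the small adjustment changes nothing
print(nearest_word(big_step))    # puffin: the output jumps to a different word
```

Because the output only ever changes in jumps, the discriminator’s feedback cannot be passed back to the generator as a smooth gradient through the chosen word.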

However, since Ian Goodfellow wrote this, a number of researchers have succeeded in adapting generative adversarial networks for text.

A Chinese team (Yu et al.) has developed a generative adversarial network called SeqGAN, which they used to generate classical Chinese poems: quatrains made up of four lines of five characters each. They found that independent judges were unable to tell the generated poems from real ones.

They then tried it out on Barack Obama’s speeches and were able to generate some very plausible-sounding texts, such as:

Thank you so much. Please, everybody, be seated. Thank you very much. You’re very kind. Thank you.

I´m pleased in regional activities to speak to your own leadership. I have a preexisting conditions. It is the same thing that will end the right to live on a high-traction of our economy. They faced that hard work that they can do is a source of collapse. This is the reason that their country can explain construction of their own country to advance the crisis with possibility for opportunity and our cooperation and governments that are doing. That’s the fact that we will not be the strength of the American people. And as they won’t support the vast of the consequences of your children and the last year. And that’s why I want to thank Macaria. America can now distract the need to pass the State of China and have had enough to pay their dreams, the next generation of Americans that they did the security of our promise. And as we cannot realize that we can take them.

And if they can can’t ensure our prospects to continue to take a status quo of the international community, we will start investing in a lot of combat brigades. And that’s why a good jobs and people won’t always continue to stand with the nation that allows us to the massive steps to draw strength for the next generation of Americans to the taxpayers. That’s what the future is really man, but so we’re just make sure that there are that all the pressure of the spirit that they lost for all the men and women who settled that our people were seeing new opportunity. And we have an interest in the world.

Now we welcome the campaign as a fundamental training to destroy the principles of the bottom line, and they were seeing their own customers. And that’s why we will not be able to get a claim of their own jobs. It will be a state of the United States of America. The President will help the party to work across our times, and here in the United States of uniform. But their relationship with the United States of America will include faith.

Thank you. God bless you. And May God loss man. Thank you very much. Thank you very much, everybody. Thank you. God bless the United States of America. God bless you. Here’s President.

A generated Barack Obama-esque speech, by Yu et al. (2017)
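Yu et al.’s SeqGAN gets round Goodfellow’s objection by treating the generator as a reinforcement-learning policy: the discriminator’s score for a finished sequence is used as a reward, and the generator is updated with the REINFORCE policy-gradient estimator, which does not need a gradient through the discrete choice of word. The sketch below shows only that core idea for a one-word ‘sentence’. The vocabulary, reward function and learning rate are hypothetical, and the real SeqGAN uses a recurrent generator, a trained discriminator, and Monte Carlo rollouts to score unfinished sentences.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab = ["thank", "you", "America"]
logits = np.zeros(len(vocab))  # a one-word "generator": a softmax over the vocabulary


def softmax(x: np.ndarray) -> np.ndarray:
    e = np.exp(x - x.max())  # subtract the max for numerical stability
    return e / e.sum()


def discriminator_reward(word: str) -> float:
    """Hypothetical stand-in: pretend the discriminator only finds 'America' convincing."""
    return 1.0 if word == "America" else 0.0


learning_rate = 0.1
for _ in range(500):
    probs = softmax(logits)
    choice = rng.choice(len(vocab), p=probs)  # discrete sample: not differentiable
    reward = discriminator_reward(vocab[choice])
    # REINFORCE: the gradient of log p(choice) w.r.t. the logits is one_hot(choice) - probs
    grad_log_prob = -probs
    grad_log_prob[choice] += 1.0
    logits += learning_rate * reward * grad_log_prob

print(dict(zip(vocab, softmax(logits).round(3))))
```

Because the update scales the gradient of the log-probability of the word that was actually sampled by the reward, the discriminator’s verdict can steer the generator even though the sampling step itself is not differentiable.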

GANs have received substantial attention in the mainstream media because of their part in the controversial ‘deepfakes’ phenomenon. Deepfakes are realistic-looking synthetic images or videos of politicians and other public figures in compromising situations. Malicious actors have created highly convincing footage of people doing or saying things they have never actually done or said.

It has always been possible to Photoshop celebrities or politicians onto fake backdrops, or to show them hugging or shaking hands with someone they have never met. The Soviet apparatus was notorious for airbrushing out-of-favour figures from photographs in a futile attempt to rewrite history. Generative adversarial networks take this one step further by making it possible to create apparently real video footage.

A digitally retouched photograph from the Soviet era. Who knows what the authoritarian state could have achieved with generative adversarial networks? Image is in the public domain.

This is an existential threat to the news media, where the credibility of the content is key. How can we know whether a whistle-blower’s hidden-camera clip is real, or an elaborate fake created with a GAN to destroy someone’s reputation? Deepfakes can also be used to lend credibility to fake news articles.

The technology also poses darker problems. GAN-enabled pornography has appeared on the Internet, created using the faces of real celebrities. Celebrities are currently an easy target because there are already many photos of them online, making it easy to train a GAN to generate their faces. Furthermore, public interest in their personal lives is already high, so posting fake videos or photos can be lucrative. However, as the technology advances and the size of the required training set shrinks, malicious actors could make fake clips of nearly anybody, for example to use for blackmail.

Even bona fide uses of generative adversarial networks raise some complicated legal questions. For example, who owns the rights to an image created by a generative adversarial network?

United States copyright law requires a copyrighted work to have a human author. So could the rights belong to the software engineer who wrote the GAN, the person who used it, or the owner of the training data?

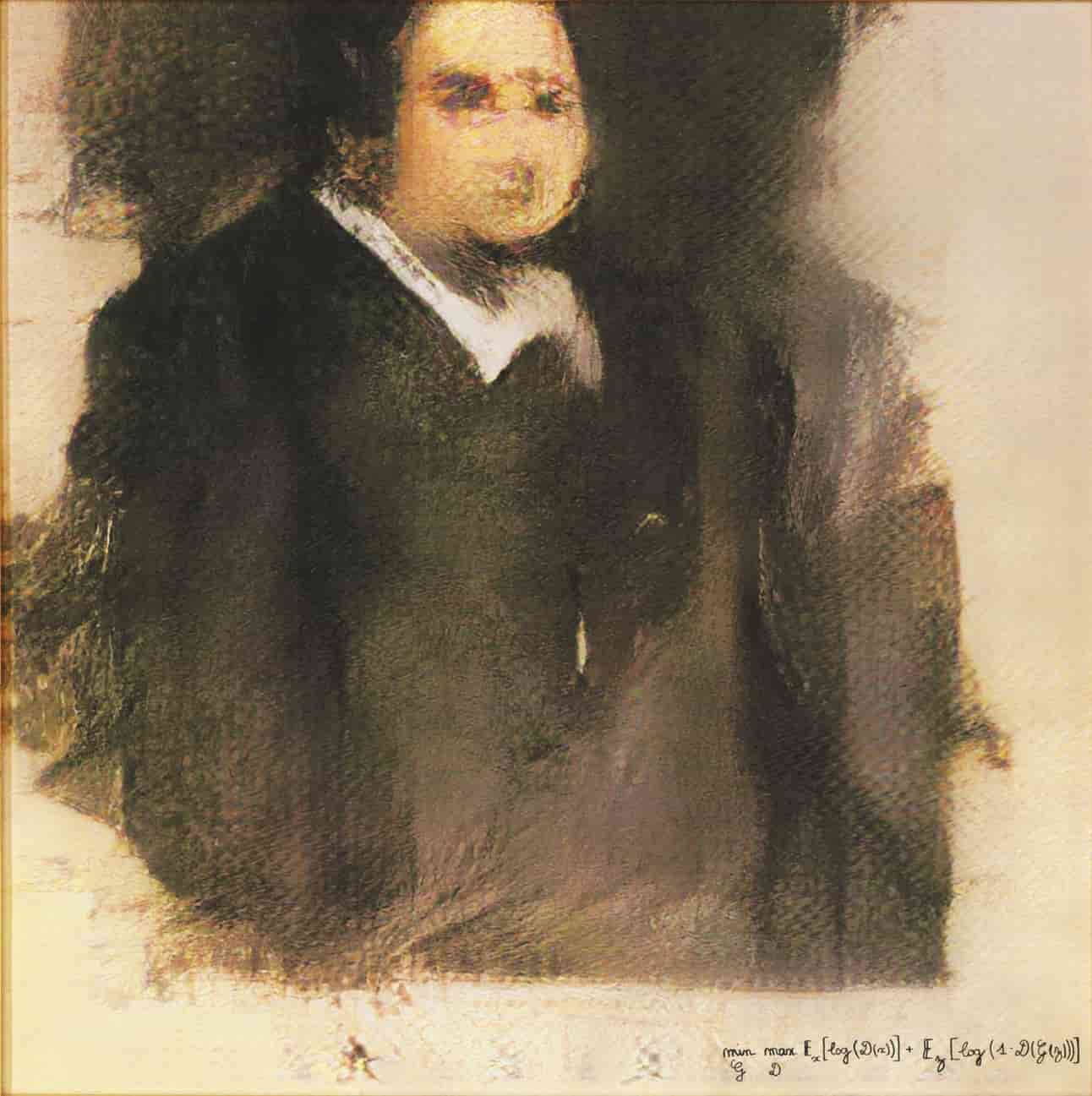

The concept of ‘who is the creator’ was famously put to the test in 2018, when the Parisian arts collective Obvious used a generative adversarial network to create a painting called Edmond de Belamy, which was later printed onto canvas. The artwork sold at Christie’s New York for $432,500. However, it soon emerged that the code used to generate the painting had been written by another AI artist, Robbie Barrat, who was not affiliated with Obvious. Public opinion was divided as to whether the three artists in Obvious could rightfully claim to have created the artwork.

The painting Edmond de Belamy, created with a generative adversarial network by the Parisian collective Obvious and printed on canvas. Image is in the public domain.

Generative Adversarial Networks are a young technology, but in a short time they have had a large impact on the world of deep learning and on society’s relationship with AI. Many of their more exotic applications are only beginning to be explored.

Generative adversarial networks are not yet widely used in industrial data science, but I expect them to spread out from academia in the near future, particularly into computer gaming, animation, and the fashion industry. A Hong Kong-based biotechnology company called Insilico Medicine is beginning to explore GANs for drug discovery. Companies such as NVIDIA are investing heavily in GAN research and in more powerful hardware, so the field looks promising. And of course, we can expect to hear a lot more about GANs and AI art following the impact of Edmond de Belamy.

If you want to run any of the generative adversarial networks that I’ve shown in the article, I’ve included some links here. Only the first one (handwritten digits) will run on a regular laptop; the others need more computing power, so you would need an account with a cloud service such as AWS or Google Colab.
