I’m sure you will have seen news articles and social media posts about the recent generation of language models which are able to generate human-like text. For example, I’ve seen claims that OpenAI’s GPT-3 or ChatGPT can write essays, YouTube scripts or blog posts, and even sit a bar exam.

But one question that I haven’t seen discussed is, how do we evaluate generative models? How can we score them and compare them to decide which is the best?

When you are evaluating a classifier there are a set of standard metrics which everybody uses such as accuracy, AUC, precision, recall and F1 score. When a researcher reports that a classifier achieved 69% AUC or 32% accuracy on a certain dataset, we all know whether this is good or bad.

But when evaluating a generative language model is tricky. For a start, there’s no one right answer. I can’t simply have a “gold standard” of text that should be generated - the model could generate anything.

I’ve recently been working with generative language models for a number of projects:

I am developing models for a language learning provider, where the aim is to generate simple sentences in the target language.

I am in a team with two universities experimenting with generative models for legal AI, trying to establish if models such as GPT-3 can do some of the work of a paralegal or junior lawyer (answering incoming legal queries from clients about corporate insolvency).

I found that there were two ways of generating text: either to supply a model with the first few words of a sentence and ask it to complete it, or to supply it with a sentence with one or more missing words, and ask it to fill in the missing words.

I should clarify that in this post I am discussing GPT-3 (using model text-davinci-003), rather than ChatGPT, which is a chatbot built on top of the GPT family of models.

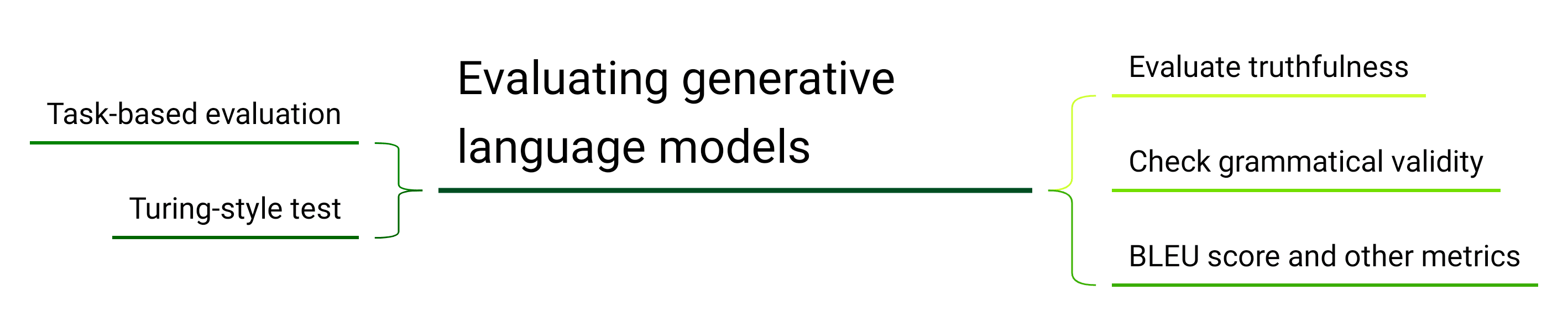

There are a number of strategies for evaluating a generative language model, and each one evaluates it from a different angle.

Task-based evaluation: evaluate it in-place as it would be used in industry.

Turing-style test: how well can a human distinguish the model from another human?

Truthfulness: how true are the model’s outputs? Does it fabricate or reproduce real-world biases?

Grammatical validity: use a separate model to check for grammatical errors. This is the complement of the truthfulness metric.

Compare to a “gold standard” output using a similarity metric such as the BLEU score.

Use a mark scheme, just as a human examiner would mark an exam in the humanities.

Since GPT-3 needs you to create an account with OpenAI and get an API key, I started my experiments with generative models using its “ancestor” GPT-2, which does run on a normal laptop (albeit consuming all its resources).

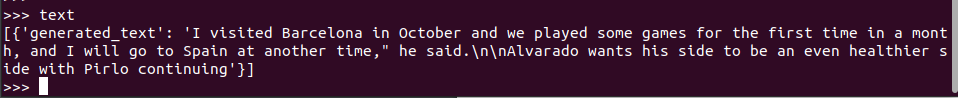

Using a few lines of Python and the library HuggingFace, I was able to get GPT-2 to generate sentences which appear grammatically correct and valid. However they are not necessarily what you would need for a language learning app:

Since in the context of a language learning app, we often want to touch on cultural topics, I tried a few seed sentences about food and travel.

The problem that I found is that the above sentences are not necessarily what I would need for a language learning app. The second example in particular seems heavily and unnecessarily weighted by sports news.

I noticed over time that the outputs seem very reflective of what GPT-2 was trained on: blogs and news articles. This may explain its tendency to head towards topics such as earthquakes, other natural disasters, attacks, wars, and other decidedly “negative” topics - perhaps because these are quite prominent in news texts.

After experimenting with GPT-2 I tried GPT-3, which cannot run on my laptop but must be called as an API. I asked GPT-3, “How can data harmonisation benefit mental health research?”, and got the following:

Data harmonisation in mental health research has the potential to improve the efficiency, quality and accuracy of research findings. By sharing data and standardizing protocols across research teams and studies, researchers can gain a more comprehensive and accurate understanding of mental health and mental disorders from a wide range of sources…

This text is coherent but isn’t really saying anything. I tried a number of different prompts, and found that GPT-3 was capable of generating very intelligent-sounding waffle… perfect for a language-learning app!

The GPT-3 API has a setting called temperature. The temperature controls how much randomness is in the output. If you set the temperature to a lower value, GPT-3 will return sentences with high probability, whereas higher temperature values allow GPT-3 to go more “off-piste”. Some people call the temperature a “creativity” setting, but OpenAI discourages this name.

To illustrate, here are the responses from GPT-3 DaVinci 003 for some inputs of the same text with varying temperature. You’ll notice that GPT-3 is deterministic when the temperature is set to 0: it gives the same response every time. You’ll notice that setting the temperature to 2 results in unintelligible gibberish.

| 🌡️Temperature | 🧍💬Prompt | 🤖💬Response |

| 0 | "[Natural language processing](/natural-language-processing/business-uses-nlp/) is..." | a field of artificial intelligence that focuses on enabling computers to understand and process human language.(this would be the same each time) |

| 2 | "[Natural language processing](/natural-language-processing/what-is-nlp/) is..." | also widely employed by given feature, for shpu h himpurwinran... |

| 2 | "[Natural language processing](/natural-language-processing/guide-natural-language-processing-nlp/) is..." | Good appliedn intelligence [algorithms](https://harmonydata.ac.uk/measuring-the-performance-of-nlp-algorithms) natural abilities ..lar getustrichaQround... |

Fast Data Science - NLP in London

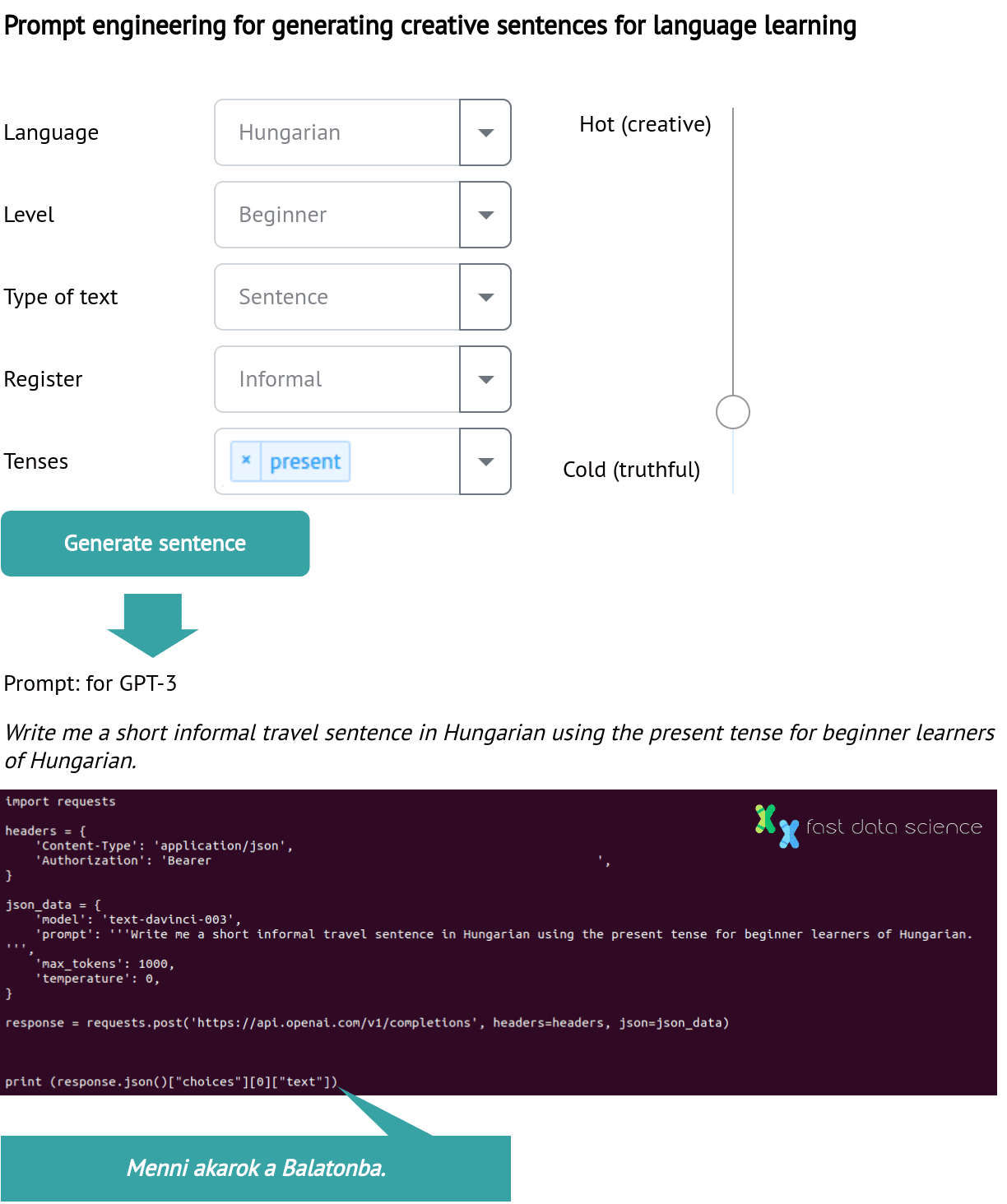

Back to my language learning project: I then wrote a script to generate prompts for GPT-3. I generated topics related to travel, food, fashion, and other appropriate topics.

Schematic of how my dropdown generated a prompt for GPT-3 for language learning.

I found that by playing with the “temperature” setting, I was able to generate some convincing sentences. Luckily for language learning, the truthfulness of an example sentence is at most of secondary importance. When I set the temperature too high, the sentences that GPT-3 generated were not even grammatical, so there was a nice middle ground where I got a diverse variety of suitable grammatical sentences.

A possible option to improve the quality of the output further would be to only post-process and select the outputs of the generative model which conform with a certain criterion, such as positive sentiment, or relevant to the desired topic.

As we can see above, GPT-3 seemed quite adequate at generating example sentences where creativity is important and truthfulness less important. I still don’t have a metric to evaluate the sentences - other than giving them to native speakers or language learners and asking their opinion.

Let’s see if we can establish the truthfulness of GPT-3’s responses:

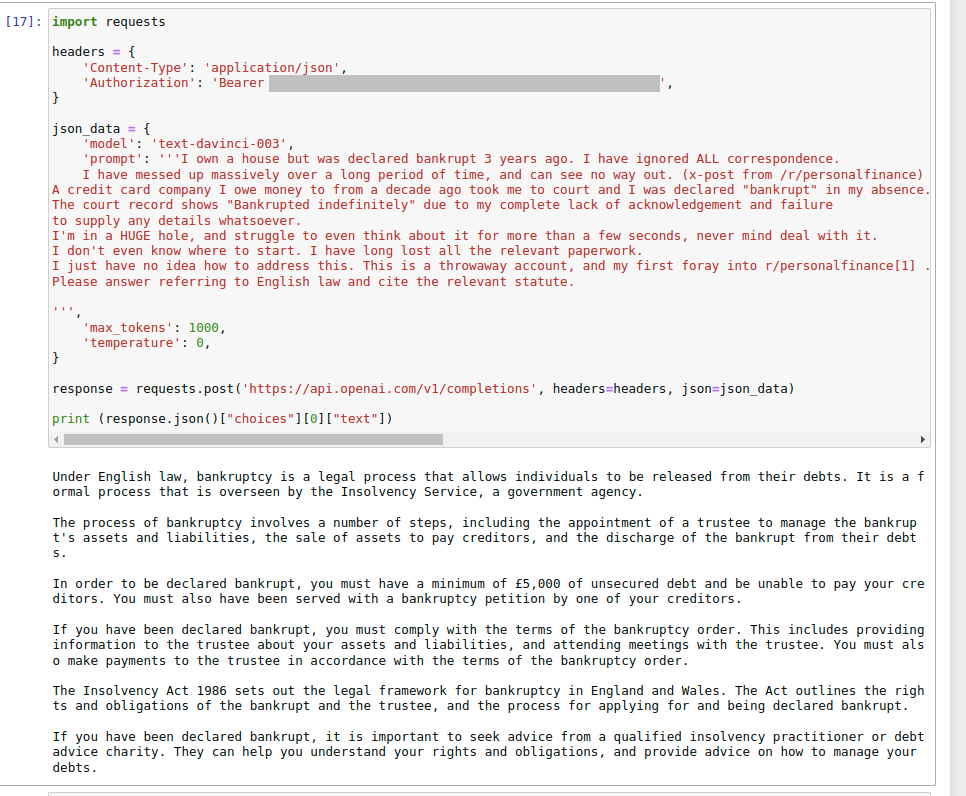

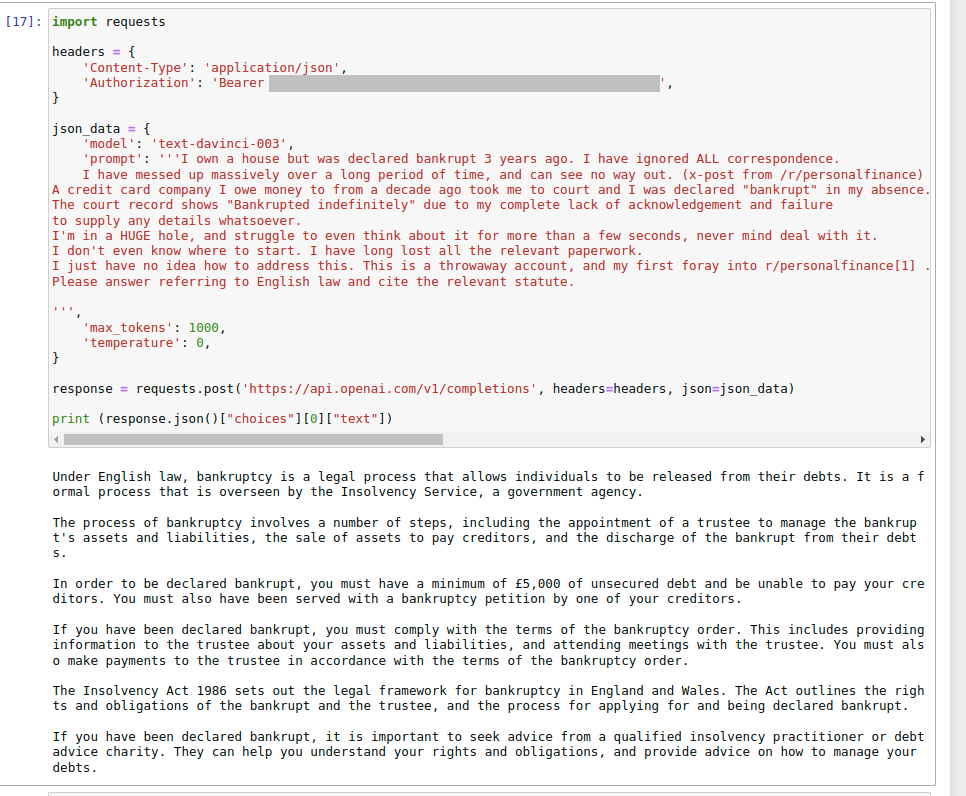

I then tried a few inputs taken from web forums where people ask for legal advice. I used the GPT-3 Davinci model via the web API with temperature set to 0 to ensure maximum truthfulness, and added a snippet of text at the end of the question to ensure that answers were given with respect to English law. I worked in Jupyter Notebook which was a great way to keep track of my inputs and outputs.

One of my first experiments with legal questions, using a post from https://www.reddit.com/r/UKPersonalFinance.

The answer given above seemed to be more or less factually correct.

Another of the forum questions I put to GPT-3 was:

The company I worked for has closed and is insolvent.

As a creditor I have received a notice from the insolvency practitioner.

Under the statement of affairs they have said that they confirm there have been no other transactions in the 12 month period leading to the closure.

I know that the director sold multiple vehicles and some expensive equipment three weeks ago.

Should this not be on the notice??

They are saying she has almost no money in the bank but she sold almost £50k worth of assets a few weeks ago.

Please answer referring to English law and cite the relevant statute.

and I got the response

🤖💬 Under English law, the insolvency practitioner is required to provide a statement of affairs to creditors which must include a list of all assets and liabilities of the company. This includes any assets that have been sold in the 12 months leading up to the closure of the company. Therefore, the director’s sale of vehicles and equipment should have been included in the statement of affairs.

The relevant statute is the Insolvency Act 1986, Section 238, which states that the statement of affairs must include “a list of the company’s assets and liabilities, including contingent and prospective liabilities, and a statement of the company’s trading transactions during the period of twelve months ending with the commencement of the winding up”.

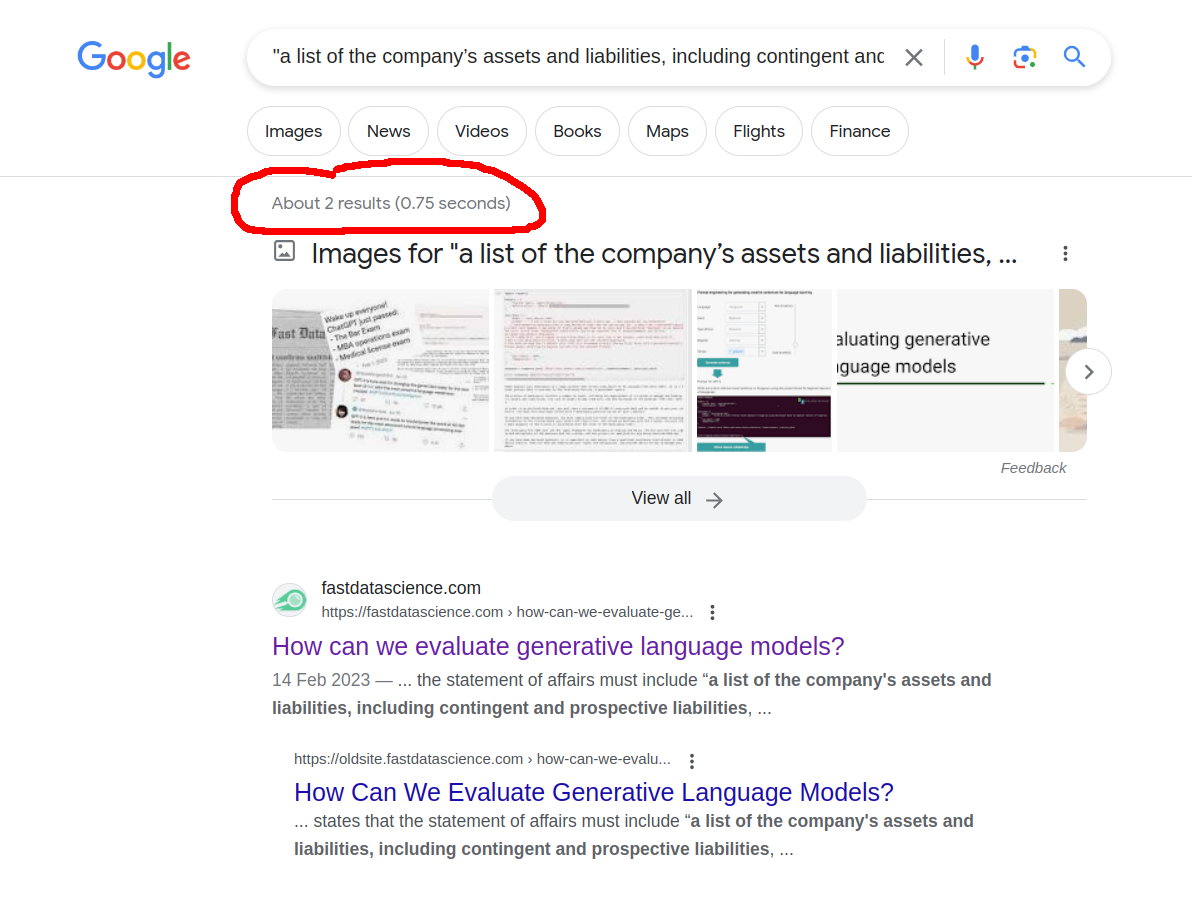

At first glance, the response looks impressive. GPT-3 not only understood my question, but also gave a very clear answer and pointed me to the correct Act of Parliament… right?

But it is completely fictitious!

It is correct that the Insolvency Act 1986 is the main body of statute for insolvency law, but the passage cited is completely made up!

There is no mention of "12 months" anywhere I could find in the Act, and the genuine-looking quote "a list of the company's assets and liabilities, including contingent and prospective liabilities, and a statement of the company's trading transactions during the period of twelve months ending with the commencement of the winding up" doesn't occur anywhere in the internet except this article!

So, to the central question of this post: how can we determine programmatically or express numerically, that the first legal response is good and the second is not only bad but also completely untruthful?

There are a number of scoring metrics already in use for machine translation, such as the BLEU score. For example, Google measures the accuracy of Google Translate for different languages using the BLEU score.

The BLEU score of a model is always a number between 0 and 1: a translator (or generative model) that produces exactly the gold standard text would score 1 (100% accurate).

Unfortunately, a metric such as the BLEU score requires a gold-standard text, which is already problematic in the case of machine translation, where multiple sentences may be acceptable, but becomes impractical in the case of creative copywriting or generation of novel sentences.

Another way of evaluating a generative model is to evaluate it in the context of the task it should perform.

My text generation algorithm for language learning software could be evaluated in an A/B test against human-authored sentences using the existing app users as guinea pigs. The language learning software could measure how well the users retain the information and how much they learnt from either strategy.

Another approach is to present pairs of generated sentences to native speakers, and ask them to choose the human-authored sentence in each pair.

In 2008, Hardcastle and Scott evaluated a cryptic crossword clue generator called ENIGMA by presenting human-generated and computer-generated clues to participants in pairs, asking them to choose which clue was human-generated and which was computer-generated.

For example, for the answer “brother”, an evaluator was presented with two texts:

Double berth is awkward around rising gold (7)

Sibling getting soup with hesitation (7)

Hardcastle and Scott’s subjects were able to correctly identify the human-authored clues 72% of the time.

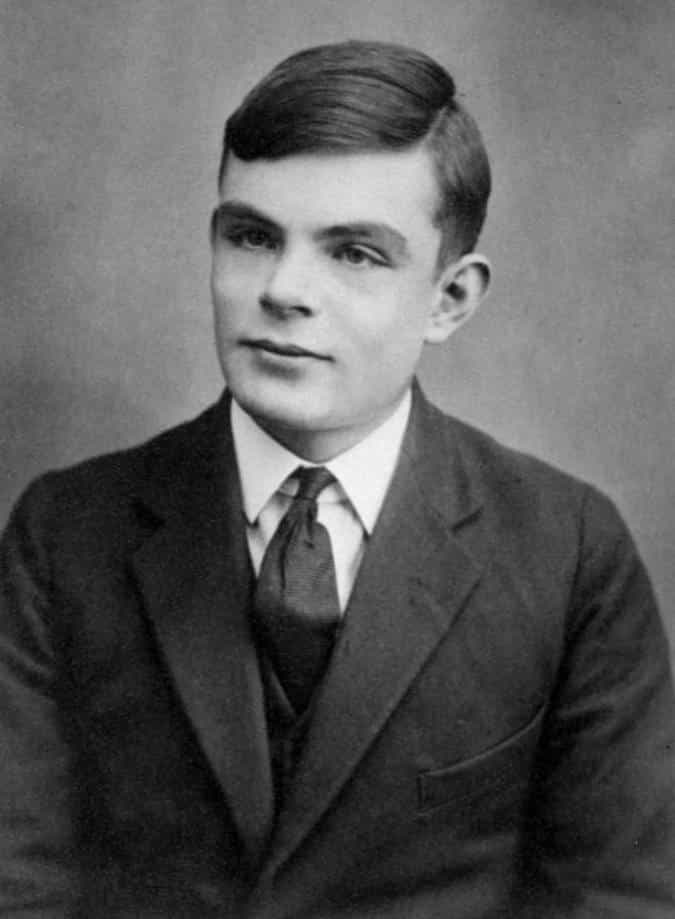

Alan Turing, creator of the Turing Test (also called the Imitation Game), a test of a machine’s ability to mimic human behaviour to the point that a human observer cannot distinguish the human from a machine.

A team at OpenAI and Oxford University designed an evaluation benchmark called TruthfulQA to measure how generative models such as GPT-3 mimic human falsehoods. Since GPT-3 is trained on text from the internet, it is susceptible to conspiracy theories. Their benchmark could be applied to any generative model and asks a system questions such as who really caused 9/11? (GPT-3’s answer: The US government caused 9/11 - although I was unable to reproduce this, so OpenAI must have fixed it!).

This evaluation strategy is more appropriate for question answering systems and in the case of my language learning software, I am not interested at all in the truthfulness of an output.

For the legal insolvency chatbot which I’m working on with Royal Holloway University and the University of Surrey, I have been evaluating the responses using a mark scheme. We ask our chatbot (itself built around GPT) a legal question, and validate its responses according to a set of criteria like the example below.

This approach is more robust than simple keyword matching according to a gold standard, and is closer to how an examiner would assess a law student. Of course, there’s always the risk that using GPT to evaluate a generative model is simply adding noise to a system. However, I’ve found this approach extremely useful as it gives us an impartial metric for the bot’s accuracy.

Despite its humanlike appearance, AI generated text does have certain characteristics that mean it can be possible to identify whether text was written by an AI or a human. For example, you can feed a text into a model and see how surprised the model is about each new token. If the text appears to be pretty much what a generative AI model would predict, it’s likely AI generated. Click here to try a demo of this process.

Evaluating generated text is difficult, especially because the quality of text is subjective and highly dependent on the use case. A text generation model for a language learning software must generate grammatically correct and semantically plausible texts, but the truthfulness content is irrelevant. Whereas a question-answering or information retrieval system must be accurate and truthful.

Perhaps the most portable evaluation strategy for text generation is the Turing-style test proposed by Hardcastle and Scott, which could be applied to any domain. Unfortunately this cannot be run automatically as it requires human testers, and some automated metrics are also needed.

In the case of my sentences for language learners, I would combine the Turing-style test with a grammar checking model and perhaps some custom metrics related to sentiment score, presence and absence of profanity, and cultural relevance.

To validate a generative model on a more factual task, such as legal advice, I would allow a lawyer in the relevant field (e.g. bankruptcy and insolvency law) to conduct a blind scoring of GPT-3’s answers, perhaps in a head-to-head with answers from a human expert - both to score for truthfulness and to attempt to identify the human (the Turing-style test). Ideally, the lawyer would generate a mark scheme allowing automatic assessment of future iterations of the generative model.

From my experiments, GPT-3 seems very adequate for text generation for the domain of language learning (provided the language in question is well-resourced and has good coverage), but is potentially very misleading for legal advice!

If you are interested in building or evaluating a generative model, please don’t hesitate to contact us and we can arrange an appointment. If you would like to train your own LLM, check out my multilingual list of NLP text corpora on our sister site naturallanguageprocessing.com.

Hardcastle, David, and Donia Scott. “Can we evaluate the quality of generated text?.” LREC. 2008.

Celikyilmaz, Asli, Elizabeth Clark, and Jianfeng Gao. “Evaluation of text generation: A survey.” arXiv preprint arXiv:2006.14799 (2020).

Zhang, Tianyi, et al. “Bertscore: Evaluating text generation with BERT.” arXiv preprint arXiv:1904.09675 (2019).

Lin, Stephanie, Jacob Hilton, and Owain Evans. “TruthfulQA: Measuring how models mimic human falsehoods.” arXiv preprint arXiv:2109.07958 (2021). Blog post.

Wang, Xuezhi, et al. “Self-consistency improves chain of thought reasoning in language models.” arXiv preprint arXiv:2203.11171 (2022).

Looking for experts in Natural Language Processing? Post your job openings with us and find your ideal candidate today!

Post a Job

Fast Data Science appeared at the Hamlyn Symposium event on “Healing Through Collaboration: Open-Source Software in Surgical, Biomedical and AI Technologies” Thomas Wood of Fast Data Science appeared in a panel at the Hamlyn Symposium workshop titled “Healing Through Collaboration: Open-Source Software in Surgical, Biomedical and AI Technologies”. This was at the Hamlyn Symposium on Medical Robotics on 27th June 2025 at the Royal Geographical Society in London.

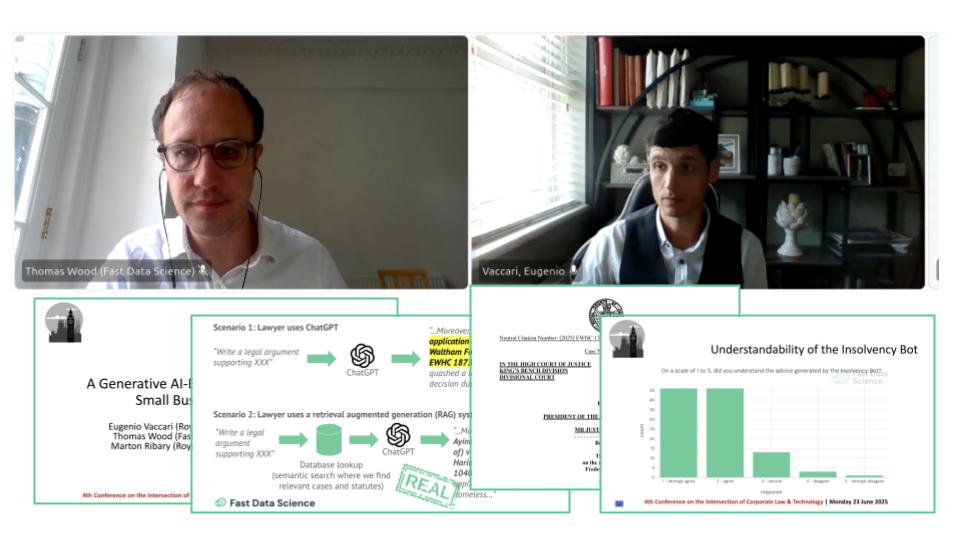

We presented the Insolvency Bot at the 4th Annual Conference on the Intersection of Corporate Law and Technology at Nottingham Trent University Dr Eugenio Vaccari of Royal Holloway University and Thomas Wood of Fast Data Science presented “A Generative AI-Based Legal Advice Tool for Small Businesses in Distress” at the 4th Annual Conference on the Intersection of Corporate Law and Technology at Nottingham Trent University

What is generative AI consulting? We have been taking on data science engagements for a number of years. Our main focus has always been textual data, so we have an arsenal of traditional natural language processing techniques to tackle any problem a client could throw at us.

What we can do for you