AI has seen remarkable advancements in recent years, and one of the most notable is the development of large language models (LLMs). LLM and NLP (natural language processing) are two terms often used together because LLMs have revolutionised the field of NLP: machines can now interpret and generate text at an almost human-like level and at unprecedented scale.

At a basic level, LLMs are sophisticated AI systems trained on large collections of text. In a little more depth, a large language model is a type of AI algorithm which utilises deep learning (DL) methods along with huge data sets to understand, summarise, generate, and predict new content. That is its most basic function and what it was designed to do.

We should also understand the term ‘generative AI’, as it is closely related to LLMs: the latter are, in fact, a kind of generative AI specifically designed to generate text-based content.

Over many centuries, human beings have developed different languages to facilitate communication. If we stop to think for a moment, language is at the heart of all kinds of human and technological communication: it provides the words, semantics, and grammar we need to relay ideas and concepts.

Now, in the world of AI, a language model serves a rather similar purpose: it provides the basis for communicating and generating new concepts.

The very first AI language programs can be traced back to the early days of AI, when researchers and scientists were just starting to experiment with it. ELIZA, which debuted at MIT in 1966, is one of the earliest examples, although it relied on hand-written pattern-matching rules rather than on training. Modern language models, by contrast, must all be trained on a set of data, so that they can infer relationships between words and phrases and then generate new content based on what they have learned.

You’ll often hear data scientists mention LLMs and NLP in the same sentence, because language models are widely used in natural language processing applications where a user enters a query in natural language and receives a generated result.

Large language models (LLMs) are a natural evolution of the language model concept in AI, significantly expanding the amount of data used for training and inference. In turn, this has provided monumental boosts in the capabilities of the underlying AI model. There isn’t a universally agreed figure for how large the training data set should be, although an LLM typically has at least one billion parameters. Parameters, in this case, is a machine learning (ML) term for the variables a model learns during training and then uses to infer new content.
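
To make the idea of a parameter concrete, here is a minimal sketch that counts the learnable weights and biases in a small fully connected network. The layer sizes and the `count_parameters` helper are illustrative assumptions and do not describe any particular LLM.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected network.

    layer_sizes lists the width of each layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out  # one weight per input-output connection
        total += n_out         # one bias per output unit
    return total


# Hypothetical toy network: 512 inputs, 2048 hidden units, 512 outputs.
toy_network = [512, 2048, 512]
print(count_parameters(toy_network))
```

Even this toy network has roughly two million parameters, which gives a sense of how quickly the count grows as layers are widened and stacked.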

By now, we understand that LLM and NLP often go hand in hand, since an LLM, which is a type of ML or AI model, can perform a range of NLP tasks: for example, answering questions in a conversational manner, generating and classifying text, or translating text between two languages. We also know that the “large” in LLM refers to the number of values, or parameters, that the language model can adjust autonomously as it learns. The most successful LLMs in use today have hundreds of billions, and in some cases reportedly trillions, of parameters.

We know by now that LLMs need to be trained on huge amounts of data through self-supervised learning, in which the model learns to predict the next token in a sentence from the surrounding context. This process is repeated many times until the model reaches an acceptable level of accuracy.
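
As a rough illustration of next-token prediction, the sketch below counts which word follows which in a tiny corpus and predicts the most frequent follower. This bigram counter is a drastic simplification chosen for readability: real LLMs learn neural network weights rather than counts. The corpus and function names are hypothetical. The point it shares with LLM training is that the "labels" come from the text itself, which is what makes the learning self-supervised.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count how often each token follows each other token."""
    tokens = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        following[current][nxt] += 1
    return following


def predict_next(model, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = model.get(token.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text; a real corpus would be vastly larger.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)
print(predict_next(model, "the"))
```

In this corpus "the" is followed by "cat" more often than by any other word, so that is the prediction.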

Once the LLM has been successfully trained, it can be fine-tuned for many different NLP tasks, which is why LLM and NLP are so often mentioned together. These tasks include:

Some of the most popular large language models and NLP today include:

Natural language processing

Large language models are built on neural networks (NNs), computing systems loosely inspired by the human brain. These networks consist of layers of interconnected nodes, much like the neurons in our brains.

Beyond handling human language in AI applications, LLMs can also be trained to perform many other tasks, such as writing software code or understanding protein structures.

As we briefly discussed at the opening of the article, for an LLM to be useful in NLP it first has to be pre-trained and then fine-tuned, so that it can address everything from question answering and document summarisation to text generation and text classification. LLMs’ problem-solving capabilities are already being applied in fields like entertainment, finance and healthcare, where they serve many different NLP applications such as AI assistants, chatbots, and translation.

In the context of these applications, it is worth returning to an earlier point: LLMs work with billions of parameters in most cases. These may be seen as memories that the model collects as it learns during training. An easy way to visualise this is to view the parameters as the model’s central knowledge bank.

An LLM is built on a transformer model, which works by receiving an input, encoding it, and then decoding it to produce an output prediction. However, an LLM can only turn text input into a useful output prediction once it has received appropriate training. Training allows it to fulfil general functions and also to be fine-tuned for specific tasks, some of which we have already discussed.

On a quick side note, the transformer is the most commonly used architecture for LLMs. In its original form it consists of an encoder and a decoder, although many LLMs use only one of the two. It processes data by ‘tokenising’ the input and then performing mathematical computations in parallel to uncover relationships between the tokens. This allows the computer to identify the kinds of patterns a person would look for if given the same query.
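
The tokenising step can be sketched as follows. This is a simplified word-level tokeniser written for illustration only; production LLMs generally use subword tokenisers, and the function names here are assumptions rather than any library’s API.

```python
import re


def tokenise(text):
    """Split text into lowercase word and punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocabulary(tokens):
    """Assign each distinct token an integer ID in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab


def encode(tokens, vocab):
    """Map tokens to their integer IDs."""
    return [vocab[token] for token in tokens]


tokens = tokenise("This burger is so delicious, so delicious!")
vocab = build_vocabulary(tokens)
print(tokens)
print(encode(tokens, vocab))
```

The model never sees raw text, only the integer IDs, which is why repeated words such as "so" and "delicious" map to the same numbers each time they appear.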

Transformer models have self-attention mechanisms, which enable them to learn more quickly than traditional models such as long short-term memory (LSTM) networks. Self-attention is what allows a transformer model to weigh different parts of the sequence, or the complete context of a sentence, in order to generate accurate predictions.
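
A minimal sketch of the self-attention idea, in plain Python, is shown below. It assumes each token’s embedding acts as its own query, key and value; real transformers apply learned projection matrices and several attention heads, which are omitted here. The embedding values are made up for illustration.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Similarity of this token to every token in the sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # Weighted average of all token vectors.
        mixed = [sum(w * value[i] for w, value in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional embeddings for three tokens.
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(embeddings)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sequence. The first two embeddings are similar, so they give each other more weight than they give the third.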

Back to how large language models work:

The first phase is training. LLMs are pre-trained on large textual datasets from sources like GitHub, Wikipedia, and similar sites. These datasets can contain trillions of words, and their quality has a direct impact on how well the language model performs. At this point, the LLM engages in unsupervised learning: it processes the datasets fed into it without any specific instructions. During this stage, the model learns the meaning of words and the relationships between them. It also learns to distinguish words according to context; for example, whether “right” means “correct” or the opposite of “left”.

The next phase is fine-tuning. If an LLM must perform a specific task (translation, for example), then it needs to be fine-tuned for that activity. Fine-tuning optimises the model’s performance on the given task.

In the final phase, prompt-tuning fulfils a similar function to fine-tuning: it steers a large language model towards a specific activity through few-shot or zero-shot prompting. A prompt is simply an instruction fed into an LLM. Few-shot prompting teaches the LLM to predict outputs by way of examples, whereas zero-shot prompting states the task without any examples.

Let’s look at a sentiment analysis exercise and what a few-shot prompt would look like:

Review by customer: This burger is so delicious

Customer sentiment: Positive

Review by customer: This burger was awful

Customer sentiment: Negative

Here, the LLM can infer the semantic meaning of “awful” because a contrasting example was already provided, and so the customer sentiment in the second example comes out as “Negative”.

A zero-shot prompt, however, does not teach the LLM how to respond to inputs via examples. Instead, it formulates the question as “The sentiment in ‘This burger was awful’ is…”, which clearly indicates the task the LLM should perform but does not provide any worked examples.
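
The two prompt styles can be assembled programmatically. The sketch below only builds the prompt strings and does not call any model; the helper names and the extra review about fries are hypothetical additions for illustration.

```python
def build_few_shot_prompt(examples, new_review):
    """Format labelled examples followed by the review to classify."""
    lines = []
    for review, sentiment in examples:
        lines.append(f"Review by customer: {review}")
        lines.append(f"Customer sentiment: {sentiment}")
    lines.append(f"Review by customer: {new_review}")
    lines.append("Customer sentiment:")
    return "\n".join(lines)


def build_zero_shot_prompt(review):
    """State the task directly, with no worked examples."""
    return f"The sentiment in '{review}' is"


examples = [
    ("This burger is so delicious", "Positive"),
    ("This burger was awful", "Negative"),
]
few_shot = build_few_shot_prompt(examples, "The fries were cold")
zero_shot = build_zero_shot_prompt("This burger was awful")
print(few_shot)
print(zero_shot)
```

The few-shot prompt ends with an unfinished "Customer sentiment:" line, leaving the model to complete the pattern set by the examples. The zero-shot prompt relies entirely on the wording of the task.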

We’ve already discussed some common applications of LLMs in NLP. Here are a few more:

Sentiment analysis – Since NLP tasks are a natural application of large language models, LLMs allow companies to analyse customer sentiment across large volumes of textual data.

Code generation – Like text generation, code generation is an application of generative AI. Because LLMs can learn patterns, they can also generate code, from short snippets to larger programs.

Text generation – LLMs and NLP underpin generative AI tools such as ChatGPT, which can generate text-based outputs when prompted; for example, “Write me a video script about pollution on Earth.”

Information retrieval – Think of Google or Bing: when a search feature in these engines answers you conversationally, it is drawing on a large language model and NLP to produce information in response to your query. The LLM retrieves the required information, then summarises it and communicates the answer in a conversational style.

Chatbots & conversational AI – LLMs power customer service chatbots, also known as conversational AI, which engage customers, interpret their queries or responses and, in turn, offer appropriate answers.

Content summarisation – LLMs can summarise long articles, research reports, corporate documentation, news stories, and customer histories into concise, focused texts whose length can be tailored to the output format.

Language translation – Translation with large language models and NLP gives wider reach to organisations looking to transcend language and geographical boundaries, thanks to LLMs’ fluent translations and multilingual capabilities.

As the above use cases show, LLMs can complete sentences, answer questions, and summarise text with ease and often with high accuracy. With such a broad range of uses, LLM applications can be found across multiple fields, including:

Legal – Legal staff, paralegals and solicitors are using LLMs for everything from searching massive textual datasets to generating legalese, in order to uncover crucial evidence and process cases faster.

Marketing – Marketing teams of all sizes use LLMs to perform sentiment analysis, quickly generate campaign ideas or text to be used as pitching examples, and a lot more.

Banking & finance – LLMs and NLP have been supporting credit card companies, banks, financial institutions, and fintechs in detecting fraud early and mitigating risks.

Customer service – Perhaps one of the most common examples of LLMs and NLP at work is customer service, where chatbots and conversational AI are used across multiple industries.

Healthcare & science – Large language models can be trained to understand molecules, proteins, DNA, and RNA, which means they can assist in finding cures for illnesses, developing vaccines, and improving medicines used in preventive care. Additionally, LLMs are also being used in the form of medical chatbots to perform basic diagnoses or patient intakes.

Technology – LLMs are used throughout the technology spectrum, from enabling search engines to provide more relevant responses to assisting developers in writing code.

LLMs and NLP have impacted nearly every industry, from human resources and finance to insurance, healthcare and beyond, by automating customer self-service, improving response times on a growing number of tasks, enhancing query routing, and supporting intelligent context gathering for a wide array of business applications.

While large language models may lead us to believe that they understand meaning and can therefore respond to inputs accurately, they are still a technological tool. As such, the use of LLMs involves several challenges, including:

Consent – LLMs are trained on datasets containing billions to trillions of words. Some of this data may not have been obtained consensually. When data is scraped from the internet to train an LLM, copyright licences may be ignored, published content may be copied or plagiarised, and proprietary content may be repurposed without permission from the original artists or owners. When the LLM then produces its output, there is typically no way of tracking data lineage, meaning that no credit is given where it’s due and users may be exposed to copyright infringement issues and even lawsuits.

Getty Images, for example, filed a lawsuit against Stability AI, the developer of a generative image model, alleging violation of its intellectual property rights. Similar concerns arise when models scrape personal data, such as the names of photographers or subjects from photo descriptions, which can infringe on privacy.

Deployment – Deploying LLMs for NLP requires extensive technical expertise, including choosing the correct transformer model, deep learning model, predictive AI model, and distributed software and hardware. Data scientists and researchers with extensive experience and technical know-how in these areas are needed to apply large language models and NLP properly.

Scaling – Scaling and maintaining LLMs can be a difficult, time-consuming, and resource-intensive task. Again, the right technical expertise must be sought from data scientists and LLM and NLP consultants to reap the full benefits.

Bias – The data used for training an LLM affects the outputs the model produces. If, for example, the data represents only a single demographic or lacks diversity, the outputs produced by the LLM will also lack diversity.

Security – The security risks involved with LLMs that are not properly managed and monitored should never be overlooked. LLMs may inadvertently leak individuals’ private information, and they can be misused to produce spam and phishing content. Users with malicious intent may also manipulate a model to reflect their own ideologies or biases, contributing to the spread of misinformation. The repercussions can be serious and far-reaching.

Hallucinations – A hallucination is when an LLM produces a false output, or an output that is not in line with the user’s intent or input. For instance, the LLM might claim that it is human and has emotions, or that it has fallen in love with the user. The underlying issue is that LLMs work by predicting the next plausible word or phrase, which means they do not wholly interpret human meaning. This confused or false output is called a “hallucination”.

With such a wide range of applications, LLMs are highly beneficial for problem-solving as they can provide information in a clear and conversational style, making it really easy for users to understand the response or output. In addition:

They can be used for a broad set of applications – From sentiment analysis and sentence completion to language translation, mathematical equations, question answering, and more.

They are constantly improving – The performance of LLMs keeps improving as they are built with more parameters and trained on more data. So, the more a model is trained, the better it gets at performing its specific task. Not only that, but LLMs can exhibit in-context learning: once a large language model has been pre-trained, few-shot prompting allows the model to pick up a task from the prompt without any change to its parameters. In that sense, an LLM can keep adapting to new tasks after training.

They are very fast learners – As in-context learning demonstrates, LLMs can pick up new tasks very quickly because they do not need additional resources, weights, or parameters to do so. They are fast in the sense that they do not require many examples to do their job effectively.

ChatGPT is merely one example of how LLMs and NLP have shaped the way organisations function across multiple industries. As large language models become more actively integrated across organisations, speculation and heated debate about what the future holds as their applications are more widely adopted have also increased.

Large language models are growing at a rapid pace and improving their command of natural language processing and predictive AI. In the right hands, LLMs and NLP can potentially boost business productivity and process efficiency on a massive scale, all the while lowering costs and overheads. To discuss how large language models and NLP can change the way you do business, consult us now.

Tags

Large language models and NLP; LLM NLP; LLM and NLP; large language models

Dive into the world of Natural Language Processing! Explore cutting-edge NLP roles that match your skills and passions.

Explore NLP Jobs

Fast Data Science appeared at the Hamlyn Symposium event on “Healing Through Collaboration: Open-Source Software in Surgical, Biomedical and AI Technologies” Thomas Wood of Fast Data Science appeared in a panel at the Hamlyn Symposium workshop titled “Healing Through Collaboration: Open-Source Software in Surgical, Biomedical and AI Technologies”. This was at the Hamlyn Symposium on Medical Robotics on 27th June 2025 at the Royal Geographical Society in London.

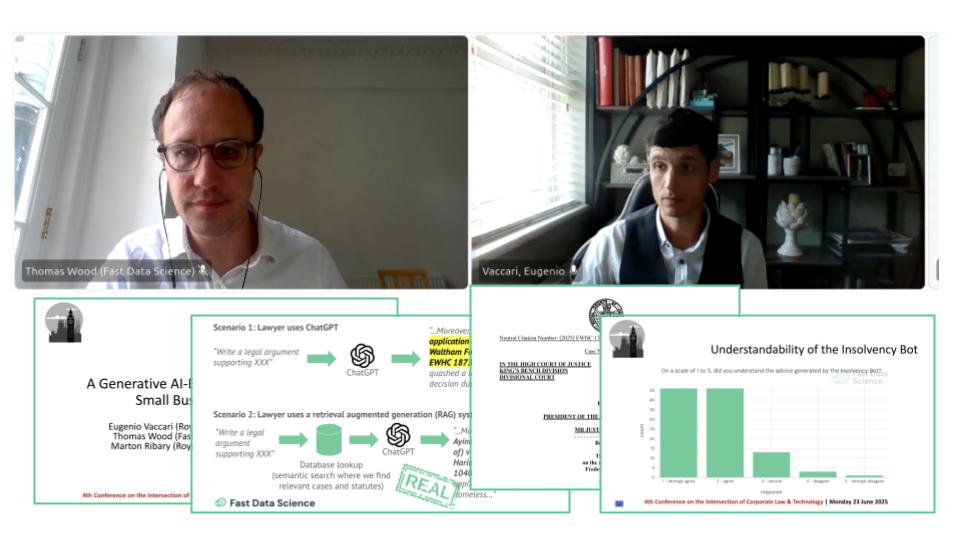

We presented the Insolvency Bot at the 4th Annual Conference on the Intersection of Corporate Law and Technology at Nottingham Trent University Dr Eugenio Vaccari of Royal Holloway University and Thomas Wood of Fast Data Science presented “A Generative AI-Based Legal Advice Tool for Small Businesses in Distress” at the 4th Annual Conference on the Intersection of Corporate Law and Technology at Nottingham Trent University

What is generative AI consulting? We have been taking on data science engagements for a number of years. Our main focus has always been textual data, so we have an arsenal of traditional natural language processing techniques to tackle any problem a client could throw at us.

What we can do for you