Natural language processing (NLP) is revolutionising how businesses interact with information. But large language models (LLMs), also known as generative models or GenAI, can struggle with factual accuracy and with keeping up with real-time information.

If ChatGPT was trained on data until a certain year, how can it answer questions about events that happened after the cutoff point?

Retrieval-augmented generation (RAG) allows LLMs such as ChatGPT to stay up to date in their responses.

Natural language processing

Remember the old mobile phones that completed a sentence by taking the previous words into account? At its core, that is what an LLM does.

An LLM is a super-powered autocomplete. It excels at understanding language patterns but can lack domain-specific knowledge. LLMs are notorious for hallucinating when they don’t know the answer.
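To make the autocomplete analogy concrete, here is a minimal sketch of a phone-style word predictor. It only counts which word most often follows the previous word in a tiny made-up training text; a real LLM uses a neural network and a much longer context. The function names and the sample text are illustrative assumptions, not part of any library.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    followers = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def suggest_next(followers, word):
    """Return the most frequent follower of `word`, or None if the word is unseen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Made-up training text, for illustration only.
corpus = "the court made a winding up order and the court appointed a liquidator"
model = train_bigrams(corpus)

print(suggest_next(model, "winding"))     # a word seen in training
print(suggest_next(model, "bankruptcy"))  # a word never seen in training
```

The second call returns `None` because the word never appeared in the training text. This toy model can admit that it has nothing to suggest, whereas an LLM will usually produce fluent text regardless, which is where hallucinations come from.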

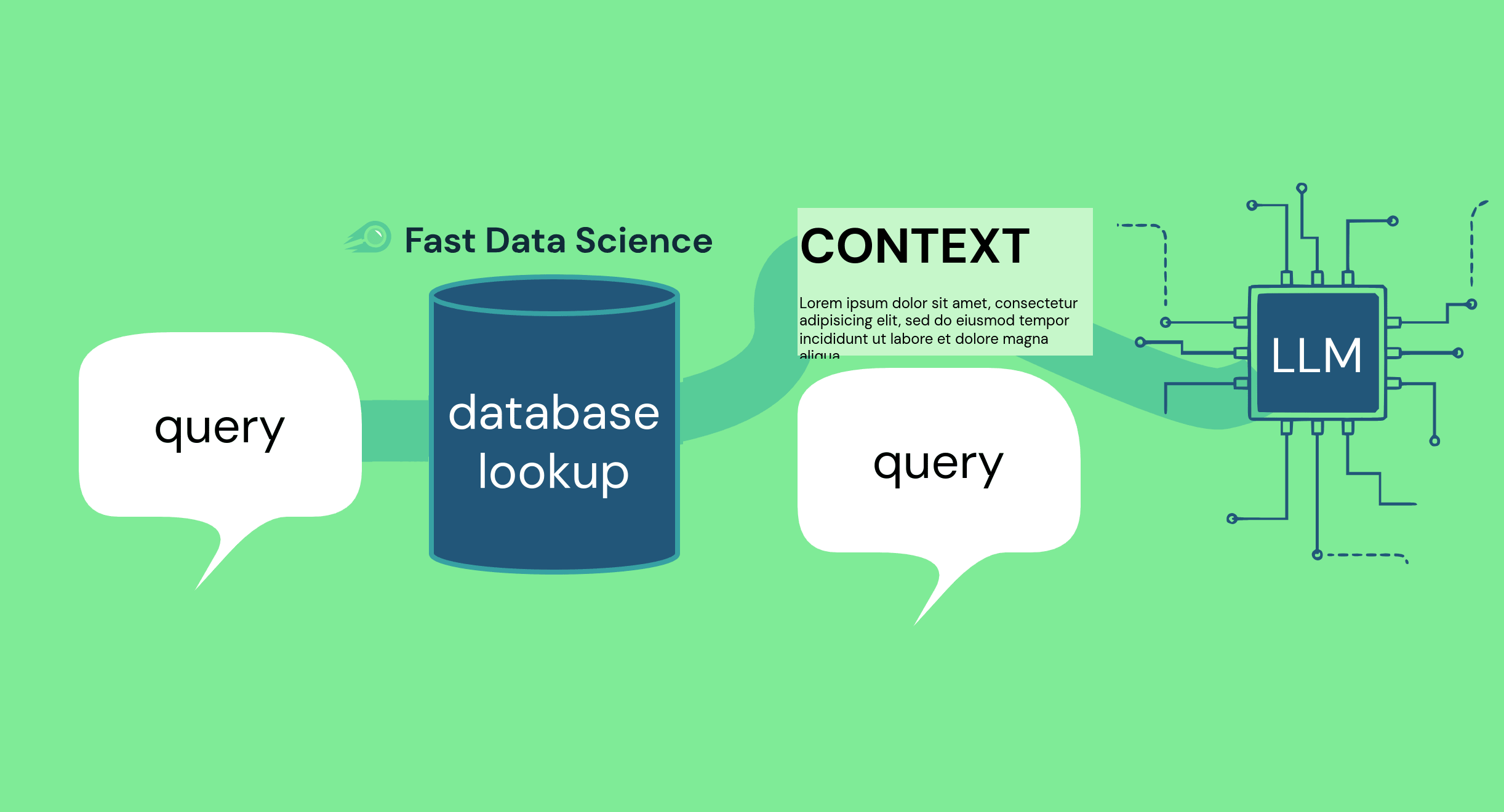

We can mitigate hallucinations and inaccuracies by taking the user's prompt, retrieving information from an external knowledge base that we think the LLM should know, and prepending or appending that information to the prompt before we pass it to the LLM. For example, if the user has a query about English insolvency law, we can send the user’s original question together with some relevant information retrieved from a database.

Modifying the prompt sent to an LLM is also called prompt engineering.

With RAG, we augment the request by retrieving relevant documents from the knowledge base and feeding them to the LLM along with the original prompt. This helps the LLM generate more accurate and up-to-date responses.
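The retrieve-then-augment flow can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the knowledge base is a short list of placeholder passages, retrieval is plain word overlap (many production RAG systems use vector similarity search instead), and the final call to the LLM is left out. The names `KNOWLEDGE_BASE`, `retrieve` and `build_prompt` are hypothetical.

```python
import re

# Placeholder passages standing in for a real knowledge base (assumption).
KNOWLEDGE_BASE = [
    "Placeholder passage 1: text about winding up a company would go here.",
    "Placeholder passage 2: text about bankruptcy of an individual would go here.",
    "Placeholder passage 3: text about employment contracts would go here.",
]


def tokenize(text):
    """Lowercase the text and return its set of alphabetic words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Return up to `top_k` documents that share the most words with the question."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(doc)), doc) for doc in documents]
    scored = [(score, doc) for score, doc in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(question, documents):
    """Prepend the retrieved passages to the user's original question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        "Answer the question using the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


prompt = build_prompt("What happens when a company is winding up?", KNOWLEDGE_BASE)
print(prompt)  # this augmented prompt is what would be sent to the LLM
```

Because the knowledge base sits outside the model, it can be updated at any time without retraining the LLM, which is how RAG keeps answers current after the training cutoff.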

A demonstration of the Insolvency Bot, a use case of RAG (retrieval augmented generation) in the legal domain.

Here’s how RAG and prompt engineering can benefit businesses:

Real-world applications of retrieval augmented generation

The Future of NLP

RAG represents a significant step forward in NLP. By combining the power of LLMs with external knowledge, businesses can unlock new levels of efficiency, accuracy, and cost-effectiveness in information retrieval. As technology evolves, RAG is poised to play a central role in the future of human-computer interaction.

