Large language models and neural networks are powerful tools in natural language processing. These models let us achieve near human-level comprehension of complex documents, picking up nuance and improving efficiency across organisations.

You may have read about LLMs and other neural networks being trained by big tech companies on terabytes of data.

However, what do we do when we have only a small dataset to work with? I’ll define a small dataset as one with fewer than 100 documents, or one where the documents must be manually tagged one by one, perhaps by offshoring the work to remote freelancers. With such a dataset, every document has a real cost, so the dataset will never grow to the size needed to train an LLM.

There are a number of approaches which we can take, depending on our use case, performance requirements, and sensitivity restrictions.

| Approach | Pros | Cons |
|---|---|---|
| Zero-shot learning | You can get off the ground straight away. LLMs are smart and can handle nuance in sentences. | Inaccurate, especially on highly domain-specific tasks. Slow. May involve sending data to third parties. |
| Few-shot learning | Needs few training examples. Leverages the smartness of an LLM. | Still expensive to deploy and hard to explain. Very difficult to do in practice. |
| Convolutional neural network | Better at handling nuanced sentences, constructions involving “not”, etc. | May need an expensive webserver deployment. Expensive to train. Harder to adjust features manually (“feature engineering”). Needs more training examples than Naive Bayes. |
| Naive Bayes classifier | Fast to train. Explainable. Easy and cheap to deploy. Adjustable (you can add/remove features manually). Needs very few example documents to train. | Doesn’t understand sentence structure: only a bag-of-words approach. Doesn’t understand grammatical inflections. |

The most common use of large language models appears to be in prompt engineering, and in particular in retrieval augmented generation (RAG) applications. Here, an application is developed as a thin wrapper around a powerful LLM such as OpenAI’s GPT: it retrieves information from a domain-specific database and prepends this to the user’s prompt.

Suppose a user writes: “My company Bill’s Fish Ltd went bust in 2023, and I want to open a new company called William’s Fishing Ltd. I live in England.” ChatGPT alone cannot answer this question reliably, but we can look up the relevant statute and case law in a database and build a longer prompt containing that legal information.

For example, we can construct the augmented prompt: “The Insolvency Act 1986 Section 216 contains rules about phoenix companies, and restricts the re-use of a name of a liquidated company, stating [content of relevant paragraph]. The user has asked [original query]. Please answer the user’s query taking into account the Insolvency Act 1986 Section 216.” GPT can answer this augmented prompt much more reliably than the user’s original query.
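A minimal sketch of this retrieve-then-prepend step is below. It is an illustration only, not how our insolvency system is built: the two-entry `PASSAGES` "database", its keyword sets, and the function names are all hypothetical, the passage text is left as the same placeholder used above, and a real RAG system would normally use a proper search index or vector store rather than keyword overlap.

```python
import re

# Hypothetical mini "database": each entry has a source name, some keywords
# and the passage text (left as a placeholder here). A real system would
# store the actual statute and case law and use a search index instead.
PASSAGES = [
    {
        "source": "Insolvency Act 1986 Section 216",
        "keywords": {"bust", "insolvent", "insolvency", "liquidated", "liquidation", "phoenix"},
        "text": "[content of relevant paragraph]",
    },
    {
        "source": "Hypothetical employment law passage",
        "keywords": {"employee", "dismissal", "redundancy", "wages"},
        "text": "[content of relevant paragraph]",
    },
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphabetic tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, k: int = 1) -> list[dict]:
    """Return up to k passages sharing at least one keyword with the query."""
    tokens = tokenize(query)
    scored = [(len(tokens & p["keywords"]), p) for p in PASSAGES]
    scored = [item for item in scored if item[0] > 0]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_augmented_prompt(query: str, k: int = 1) -> str:
    """Prepend the retrieved passages to the user's query for the LLM."""
    hits = retrieve(query, k)
    if not hits:
        return query  # nothing relevant found: fall back to the raw query
    context = "\n".join(f"{p['source']}: {p['text']}" for p in hits)
    sources = ", ".join(p["source"] for p in hits)
    return (
        f"{context}\n"
        f"The user has asked: {query}\n"
        f"Please answer the user's query taking into account {sources}."
    )


query = ("My company Bill's Fish Ltd went bust in 2023, and I want to open "
         "a new company called William's Fishing Ltd. I live in England.")
print(build_augmented_prompt(query))
```

The keyword "bust" is what links the user's informal wording to Section 216 here; bridging that vocabulary gap is the hard part of retrieval, which is why real systems tend to use embeddings or curated synonyms.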

We have built a RAG based insolvency QA system which you can try at https://fastdatascience.com/insolvency.

Another case where LLMs can help is if we need to classify documents according to topic.

Imagine that you need to develop an email triage system which will categorise incoming emails to a council as “change of address”, “query about fines”, “request for Covid advice”, “query about rubbish collection”, and 10 other categories.

You can send your incoming emails to a large language model, list the categories, and ask it to categorise each one.
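The prompt-building and answer-parsing sides of this zero-shot approach can be sketched as follows. This is a hedged illustration: the call to the LLM itself is deliberately not shown, only four of the council's categories are listed, and the "other" catch-all and all function names are hypothetical additions for this sketch.

```python
# Only some of the council's categories are listed; "other" is a
# hypothetical catch-all added for this sketch.
CATEGORIES = [
    "change of address",
    "query about fines",
    "request for Covid advice",
    "query about rubbish collection",
    "other",
]


def build_zero_shot_prompt(email: str, categories: list[str]) -> str:
    """List the allowed categories and ask the model for exactly one of them."""
    options = "\n".join(f"- {c}" for c in categories)
    return (
        "Classify the following email sent to a council into exactly one "
        f"of these categories:\n{options}\n"
        "Reply with the category name only.\n\n"
        f"Email:\n{email}"
    )


def parse_category(reply: str, categories: list[str], default: str = "other") -> str:
    """Map the model's free-text reply back onto one of the known categories.

    Models sometimes add punctuation or extra words, so try an exact
    case-insensitive match first, then a substring match, and fall back to
    a default rather than trusting the reply blindly.
    """
    cleaned = reply.strip().strip(".\"'").lower()
    for c in categories:
        if cleaned == c.lower():
            return c
    for c in categories:
        if c.lower() in cleaned:
            return c
    return default
```

Parsing the reply defensively matters in practice: an LLM will not always answer with a category name verbatim, and anything unparseable should be routed to a human rather than silently misfiled.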

Or you can use an LLM (which could be running locally on your computer) to convert each email to a vector (an embedding), compare these vectors with vectors representing each of your categories, and assign each email to the most similar category.
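A minimal sketch of the vector-comparison approach, using cosine similarity, is below. To keep it self-contained, `embed` is a stand-in that returns a bag-of-words count vector; in a real system it would call an embedding model and return a dense vector, but the nearest-category logic stays the same. The category descriptions are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in for an embedding model: a sparse bag-of-words count vector.

    A real system would call an LLM-based embedding model here (ideally one
    hosted locally) and get back a dense vector of floats.
    """
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Hypothetical one-line descriptions used to build each category's vector.
CATEGORY_DESCRIPTIONS = {
    "change of address": "I have moved house and need to update my address",
    "query about fines": "question about a parking fine or penalty charge I have been fined",
    "query about rubbish collection": "my bin rubbish collection was missed recycling waste",
}
CATEGORY_VECTORS = {c: embed(d) for c, d in CATEGORY_DESCRIPTIONS.items()}


def classify(email: str) -> str:
    """Assign the category whose vector is most similar to the email's vector."""
    vector = embed(email)
    return max(CATEGORY_VECTORS, key=lambda c: cosine(vector, CATEGORY_VECTORS[c]))


print(classify("Our bin was not collected this week"))
```

How well this works depends heavily on how each category vector is built; averaging the embeddings of a few real example emails per category is a common alternative to a single description.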

I’ve tried this on a number of projects (for example, categorising healthcare demands for NGOs), but I have personally found that asking an LLM to categorise texts in this way is not very accurate, and the LLM’s categorisations had to be redone by a human. I think this is because LLMs are so general: they can easily distinguish “politics” from “sport” from “finance”, whereas business applications are much more nuanced and involve multiple subcategories (e.g. randomised controlled trials vs observational studies vs meta-analyses, which all fall within the clinical domain).

If you do use LLMs for a purpose like categorising incoming emails, it’s important to check you’re not sending sensitive data outside your organisation - you may want to use an on-premises hosted LLM. Check our blog post about machine learning on sensitive data.

The next step up from zero-shot learning, still using LLMs, would be to take an existing LLM that was developed for a similar but different domain from your use case, and retrain a part of it, or develop a way to use it and transform its output for your use case.

Few-shot learning requires less training data than traditional machine learning methods, and can be adapted quickly to new tasks or domains with minimal data.
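One common way to do few-shot learning with an LLM, without retraining any part of it, is few-shot prompting: you show the model a handful of labelled examples before the text you want classified. The sketch below only builds such a prompt; the example texts are made up, the category names are borrowed from the clinical example above, and sending the prompt to a model is not shown.

```python
# Hypothetical labelled examples; in practice you would choose a handful of
# real, representative documents for each category.
EXAMPLES = [
    ("Participants were randomly assigned to receive the vaccine or a placebo.",
     "randomised controlled trial"),
    ("We followed a cohort of adults for ten years without any intervention.",
     "observational study"),
    ("We pooled the results of 14 previously published trials.",
     "meta-analysis"),
]
CATEGORIES = ["randomised controlled trial", "observational study", "meta-analysis"]


def build_few_shot_prompt(examples: list[tuple[str, str]],
                          categories: list[str], text: str) -> str:
    """Show the model a few labelled examples, then ask it to label `text`."""
    for _, label in examples:
        if label not in categories:
            raise ValueError(f"example label {label!r} is not a known category")
    lines = ["Classify each text into one of: " + ", ".join(categories) + ".", ""]
    for example_text, label in examples:
        lines += [f"Text: {example_text}", f"Category: {label}", ""]
    # End with an open "Category:" slot for the model to complete.
    lines += [f"Text: {text}", "Category:"]
    return "\n".join(lines)


print(build_few_shot_prompt(EXAMPLES, CATEGORIES,
                            "A retrospective review of hospital records."))
```

Which examples you pick, and in what order, can noticeably change the model's answers, which is part of why this approach is hard to get right in practice.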

However, this approach might not achieve the same level of accuracy as models trained on large datasets. It can also be very difficult to adapt an existing LLM in this way.

A convolutional neural network (CNN) is a kind of model which can take into account the context of words in a sentence. So terms such as “not” can be handled correctly.

The main advantage of CNNs over simpler tools such as the Naive Bayes model is their contextual understanding: a CNN can capture relationships between neighbouring words in a sentence. CNNs are robust and perform well on noisy or ambiguous text.
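To illustrate where that contextual understanding comes from, here is a toy sketch of a single convolutional filter of width 2 sliding over word vectors, followed by a ReLU and max-pooling. It is not a trained CNN: the 2-dimensional embeddings and the filter weights are set by hand so that the filter responds to a negator followed by a positive word. The two example sentences contain exactly the same bag of words, so a bag-of-words model cannot tell them apart, but the filter can.

```python
from collections import Counter

# Toy 2-dimensional word embeddings: [is_negator, sentiment].
# Real CNNs use learned embeddings with hundreds of dimensions.
EMBEDDINGS = {"not": [1.0, 0.0], "good": [0.0, 1.0], "bad": [0.0, -1.0]}
UNKNOWN = [0.0, 0.0]

# A single width-2 filter, set by hand rather than trained, that fires on a
# negator immediately followed by a positive word (e.g. "not good").
FILTER = [
    [1.0, 0.0],  # weights for the first word in the window
    [0.0, 1.0],  # weights for the second word in the window
]
BIAS = -1.0


def conv_max_pool(sentence: str) -> float:
    """Slide the filter over the sentence, apply ReLU, then max-pool over positions."""
    vectors = [EMBEDDINGS.get(w, UNKNOWN) for w in sentence.lower().split()]
    width = len(FILTER)
    activations = []
    for i in range(len(vectors) - width + 1):
        window = vectors[i:i + width]
        z = BIAS + sum(
            w * x
            for weights, vector in zip(FILTER, window)
            for w, x in zip(weights, vector)
        )
        activations.append(max(0.0, z))  # ReLU
    return max(activations, default=0.0)  # max-pooling


a = "the food was bad not good"
b = "the food was good not bad"
print(Counter(a.split()) == Counter(b.split()))  # True: identical bags of words
print(conv_max_pool(a), conv_max_pool(b))        # 1.0 0.0
```

A real CNN learns many such filters from labelled data, which is one reason it needs more training examples than Naive Bayes.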

However, CNNs require a larger amount of labelled data than few-shot learning. They can be expensive to train and run, and it can be difficult to modify them manually (e.g. to tell them to ignore certain words or phrases), short of simply preprocessing all text as it comes in.

In practice, where data is sparse, I usually go back to the 90s and use a Naive Bayes classifier. This is what we could call a “small language model”. A typical Naive Bayes classifier amounts to a list of perhaps 1,000 keywords, each with associated weights. So a document which contains the word “observation” or “observational” might be assigned to the category “observational studies”.
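To make the "list of keywords with weights" idea concrete, here is a minimal from-scratch sketch of a multinomial Naive Bayes classifier with add-one (Laplace) smoothing. The training documents are made up, and this is not the code behind any particular tool; in practice you would more likely use a library implementation such as scikit-learn's `MultinomialNB`. The point is that the whole trained model is just the `log_likelihood` table of per-word, per-category weights plus a prior for each category.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def train_naive_bayes(docs: list[str], labels: list[str], alpha: float = 1.0):
    """Multinomial Naive Bayes with add-alpha (Laplace) smoothing.

    Returns (log_prior, log_likelihood), where log_likelihood[label][word]
    is the weight of that word for that category.
    """
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for doc, label in zip(docs, labels):
        tokens = tokenize(doc)
        word_counts[label].update(tokens)
        vocab.update(tokens)
    log_prior = {c: math.log(n / len(labels)) for c, n in class_counts.items()}
    log_likelihood = {}
    for c in class_counts:
        total = sum(word_counts[c].values()) + alpha * len(vocab)
        log_likelihood[c] = {
            w: math.log((word_counts[c][w] + alpha) / total) for w in vocab
        }
    return log_prior, log_likelihood


def predict(model, text: str) -> str:
    """Pick the class with the highest log-prior plus summed word weights.
    Words never seen in training are ignored."""
    log_prior, log_likelihood = model
    tokens = tokenize(text)
    scores = {
        c: log_prior[c] + sum(ll[t] for t in tokens if t in ll)
        for c, ll in log_likelihood.items()
    }
    return max(scores, key=scores.get)


# Made-up training documents for two clinical study types.
DOCS = [
    "observational cohort study of smokers",
    "a prospective observational study",
    "patients were randomised to drug or placebo",
    "double blind randomised placebo controlled trial",
]
LABELS = ["observational", "observational", "rct", "rct"]

model = train_naive_bayes(DOCS, LABELS)
print(predict(model, "an observational study of diet"))
```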

On the surface, a Naive Bayes classifier might appear to be an overly crude and outdated tool in the age of large language models. Yet Naive Bayes classifiers are quick to train, occupy next to no disk space, and can be deployed on a serverless solution such as AWS Lambda, Azure Functions, Google Cloud Functions, or similar (which means that you pay very little to keep them running in a business solution). And they are explainable in a way that LLMs are not: you can easily find out what weight and importance a Naive Bayes classifier assigns to each word, and actively remove words that you disagree with.
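As a sketch of how small such a deployment can be, here is a Naive Bayes model stored as plain data and wrapped in an AWS Lambda-style Python handler (`lambda_handler(event, context)`). The model weights are made up, and the handler assumes an API Gateway proxy-style event whose `body` is a JSON string such as `{"text": "..."}`; adjust that to however your function is actually invoked.

```python
import json
import re

# Hypothetical exported model: log-priors and per-word log-likelihoods.
# These numbers are made up; a real model would be exported from training
# (e.g. as a JSON file packaged with the function) and hold ~1,000 words.
MODEL = {
    "log_prior": {"HIV": -0.69, "TB": -0.69},
    "log_likelihood": {
        "HIV": {"antiretroviral": -2.0, "tenofovir": -2.5, "sputum": -6.0},
        "TB": {"antiretroviral": -6.0, "tenofovir": -6.5, "sputum": -2.0},
    },
}


def classify(text: str) -> str:
    """Score each class as its log-prior plus the weights of known words."""
    tokens = re.findall(r"[a-z]+", text.lower())
    scores = {}
    for label, prior in MODEL["log_prior"].items():
        weights = MODEL["log_likelihood"][label]
        scores[label] = prior + sum(weights[t] for t in tokens if t in weights)
    return max(scores, key=scores.get)


def lambda_handler(event, context):
    """AWS Lambda entry point, assuming an API Gateway proxy event whose
    "body" is a JSON string such as {"text": "..."}."""
    body = json.loads(event.get("body") or "{}")
    text = body.get("text", "")
    if not text:
        return {"statusCode": 400, "body": json.dumps({"error": "missing 'text'"})}
    return {"statusCode": 200, "body": json.dumps({"label": classify(text)})}
```

Because the model is only a dictionary of numbers, the function needs no third-party libraries and starts quickly, which keeps serverless costs low.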

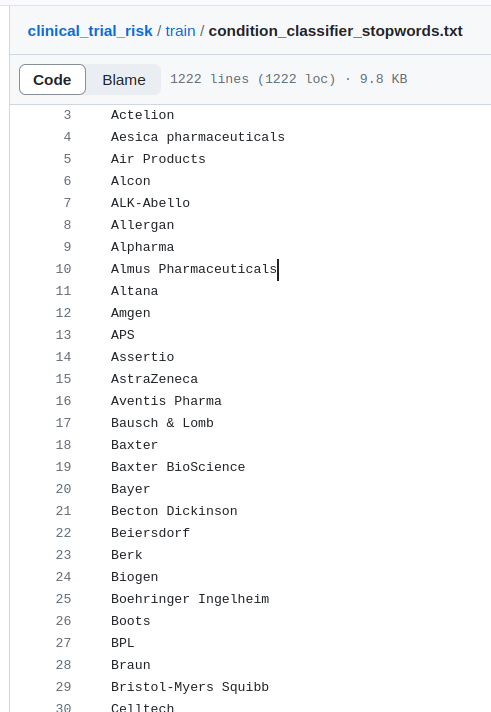

For example, for the development of the Clinical Trial Risk Tool, I needed to develop a “condition classifier”, that is, a Naive Bayes model that would “read” an incoming clinical trial protocol and identify whether it was about HIV, TB, or another condition. Since my data was hand-tagged, I had only about 50 example documents in each category. So the best thing I could do was to train a Naive Bayes classifier on those documents, and then manually exclude certain words. For example, if one pharma company consistently ran HIV trials and no TB trials, my Naive Bayes classifier “learnt” that a mention of that company’s name meant that the trial was about HIV.

This is obviously undesirable, like when a small child generalises that all furry animals are cats. So I added all pharma company names, all months of the year, all country names, etc., to a list of stopwords (words that my classifier should ignore).
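Here is a small sketch of how a custom stopword list removes such shortcuts. The protocol snippets, company names and stopword lists are all made up, and the helper `words_exclusive_to` is a hypothetical quick check rather than part of the Clinical Trial Risk Tool: it lists words that occur only in one class's training documents, which in a tiny dataset is exactly where sponsor names, dates and countries tend to show up.

```python
import re

# Hypothetical stopword lists; real lists would be far longer
# (every sponsor name, month and country you can find).
PHARMA_COMPANIES = {"acmepharma", "globex"}
MONTHS = {
    "january", "february", "march", "april", "may", "june", "july",
    "august", "september", "october", "november", "december",
}
COUNTRIES = {"kenya", "uganda", "india", "brazil"}
CUSTOM_STOPWORDS = PHARMA_COMPANIES | MONTHS | COUNTRIES


def tokenize(text, stopwords=frozenset()):
    """Lowercase, split into alphabetic tokens and drop any stopwords."""
    return {t for t in re.findall(r"[a-z]+", text.lower()) if t not in stopwords}


def words_exclusive_to(label, docs, labels, stopwords=frozenset()):
    """Words that appear in documents of `label` but in no other class.

    With a tiny training set these often include shortcuts such as a
    sponsor's name, which the stopword list is meant to remove.
    """
    inside, outside = set(), set()
    for doc, doc_label in zip(docs, labels):
        (inside if doc_label == label else outside).update(tokenize(doc, stopwords))
    return sorted(inside - outside)


# Made-up protocol snippets.
DOCS = [
    "Acmepharma phase 2 trial of tenofovir in Kenya, March 2020",
    "Acmepharma antiretroviral study, Uganda, June 2021",
    "Globex phase 3 trial of a new sputum test in India, March 2020",
    "Globex tuberculosis treatment study, Brazil, June 2021",
]
LABELS = ["HIV", "HIV", "TB", "TB"]

print(words_exclusive_to("HIV", DOCS, LABELS))
print(words_exclusive_to("HIV", DOCS, LABELS, CUSTOM_STOPWORDS))
```

Without the stopwords, the sponsor name and the countries look like HIV indicators; with them, only the genuinely clinical words remain. The same filtered tokenizer would then be used when training the classifier.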

Adding these stopwords made my classifier a lot smarter. After spending a little time adjusting my stopword lists, I was able to make my classifier output the top words in each category:

| Rank | Strongest predictors for class 0 CANCER | Strongest predictors for class 5 HIV |
|---|---|---|
| 0 | atezolizumab | hvtn |
| 1 | tumor | taf |
| 2 | pet | hptn |
| 3 | nci | ftc |
| 4 | chemotherapy | arv |
| 5 | lesions | impaact |
| 6 | radiation | hiv |
| 7 | cancer | antiretroviral |
| 8 | biopsy | tenofovir |
| 9 | cycle | tdf |
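A list like this can be produced in several ways; the source doesn't say which scoring was used. One common option, sketched below on made-up documents, ranks each class's words by a smoothed log-likelihood ratio, log P(word | class) minus log P(word | all other classes), so that words frequent in one class and rare elsewhere rise to the top.

```python
import math
import re
from collections import Counter, defaultdict


def top_predictors(docs, labels, n=10, alpha=1.0):
    """Rank each class's words by a smoothed log-likelihood ratio:
    log P(word | class) - log P(word | all other classes)."""
    counts = defaultdict(Counter)
    for doc, label in zip(docs, labels):
        counts[label].update(re.findall(r"[a-z]+", doc.lower()))
    vocab = set().union(*counts.values())
    result = {}
    for label, in_counts in counts.items():
        # Pool the word counts of every other class.
        out_counts = Counter()
        for other, other_counts in counts.items():
            if other != label:
                out_counts.update(other_counts)
        in_total = sum(in_counts.values()) + alpha * len(vocab)
        out_total = sum(out_counts.values()) + alpha * len(vocab)
        score = {
            w: math.log((in_counts[w] + alpha) / in_total)
            - math.log((out_counts[w] + alpha) / out_total)
            for w in vocab
        }
        # Highest score first; ties broken alphabetically.
        result[label] = sorted(vocab, key=lambda w: (-score[w], w))[:n]
    return result


# Made-up training snippets.
DOCS = [
    "tumor biopsy and chemotherapy cycle",
    "radiation to the tumor lesions",
    "antiretroviral therapy with tenofovir",
    "hiv viral load on antiretroviral therapy",
]
LABELS = ["CANCER", "CANCER", "HIV", "HIV"]

for label, words in top_predictors(DOCS, LABELS, n=3).items():
    print(f"Strongest predictors for class {label}")
    for rank, word in enumerate(words):
        print(rank, word)
```

Printing a list like this after every change to the stopwords is a quick way to spot words that shouldn't be there.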

I have found this hybrid human+machine approach very helpful, and for many tasks with very little training data it has outperformed anything I have been able to achieve with LLMs or more advanced models.

When you have only a very small training dataset, I strongly recommend trying Naive Bayes first. If more advanced models can improve on the Naive Bayes accuracy in the metrics that matter to you, that’s great. However, it’s important to always compare your more advanced models against the Naive Bayes baseline.
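A minimal sketch of such a comparison is below, using macro-averaged F1 (the unweighted mean of per-class F1 scores) as one reasonable metric when some categories are much smaller than others. The function names and toy labels are hypothetical; with only a few dozen test documents, small differences between models may be noise, so cross-validation is worth considering.

```python
def macro_f1(gold: list[str], predicted: list[str]) -> float:
    """Unweighted mean of per-class F1, so small classes count as much as large ones."""
    if len(gold) != len(predicted) or not gold:
        raise ValueError("gold and predicted must be non-empty and the same length")
    labels = sorted(set(gold) | set(predicted))
    f1_scores = []
    for label in labels:
        tp = sum(g == label and p == label for g, p in zip(gold, predicted))
        fp = sum(g != label and p == label for g, p in zip(gold, predicted))
        fn = sum(g == label and p != label for g, p in zip(gold, predicted))
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        f1_scores.append(f1)
    return sum(f1_scores) / len(f1_scores)


def compare_to_baseline(gold, baseline_pred, candidate_pred) -> dict:
    """Score a candidate model against the Naive Bayes baseline on the same test set."""
    base = macro_f1(gold, baseline_pred)
    cand = macro_f1(gold, candidate_pred)
    return {
        "baseline_macro_f1": base,
        "candidate_macro_f1": cand,
        "candidate_beats_baseline": cand > base,
    }


gold = ["HIV", "HIV", "TB", "TB"]
print(compare_to_baseline(gold, baseline_pred=gold, candidate_pred=["HIV"] * 4))
```

A candidate that labels everything as the majority class can look acceptable on plain accuracy but scores poorly on macro F1, which is why the choice of metric matters.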

I’ve lost count of the number of projects where I’ve been brought on board and the previous data scientist had built something fancy using neural networks (perhaps at a client’s or boss’s insistence), and the end result performed worse than Naive Bayes, was more expensive and error-prone to run, and was less explainable.
