We used natural language processing to uncover the clues that pointed to a rogue journalist’s history of submitting fake news

Der Spiegel is Germany’s most respected news magazine. In 2018, the German media sector was shocked by the news that Der Spiegel’s rising star, Claas Relotius, had “falsified his articles on a grand scale”. Critics of the magazine seized the chance to accuse it of peddling “fake news”, and the German far-right party Alternative for Germany (AfD) wrote that the scandal confirmed its view of the media as die Lügenpresse (the lying press). The consequences are still felt today. Juan Moreno, the whistleblower who discovered the fake articles, wrote that participants at one journalist event referred to Relotius as “Lord Voldemort”.

Trailer of Tausend Zeilen (Thousand Lines), a film by Warner Bros about the Relotius affair, starring Elyas M’Barek.

The only other comparable fake news scandal in German journalism is arguably the Hitler Diaries, a set of forged diaries that the magazine Stern purchased in 1983. The magazine’s cover read “Hitler’s diaries discovered”, and the story was a major scoop for Stern at the time.

The Stern magazine front cover from 28 April 1983. Fair use (low resolution image).

Across the Atlantic, the film Shattered Glass tells the story of the fall from grace of Stephen Glass, who had falsified articles for The New Republic.

Glass worked for The New Republic from 1995 until 1998, when it was discovered that many of his articles were completely fabricated.

Is it possible to identify when somebody is not telling the truth? You may be aware of the subtle body language, tics and signals that give away a liar, but what about the written word? How about fake news?

When I read about the Relotius affair, I couldn’t help wondering whether natural language processing could have helped detect Relotius sooner. Natural language processing can be used to analyse the style of a text and identify candidate authors, so what about distinguishing real from fabricated texts, such as fake news?

Let’s try applying some data science magic to Relotius’ articles, to see what we can learn.

In 2018, a caravan of several thousand Central American migrants was making its way from Honduras through the Sonoran Desert in Mexico and onwards to its final goal, the United States.

Juan Moreno, a 45-year-old freelance reporter, was travelling alongside the migrant caravan, gathering material for a feature piece for Der Spiegel.

Moreno had been tasked with covering the caravan as it travelled through Mexico. He had spent several gruelling weeks in the desert and had already found two young women who were willing to let him shadow them for a few days.

He was not happy to receive an email from the Spiegel editors saying that his young, successful colleague Claas Relotius would now be working on the article with him and would take editorial control over the final version.

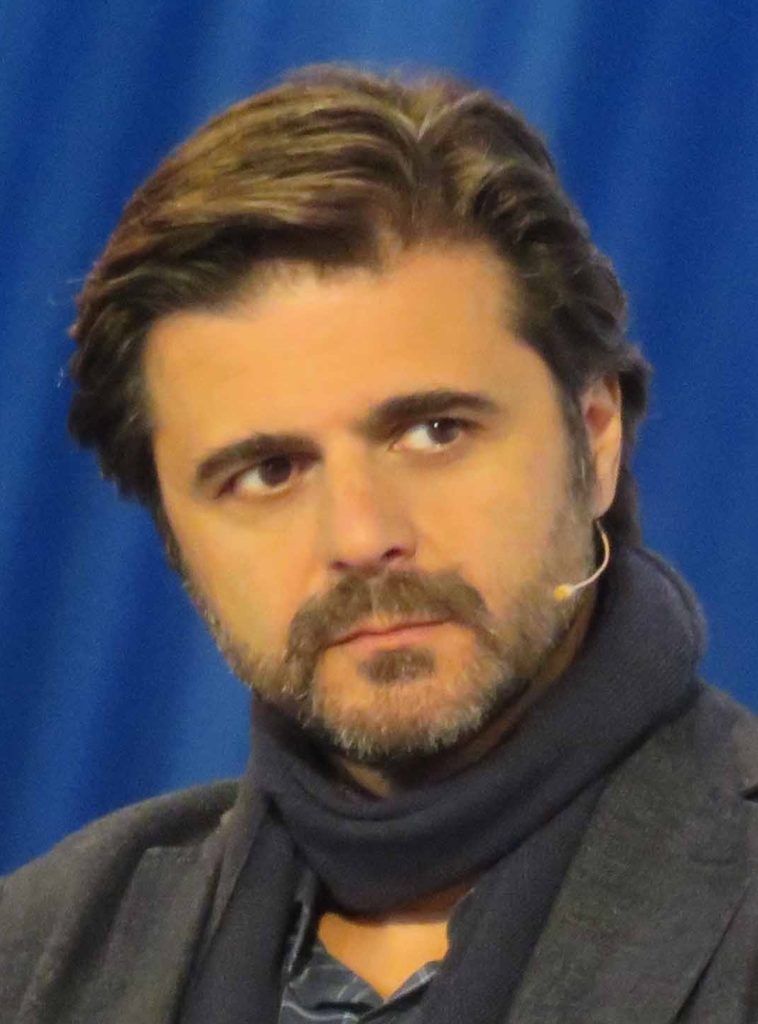

Juan Moreno (image source: Wikimedia Commons)

Claas Relotius (image source: Wikimedia Commons. CC BY-SA 4.0)

Relotius had won more than 40 prizes in journalism and was widely regarded as a rising star in the field.

Relotius was to travel to Arizona and track down a militia, a group of volunteers who spend their time and money defending the US southern border from the perceived threat of illegal migration, while Moreno would stay in Mexico and continue to report on the migrants.

After the assignment was finished, Moreno flew back to Germany.

When Moreno received Relotius’ drafts and the final article, titled Jaeger’s Border (German: Jaegers Grenze), something just didn’t feel right. Relotius claimed to have spent a few days in the company of a militia called Arizona Border Recon. In Relotius’ article, the members of Arizona Border Recon were armed and went by colourful nicknames such as Jaeger, Spartan and Ghost. Relotius claimed to have witnessed Jaeger shooting at an unidentified figure in the desert. The militia were portrayed as a stereotypical band of hillbillies, and some details seemed hard to believe.

Moreno began looking into Jaeger’s Border and Relotius’ other articles. He spent his own savings on a private investigation, retracing Relotius’ footsteps to Arizona and other locations in the USA. It quickly became clear that Relotius had been fabricating stories rather than interviewing the subjects he claimed to have interviewed.

Many of Relotius’ articles relied on stereotypes and the stories seemed far-fetched and too good to be true. For me, the most absurd story centres on a brother and sister from Syria who were working in a Turkish sweatshop. Relotius invented a Syrian children’s song about two orphans who grow up to be king and queen of Syria. According to the article, every Syrian child “from Raqqah to Damascus” is familiar with this traditional song. Moreno spoke to a Syrian mechanic, university lecturers in Arabic and Middle Eastern Studies, and as many Syrian experts as he could find. Nobody had heard of the song!

After much persistence from Moreno, the management at Der Spiegel reluctantly investigated Relotius’ articles and concluded that he had indeed fabricated the majority of them during his eight-year tenure.

Relotius had invented interviews that never took place, and people who never existed. He even wrote an article about rising sea levels in the Pacific island nation of Kiribati without bothering to take his connecting flight there.

Der Spiegel issued a mass retraction of the affected fake news articles and the ‘Relotius Affair’ became a nationwide scandal, making news worldwide and prompting an intervention by the US ambassador to Germany who objected to the “anti-American sentiment” of some of the articles.

The article Jaeger’s Border and Relotius’ other texts can be downloaded as a PDF from Der Spiegel’s website. In total, 59 articles are available for download, together with annotations by Der Spiegel indicating what content is genuine and what is pure invention or fake news.

There is a large amount of English language content available online on the Relotius scandal, including English translations of many of the articles.

Seinen vierten Reporterpreis gewann er… weil er vor dem Konjunktiv, anders als viele andere Schreiber, eben nicht zurückwich, sondern den Zweifel immer mitschwingen ließ.

He won his fourth reporter prize… because, unlike many other writers, he did not shy away from the subjunctive, but always let an element of doubt resonate.

Juan Moreno, Tausend Zeilen Lüge

In formal written German, a special verb form, the subjunctive (Konjunktiv), is used for events that the writer heard second hand or is quoting. For example, an article might say Er habe mit der Niederlage nicht gerechnet ((he said) he had not counted on defeat) if this is reported speech, whereas Er hat mit der Niederlage nicht gerechnet states it as fact. The habe form is the subjunctive; the related form hätte (as in Er hätte mit der Niederlage nicht gerechnet) is also used in reported speech and for conjecture. Moreno remarks in the above quote that Relotius used the subjunctive more liberally than other authors.


I downloaded all 59 available Relotius articles and Der Spiegel’s annotations and tried a few data science experiments on them.

First of all, I checked the truth status of the articles. More than half are fictitious, although for some articles Der Spiegel was not able to determine whether they were genuine. I excluded the latter from my analysis.

![Summary of Relotius’ articles by truth status](https://fastdatascience.com/images/relotius_summary.png)

Of Relotius’ 59 articles, 32 are definitely fake news, while the remainder are true or unclear. We will analyse the genuine and fake news with natural language processing.
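To make the filtering step concrete, here is a minimal Python sketch. It assumes the annotations have already been transcribed from Der Spiegel’s PDFs into simple records with a hypothetical `status` field taking the values `fake`, `genuine` or `unclear`; the records below are invented placeholders, not the real data.

```python
from collections import Counter

# Hypothetical records standing in for Der Spiegel's annotations;
# titles and labels are invented for illustration only.
articles = [
    {"title": "Article A", "status": "fake"},
    {"title": "Article B", "status": "genuine"},
    {"title": "Article C", "status": "unclear"},
    {"title": "Article D", "status": "fake"},
]

def status_counts(records):
    """Count how many articles carry each truth-status label."""
    return Counter(r["status"] for r in records)

def labelled_only(records):
    """Keep only articles whose status is known (fake or genuine)."""
    return [r for r in records if r["status"] in {"fake", "genuine"}]

print(status_counts(articles))
print(len(labelled_only(articles)))  # 3
```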

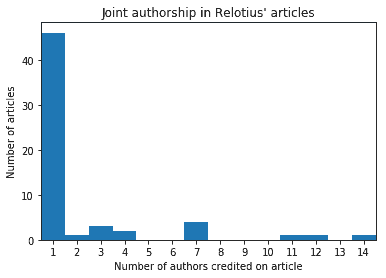

The vast majority of Relotius’ articles were written by him alone. Moreno later stated that it was quite unusual at Der Spiegel for a reporter to take on so many solo assignments, but Relotius was the publication’s star reporter and seemed to have acquired a certain privilege in this regard.

The fake news articles tended to be written by Relotius alone. In total, 44 articles were under his sole authorship.

Of course, we now know that it was easier for him to fabricate content when working alone.

There is something else interesting about the above graph. Relotius wrote only one article in a team of two. The other collaborative articles all involved larger teams of up to 14 authors.

The sole two-author article is Jaeger’s Border, the article which got Relotius caught out!

This shows that Relotius had a pattern of either writing articles alone, or in a large team. He managed to get away with this strategy for years until the Jaeger’s Border assignment. Perhaps when you are collaborating in a large group it is also easier to avoid scrutiny.
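A tally like the one in the graph above can be produced by grouping articles by team size and status. The sketch below assumes a hypothetical `authors` count per article, taken from the bylines; the sample records are made up for illustration.

```python
from collections import Counter

# Hypothetical metadata: number of credited authors and truth status per article.
articles = [
    {"authors": 1, "status": "fake"},
    {"authors": 1, "status": "genuine"},
    {"authors": 2, "status": "fake"},
    {"authors": 14, "status": "genuine"},
    {"authors": 1, "status": "fake"},
]

def team_bucket(n_authors):
    """Map an author count to 'solo', 'pair' or 'large team'."""
    if n_authors == 1:
        return "solo"
    if n_authors == 2:
        return "pair"
    return "large team"

def crosstab(records):
    """Count articles for each (team bucket, status) combination."""
    return Counter((team_bucket(r["authors"]), r["status"]) for r in records)

for (bucket, status), n in sorted(crosstab(articles).items()):
    print(f"{bucket:<10} {status:<8} {n}")
```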

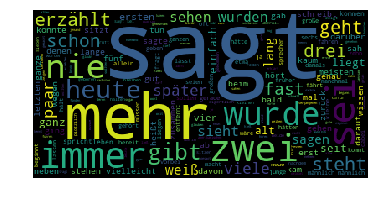

I tried generating a word cloud of the genuine and fake news articles, to see if there is any discernible difference. A word cloud shows words in different font sizes according to how often they occur in a set of documents.

Word cloud for the genuine news articles. The largest (most common) word is sagt (says).

Word cloud for the fake news articles.
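Word clouds are driven by simple word frequencies. Here is a minimal sketch of counting the most frequent words in each set of articles, assuming a crude regex tokenizer and a tiny hand-picked German stopword list (both assumptions; a real analysis would use a fuller stopword list). The example sentences are invented.

```python
import re
from collections import Counter

# A tiny illustrative stopword list (an assumption, not a complete German list).
STOPWORDS = {"der", "die", "das", "und", "er", "sie", "es", "in", "ist", "ein", "eine", "nicht"}

def tokenize(text):
    """Lower-case and split into alphabetic tokens (umlauts and ß kept)."""
    return re.findall(r"[a-zäöüß]+", text.lower())

def top_words(texts, n=10):
    """The n most frequent non-stopword tokens across a set of texts."""
    counts = Counter(tok for text in texts for tok in tokenize(text) if tok not in STOPWORDS)
    return counts.most_common(n)

genuine = ["Er sagt, er sei müde.", "Sie sagt, es gebe mehr Arbeit."]
fake = ["Der Schütze feuert in die Wüste.", "Die Wüste ist still."]
print(top_words(genuine, 3))
print(top_words(fake, 3))
```

A word cloud library then simply scales each word’s font size by these counts.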

Unfortunately, there is not a huge difference between the two sets.

However, I can see some patterns.

The words sei and würde are more common in the genuine news articles. These are subjunctive verb forms (see Moreno’s quote above) that are often used in reported speech. It appears that the fake news involved more description of direct action and less tentative reporting or reported speech. This is consistent with Moreno’s remark that Relotius used the subjunctive relatively often.
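One way to quantify this is to count subjunctive forms per 1,000 tokens in each set of articles. The marker list below is a hypothetical, hand-picked selection, and a plain word list cannot tell the indicative ich habe apart from the subjunctive er habe, so the numbers are only a rough indicator. The example sentences are invented.

```python
import re

# Hypothetical, hand-picked subjunctive forms common in reported speech.
SUBJUNCTIVE_MARKERS = {"sei", "seien", "habe", "hätte", "würde", "würden"}

def tokenize(text):
    """Lower-case and split into alphabetic tokens (umlauts and ß kept)."""
    return re.findall(r"[a-zäöüß]+", text.lower())

def rate_per_thousand(texts, markers=SUBJUNCTIVE_MARKERS):
    """Marker occurrences per 1,000 tokens, pooled over all texts in a set."""
    tokens = [tok for text in texts for tok in tokenize(text)]
    if not tokens:
        return 0.0
    hits = sum(1 for tok in tokens if tok in markers)
    return 1000 * hits / len(tokens)

genuine = ["Er sagt, er sei müde.", "Sie sagt, sie habe keine Zeit."]
fake = ["Jaeger schießt in die Wüste.", "Der Wind heult."]
print(rate_per_thousand(genuine), rate_per_thousand(fake))
```

Normalising by token count matters here because the genuine and fake sets differ in total length.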

The word deutschen (‘German’) is more common in the genuine news articles. In his book, Moreno explains that Relotius only faked articles that involved travel outside Germany, presumably because it would be harder to make up fake German news for a German audience and make it sound convincing.

I then tried a more scientific approach. I used a Naive Bayes classifier to find the words that most strongly indicate whether an article is genuine or fictitious.

The Naive Bayes approach assigns each word a score that is the logarithm of a probability, so all the scores are negative. Words that strongly indicate fake news receive a large negative number, and words that indicate genuine news a smaller negative number.
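Per-word scores of this kind can be computed with a small from-scratch multinomial Naive Bayes, following the smoothed estimate described by Manning et al.: for each class c and word w, the score is log((W_cw + α) / (W_c + αV)), where W_cw is the summed tf*idf weight of w in class c, W_c is the class total, V is the vocabulary size and α is a smoothing constant. This is a sketch rather than the exact code behind the tables; the smoothed idf formula (similar to scikit-learn’s default) and the toy sentences are assumptions.

```python
import math
import re
from collections import Counter, defaultdict

def tokenize(text):
    """Lower-case and split into alphabetic tokens (umlauts and ß kept)."""
    return re.findall(r"[a-zäöüß]+", text.lower())

def tfidf(docs):
    """One {word: tf*idf} dict per document, with idf = ln((1+N)/(1+df)) + 1."""
    counts = [Counter(tokenize(d)) for d in docs]
    n = len(docs)
    df = Counter(w for c in counts for w in c)  # number of documents containing each word
    return [{w: tf * (math.log((1 + n) / (1 + df[w])) + 1) for w, tf in c.items()}
            for c in counts]

def feature_log_probs(weights, labels, alpha=1.0):
    """Per-class multinomial NB scores: log((W_cw + alpha) / (W_c + alpha * V))."""
    vocab = sorted({w for doc in weights for w in doc})
    totals = defaultdict(Counter)  # summed tf*idf weight of each word per class
    for doc, label in zip(weights, labels):
        totals[label].update(doc)
    scores = {}
    for label, tot in totals.items():
        denom = sum(tot.values()) + alpha * len(vocab)
        scores[label] = {w: math.log((tot[w] + alpha) / denom) for w in vocab}
    return scores

docs = ["Er sagt, er sei müde.", "Sie sagt, es gebe mehr Arbeit.",
        "Sie enthaupteten den korrupten Wächter.", "Die Wüste ist lebensbedrohlich."]
labels = ["genuine", "genuine", "fake", "fake"]
scores = feature_log_probs(tfidf(docs), labels)
for label, s in scores.items():
    print(label, sorted(s, key=s.get, reverse=True)[:3])
```

Because every smoothed probability is below 1, every score is negative. Any word with zero weight in a class receives the same floor score, log(α / (W_c + αV)), which is one way that ties such as the repeated -9.29 can arise.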

Here are the top 15 words that indicate that an article is genuine, with English translations and the scores from the Naive Bayes classifier:

| German | English | Score |
|---|---|---|
| sagt | says | -8.37 |
| sei | is (reported speech) | -8.64 |
| mehr | more | -8.69 |
| immer | always | -8.77 |
| geht | goes | -8.79 |
| schon | already | -8.84 |
| deutschen | German | -8.85 |
| später | later | -8.88 |
| nie | never | -8.89 |
| sagte | said | -8.89 |
| seit | since | -8.90 |
| gibt | gives/there is | -8.92 |
| bald | soon | -8.92 |
| kommen | come | -8.92 |
| gut | good | -8.93 |

And here are the top 15 words that indicate that an article is fictitious:

| German | English | Score |
|---|---|---|
| enthaupteten | beheaded | -9.29 |
| verstümmelten | mutilated | -9.29 |
| abgeladen | unloaded | -9.29 |
| gegenwärtig | current | -9.29 |
| abschrecken | deter, scare off | -9.29 |
| richteten | directed | -9.29 |
| glieds | member | -9.29 |
| öffentlichten | published | -9.29 |
| umfangreiche | extensive | -9.29 |
| preisgeben | divulge | -9.29 |
| zurückgezogen | withdrawn | -9.29 |
| hackten | hacked | -9.29 |
| korrupte | corrupt | -9.29 |
| bloggenden | blogging | -9.29 |
| lebensbedrohlich | life threatening | -9.29 |

This is just a snapshot, but we can see some more patterns now. The fake news seems to be heavy in strong, emotive or very graphic language such as corrupt or mutilated. When I looked at the top 100 words, this effect was still noticeable.

I then tested whether the Naive Bayes classifier could predict if an unseen Relotius text was fake or genuine, but unfortunately it could not do so with any useful degree of accuracy.

With only 59 articles to work from, it is not possible to build a reliable fake news detector. But knowing in retrospect that Relotius falsified some texts, it is definitely possible to observe patterns and clear differences between his genuine and fake articles.

Knowing these effects, it may be possible to flag suspicious texts in future. If a reporter seems overly keen on working alone and travelling abroad, and seems to interview few subjects, but writes in colourful language that would be more appropriate in a novel, then perhaps something is amiss?

Naturally Relotius’ prizes were revoked and returned one by one, and he resigned from his position at Der Spiegel.

Juan Moreno, the whistleblower who discovered Relotius’ fraud, wrote a tell-all book about the Relotius Affair, titled A Thousand Lines of Lies (Tausend Zeilen Lüge). The book is a fascinating exposé of the world of print journalism in the digital age as well as a first-hand account of how Relotius’ system unravelled.

In 2019, Relotius started legal proceedings against Moreno for alleged falsehoods in the book.

Since the Relotius affair, the topic of fake news and fake content has become ever more important. The last few years have seen the release of large language models (LLMs) such as GPT-3 and ChatGPT, and their growing use in content generation. The Guardian reported in 2023 that nearly 50 news websites are AI-generated, with bizarre headlines such as “Biden dead. Harris acting president, address 9am ET” perplexing readers.

In another blog post I explore the possibilities of evaluating generative models and LLMs such as ChatGPT numerically. As part of a research project with Royal Holloway University, we are experimenting with generative models for legal NLP and combining generative models to answer questions about corporate insolvency. LLMs are incredibly versatile and can be adapted to many tasks. So what about fake news detection with LLMs?

If a document contains “I cannot complete this prompt”, or “as an AI language model,” the chances are that ChatGPT or another LLM wrote it! But this red flag is not always visible.
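A check for such tell-tale phrases is trivial to write. The phrase list below is a hypothetical, far-from-exhaustive example, and a negative result proves nothing:

```python
# Hypothetical, far-from-exhaustive list of tell-tale phrases.
LLM_RED_FLAGS = (
    "as an ai language model",
    "i cannot complete this prompt",
)

def has_llm_red_flag(text):
    """True if the text contains any red-flag phrase (case-insensitive)."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in LLM_RED_FLAGS)

print(has_llm_red_flag("As an AI language model, I cannot browse."))  # True
print(has_llm_red_flag("Biden dead. Harris acting president."))       # False
```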

If you suspect a text was written by a generative language model such as ChatGPT, you can pass the sequence of tokens through an LLM to calculate how “surprised” the LLM is to see that exact sequence. A computer-generated text would not appear very surprising to an LLM. The resulting number is the perplexity: the exponential of the average negative log-probability per token, which measures how unpredictable the text is to the model. Human-written texts tend to have higher perplexities. Universities are now using perplexity-based tools to check whether students’ essay submissions could have been written by ChatGPT.
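The perplexity calculation itself is short. The sketch below assumes the per-token probabilities have already been obtained from some language model; it does not call any particular LLM API, and the probability values are invented.

```python
import math

def perplexity(token_probs):
    """exp(mean negative log-probability) over the tokens of a text."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    if any(not 0.0 < p <= 1.0 for p in token_probs):
        raise ValueError("probabilities must lie in (0, 1]")
    mean_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(mean_nll)

# Hypothetical per-token probabilities for two short texts.
predictable = [0.9, 0.8, 0.95, 0.85]  # the model was rarely surprised
surprising = [0.2, 0.05, 0.3, 0.1]    # the model was often surprised
print(perplexity(predictable), perplexity(surprising))
```

As a sanity check, a model that spreads its probability evenly over k choices at every step has a perplexity of exactly k.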

I would also be interested to explore the possibility of using perplexity and an LLM to identify fake news with Relotius’ texts. Moreno remarked that the fake news articles were often formulaic: a protagonist with a backstory overcomes an emotive challenge. This is definitely something to explore. Watch this space!

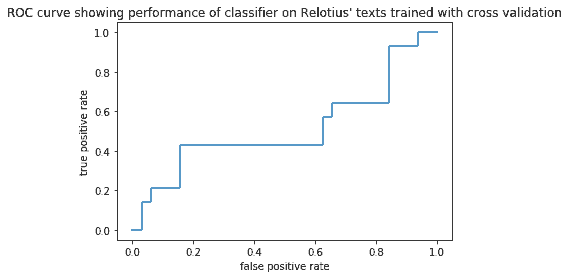

I used a multinomial Naive Bayes classifier with tf*idf scores and evaluated it using a ROC curve.

This ROC curve shows the performance of my Naive Bayes classifier under cross-validation when predicting unseen Relotius texts. A good classifier would have a curve close to the top left-hand corner.

The fact that the curve lies along the diagonal shows that my predictions were no better than tossing a coin. That means that if Relotius were still writing for Der Spiegel today, I would have no way of knowing whether his latest article was fictitious.
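For reference, the ROC curve and the area under it (AUC) can be computed directly from a classifier’s scores; a diagonal curve corresponds to an AUC of 0.5. Here is a minimal sketch, assuming binary labels (1 = fake, 0 = genuine) and one score per article. The example scores are invented.

```python
def roc_points(scores, labels):
    """(false positive rate, true positive rate) at every distinct threshold."""
    n_pos = sum(1 for y in labels if y == 1)
    n_neg = len(labels) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one positive and one negative example")
    points = [(0.0, 0.0)]
    for t in sorted(set(scores), reverse=True):
        # Predict "fake" for every article scoring at least t.
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y != 1)
        points.append((fp / n_neg, tp / n_pos))
    return points

def roc_auc(scores, labels):
    """Chance that a random fake article outscores a random genuine one (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y != 1]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

print(roc_auc([0.9, 0.8, 0.2, 0.1], [1, 1, 0, 0]))  # 1.0: perfect ranking
print(roc_auc([0.5, 0.5, 0.5, 0.5], [1, 0, 1, 0]))  # 0.5: no better than chance
```

The pairwise formulation of AUC gives the same value as the area under the step-shaped ROC curve, and it makes the coin-toss interpretation explicit.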

It appears that fake news, plagiarism and AI-generated content will remain a problem for the foreseeable future. Let’s hope that NLP fake news detection improves soon.

Interview with Juan Moreno after the Relotius affair (in German).

Juan Moreno, Tausend Zeilen Lüge: Das System Relotius und der deutsche Journalismus (A Thousand Lines of Lies: The Relotius System and German Journalism) (2019). Tells the story of the Relotius scandal and the growing problem of fake news in journalism.

Claas Relotius, Bürgerwehr gegen Flüchtlinge: Jaegers Grenze (Militia against refugees: Jaeger’s Border), and all other Claas Relotius texts, Der Spiegel (2018).

Philip Oltermann, The inside story of Germany’s biggest scandal since the Hitler diaries, The Guardian (2019).

Ralf Wiegand, Claas Relotius geht gegen Moreno-Buch vor (Claas Relotius takes action against Moreno’s book), Süddeutsche Zeitung (2019).

C.D. Manning, P. Raghavan and H. Schütze, Introduction to Information Retrieval, pp. 234-265 (2008). On multinomial Naive Bayes.
