Imagine that you work for a market research company, and you’ve just run an online survey. You’ve received 10,000 free text responses from users in different languages. You want to quickly make a pie chart or bar chart showing common customer complaints, broken down by old customers, new customers, different locations, different spending patterns, and demographics.

This might seem like a straightforward task - “just drag and drop your survey responses into ChatGPT” - but it’s a little more complex. This is a common problem in market research projects, where data is gathered in both numerical form (e.g. Likert scales) and free text (e.g. “What do you think could be improved about the service?”).

ChatGPT has a limit on the file sizes that it can process. If you were to upload an Excel file containing a large number of survey responses, you would also find that the model’s context window would start to cause problems: GPT may assign disproportionately high importance to the last responses in the list. There’s also the issue of reproducibility: if you did this multiple times in ChatGPT, you would get a different summary each time. Furthermore, GPT’s summary might exhibit semantic leakage and fail to accurately separate the effects of the different survey responses.

We recently did a market research analysis for a company in the finance space. We had an online survey with free text responses from existing customers of the company.

I will talk through how we approached the survey analysis, as I think this is a very useful technique for analysing unstructured survey data. I will use synthetic data from a fictional supermarket customer survey, which I have put in a public repository on GitHub here: https://github.com/harmonydata/harmony_examples/blob/main/tw_analyse_clusters_with_harmony.ipynb

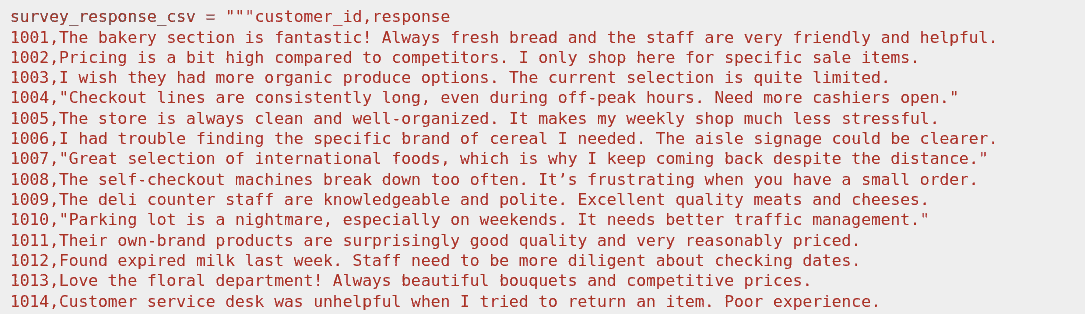

Above: the first few lines of the free text data from a customer survey for customers of a supermarket. This is synthetic data that I generated with Gemini for the purpose of this walkthrough.

The client company had already sanitised and preprocessed the texts before passing them to us, which makes our task a little easier: the responses had already been anonymised and translated to English if they were not originally in English. However, this analysis would still be possible on raw unprocessed text data.

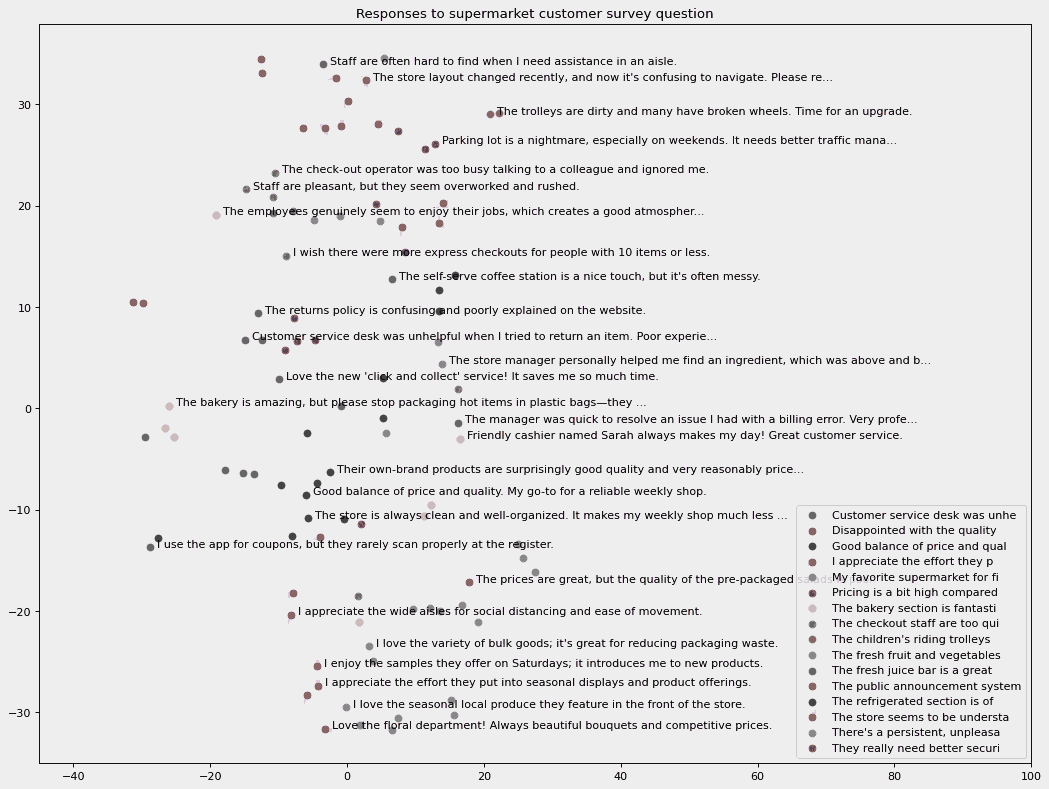

We used the Python library Harmony to identify clusters and topics in the input responses from the survey. Harmony converts all the text items to a vector representation. It then clusters them in multi-dimensional space and identifies the centroid of each cluster. (We have been involved in the development of the Harmony library; you can find its GitHub repository here: https://github.com/harmonydata/harmony )
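To make the idea concrete, here is a minimal sketch of "vectorise, cluster, find the text nearest each centroid". It is not Harmony's API or implementation: the function names (`embed`, `cluster`) are hypothetical, the "embedding" is a toy bag-of-words count rather than a real sentence embedding, and the clustering is a plain k-means with cosine similarity that starts from the first `k` texts.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for a sentence embedding: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as Counters."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def mean_vector(vectors):
    """Component-wise mean of a non-empty list of sparse vectors."""
    total = Counter()
    for v in vectors:
        total.update(v)
    return Counter({w: c / len(vectors) for w, c in total.items()})


def cluster(texts, k, iterations=10):
    """Return (labels, centre_texts) from a simple k-means over toy vectors."""
    vectors = [embed(t) for t in texts]
    # Assumption: the first k texts are used as starting centroids, which
    # keeps the sketch deterministic but is cruder than a real initialisation.
    centroids = vectors[:k]
    labels = [0] * len(texts)
    for _ in range(iterations):
        # Assign every response to its most similar centroid.
        labels = [
            max(range(k), key=lambda c: cosine(v, centroids[c])) for v in vectors
        ]
        # Move each centroid to the mean of the responses assigned to it.
        for c in range(k):
            members = [v for v, label in zip(vectors, labels) if label == c]
            if members:
                centroids[c] = mean_vector(members)
    # For each cluster, pick the response closest to the centroid.
    centre_texts = []
    for c in range(k):
        idx = [i for i, label in enumerate(labels) if label == c]
        if idx:
            best = max(idx, key=lambda i: cosine(vectors[i], centroids[c]))
            centre_texts.append(texts[best])
        else:
            centre_texts.append(None)
    return labels, centre_texts


responses = [
    "The queue at the checkout was too long",
    "Prices are too high for fresh vegetables",
    "Long checkout queue again today",
    "Vegetables prices are high",
]
labels, centre_texts = cluster(responses, k=2)
print(labels, centre_texts)
```

In a real analysis you would swap `embed` for a proper sentence embedding model, which is what lets responses with similar meaning but different wording land in the same cluster.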

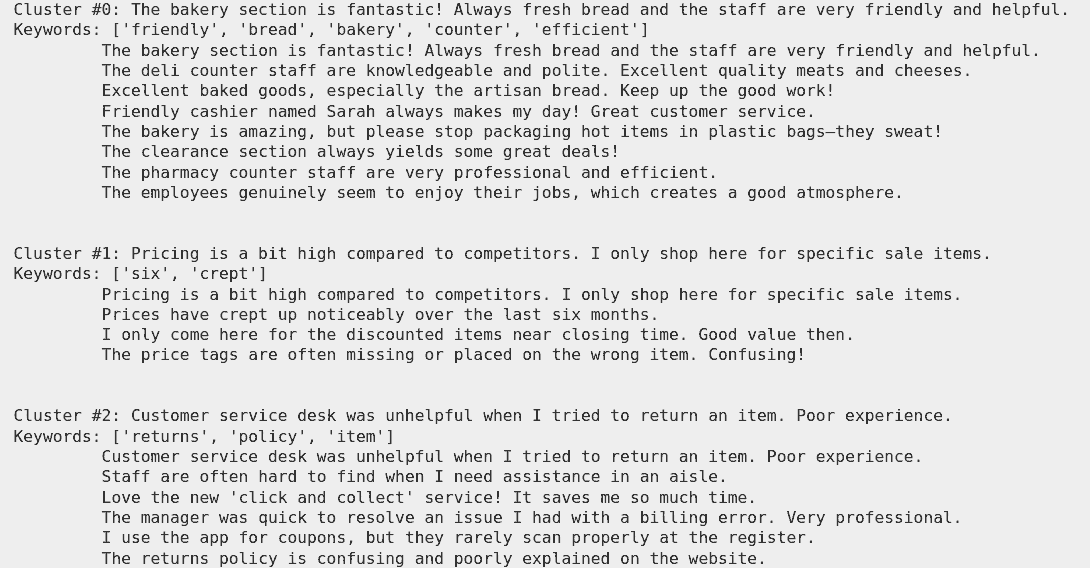

Each cluster is defined by the vectors and corresponding texts that it contains. However, we don’t yet have an accurate topic summary for each cluster.

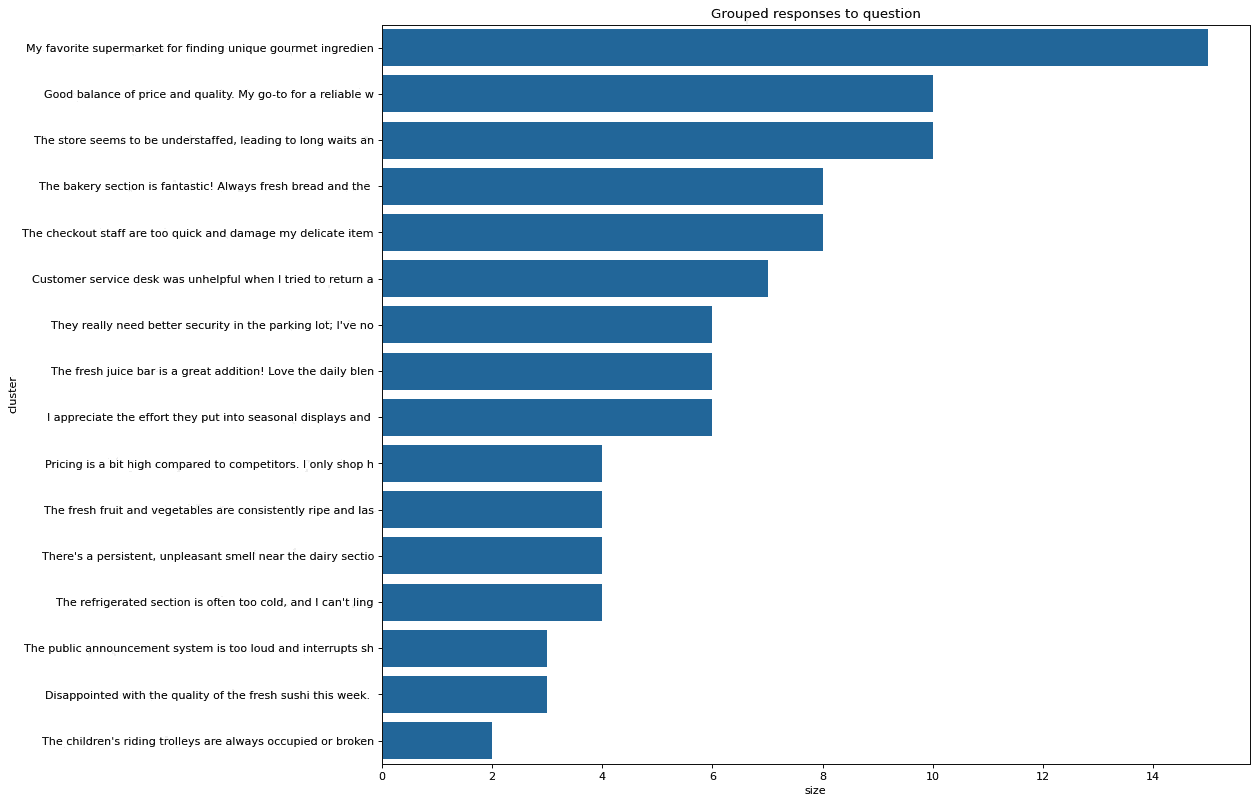

At this stage, it’s possible to plot a bar chart showing the size of each cluster and the text at the centroid of each cluster:
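As a rough illustration of the data behind such a chart, the sketch below counts how many responses fall in each cluster and pairs each count with the text at the cluster's centroid. The function names and the example labels are hypothetical, and the chart is drawn as plain text so the example has no plotting dependency; the same (label, count) pairs could be passed to any charting library.

```python
from collections import Counter


def cluster_sizes(labels, centre_texts):
    """Pair each cluster's centroid text with its size, largest first."""
    counts = Counter(labels)
    return [(centre_texts[c], n) for c, n in counts.most_common()]


def text_bar_chart(rows, width=40):
    """Render (name, count) rows as a horizontal text bar chart."""
    largest = max(n for _, n in rows)
    lines = []
    for name, n in rows:
        bar = "#" * max(1, round(width * n / largest))
        lines.append(f"{name[:30]:<30} {bar} {n}")
    return "\n".join(lines)


# Made-up cluster assignments for six responses and three clusters.
example_labels = [0, 1, 0, 0, 1, 2]
example_centres = ["Long queues", "High prices", "Rude staff"]
rows = cluster_sizes(example_labels, example_centres)
print(text_bar_chart(rows, width=6))
```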

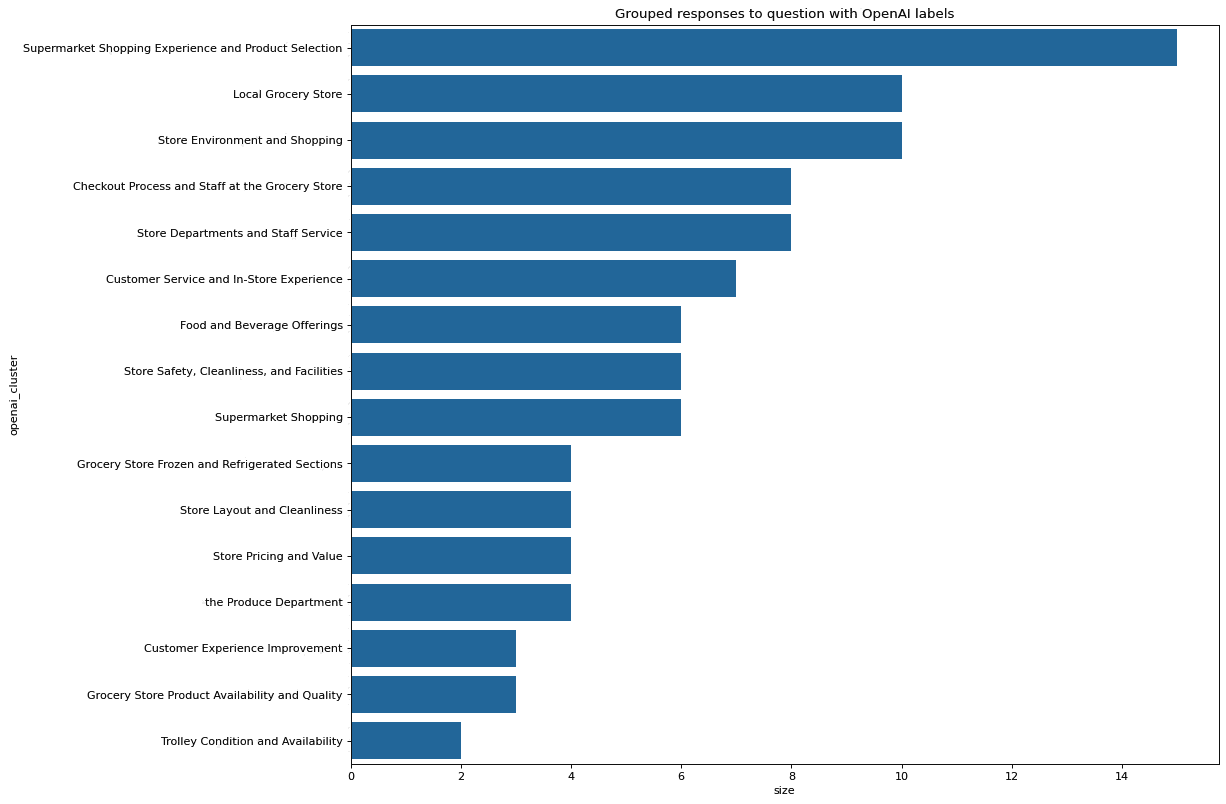

You can now take the clusters that were identified by Harmony and ask the OpenAI API to assign a topic header to each cluster. OpenAI seems to do this reasonably accurately.
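A minimal sketch of this step is shown below. It is an illustration rather than the exact code from our notebook: the prompt wording, the function names, the `max_examples` cap and the model name `gpt-4o-mini` are all assumptions. Sending only a capped sample of each cluster keeps the request small, and setting `temperature=0` makes the output more repeatable, although it does not guarantee identical results on every run.

```python
def build_topic_prompt(cluster_texts, max_examples=20):
    """Build a prompt asking for one short topic header for a cluster."""
    # Only a capped sample is sent, so large clusters stay within limits.
    sample = cluster_texts[:max_examples]
    bullet_list = "\n".join(f"- {t}" for t in sample)
    return (
        "The following survey responses were grouped into one cluster.\n"
        "Reply with a short topic header (at most five words) "
        "that describes them.\n\n"
        f"{bullet_list}"
    )


def name_cluster(cluster_texts, model="gpt-4o-mini"):
    """Ask the OpenAI API for a topic header (model name is an assumption)."""
    # Imported here so the prompt builder works without the package installed.
    from openai import OpenAI

    client = OpenAI()  # reads the OPENAI_API_KEY environment variable
    response = client.chat.completions.create(
        model=model,
        temperature=0,  # reduces, but does not eliminate, run-to-run variation
        messages=[
            {"role": "user", "content": build_topic_prompt(cluster_texts)}
        ],
    )
    return response.choices[0].message.content.strip()


prompt = build_topic_prompt(
    ["Checkout queue too long", "Waited 20 minutes to pay", "Not enough tills"],
    max_examples=2,
)
print(prompt)
```

Calling `name_cluster(texts)` once per cluster then gives you one header per bar in the chart.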

Below you can see the bar chart after we have used OpenAI to refine the cluster names:

If you prefer to use Python, you can use this example Jupyter notebook, which uses Harmony and OpenAI’s API to analyse the responses from an online customer survey for a fictional supermarket.

If you would like to work in R then Alex Nikic has prepared an R port of the same functionality here.
