Natural language processing (NLP) is the science of getting computers to talk to, or interact with, humans in human language. Examples of natural language processing include speech recognition, spell check, autocomplete, chatbots, and search engines.

Natural language processing has been around for years but is often taken for granted. Here are eight examples of applications of natural language processing which you may not know about. If you have a large amount of text data, don’t hesitate to hire an NLP consultant such as Fast Data Science.

When companies have large amounts of text documents (imagine a law firm’s case load, or regulatory documents in a pharma company), it can be tricky to get insights out of them.

For example, a pharmaceutical executive may want to know how many of the thousands of clinical trials the firm has run resulted in a particular side effect, when that information is buried in a stack of documents that nobody has time to read.

Natural language processing provides us with a set of tools to automate this kind of task.

Traditional Business Intelligence (BI) tools such as Power BI and Tableau allow analysts to get insights out of structured databases, showing them at a glance which team made the most sales in a given quarter, for example. But a lot of the data floating around companies is in an unstructured format such as PDF documents, and this is where tools like Power BI are of much less help.

A natural language processing expert is able to identify patterns in unstructured data. For example, topic modelling (clustering) can be used to find key themes in a document set, and named entity recognition could identify product names, personal names, or key places. Document classification can be used to automatically triage documents into categories.
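To give a flavour of document classification, here is a minimal multinomial Naive Bayes classifier in pure Python. It is a sketch under simplifying assumptions: the four training sentences and the two labels (pharmaceuticals and finance) are made up for illustration, and a real triage system would need far more labelled data and would more likely be built with a library such as scikit-learn.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


class NaiveBayesClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)
        self.word_counts = {label: Counter() for label in self.class_counts}
        for doc, label in zip(docs, labels):
            self.word_counts[label].update(tokenize(doc))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        return self

    def predict(self, doc):
        n_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, counts in self.word_counts.items():
            total = sum(counts.values())
            # Log prior plus the smoothed log likelihood of every token
            score = math.log(self.class_counts[label] / n_docs)
            for token in tokenize(doc):
                score += math.log((counts[token] + 1) / (total + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Invented training examples
train_docs = [
    "The clinical trial reported an adverse side effect in patients",
    "The drug dosage was adjusted after the phase II trial",
    "Quarterly revenue and profit exceeded analyst forecasts",
    "The bank raised interest rates and bond yields fell",
]
train_labels = ["pharmaceuticals", "pharmaceuticals", "finance", "finance"]

clf = NaiveBayesClassifier().fit(train_docs, train_labels)
print(clf.predict("Patients in the trial received a lower dosage"))  # pharmaceuticals
print(clf.predict("Profit and revenue fell as interest rates rose"))  # finance
```

Naive Bayes is a common first baseline for triage because it trains instantly and its decisions can be traced back to individual word counts.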

Natural language processing can be used for topic modelling, where a corpus of unstructured text can be converted to a set of topics. Key topic modelling algorithms include k-means and Latent Dirichlet Allocation. You can read more about k-means and Latent Dirichlet Allocation in my review of the 26 most important data science concepts.
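k-means can be sketched in a few lines of pure Python. This version represents each document as a unit-length word-count vector and measures closeness with cosine similarity. The seeding (using the first k documents as the initial centroids) and the example sentences are simplifying assumptions to keep the output deterministic; libraries usually use smarter seeding such as k-means++. Latent Dirichlet Allocation is a different, probabilistic model and is not shown here.

```python
import math
import re
from collections import Counter


def vectorize(text):
    """Unit-length bag-of-words vector, stored as a dict of word -> weight."""
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    norm = math.sqrt(sum(v * v for v in counts.values()))
    return {word: v / norm for word, v in counts.items()}


def cosine(a, b):
    # Both vectors have unit length, so their dot product is the cosine similarity
    return sum(weight * b.get(word, 0.0) for word, weight in a.items())


def centroid(vectors):
    """Average direction of a cluster: sum the vectors, then rescale to unit length."""
    total = Counter()
    for vec in vectors:
        total.update(vec)
    norm = math.sqrt(sum(v * v for v in total.values()))
    return {word: v / norm for word, v in total.items()}


def kmeans(docs, k, iterations=10):
    vectors = [vectorize(doc) for doc in docs]
    centroids = vectors[:k]  # naive seeding: the first k documents
    assignment = []
    for _ in range(iterations):
        # Assignment step: each document joins its most similar centroid
        new_assignment = [
            max(range(k), key=lambda c: cosine(vec, centroids[c])) for vec in vectors
        ]
        if new_assignment == assignment:
            break  # no document changed cluster, so we have converged
        assignment = new_assignment
        # Update step: recompute each centroid from its current members
        for c in range(k):
            members = [vec for vec, label in zip(vectors, assignment) if label == c]
            if members:
                centroids[c] = centroid(members)
    return assignment


docs = [
    "patients reported side effects in the clinical trial",
    "share prices fell as interest rates rose",
    "the trial recorded side effects in elderly patients",
    "interest rates and share prices dominate the markets",
]
print(kmeans(docs, 2))  # [0, 1, 0, 1]
```

Each cluster number then stands for a theme (here, clinical trials versus markets), which an analyst would name by looking at the highest-weighted words in each centroid.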

I often use an open-source library such as Apache Tika, which can convert PDF documents into plain text, and then train natural language processing models on the plain text. However, even after the PDF-to-text conversion, the text is often messy: page numbers and headers are mixed into the document, and formatting information is lost.
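Much of that clean-up can be done with simple heuristics. The sketch below is my own illustration, not part of Tika. It assumes pages are separated by form-feed characters (`\f`), as some PDF-to-text tools emit (check what your converter actually produces). It drops lines that are bare page numbers, and treats any line repeated on at least `min_repeats` pages as a running header or footer. That threshold is a judgement call and can remove genuinely repeated text. The hypothetical function name `clean_pdf_text` and the sample document are invented.

```python
import re
from collections import Counter


def clean_pdf_text(raw, min_repeats=2):
    pages = [page.splitlines() for page in raw.split("\f")]
    # Count on how many pages each distinct (stripped) line appears
    line_pages = Counter()
    for lines in pages:
        line_pages.update({line.strip() for line in lines if line.strip()})
    cleaned = []
    for lines in pages:
        for line in lines:
            text = line.strip()
            if not text:
                continue
            if re.fullmatch(r"(page\s+)?\d+(\s+of\s+\d+)?", text, flags=re.IGNORECASE):
                continue  # bare page number such as "12" or "Page 3 of 10"
            if line_pages[text] >= min_repeats:
                continue  # probably a running header or footer
            cleaned.append(text)
    return "\n".join(cleaned)


raw = ("ACME Pharma Confidential\nThe trial enrolled 200 patients.\n1\f"
       "ACME Pharma Confidential\nNo serious adverse events were seen.\nPage 2 of 2")
print(clean_pdf_text(raw))
```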


Spelling and grammar checkers are now commonplace and help us to fill out web forms correctly, and avoid typos. In fact, when I type on a mobile phone screen I find that the spell checker probably corrects most of the words!

You would think that writing a spellchecker is as simple as assembling a list of all allowed words in a language, but the problem is far more complex than that. How can such a system distinguish between their, there and they’re? Nowadays the more sophisticated spellcheckers use neural networks to check that the correct homophone is used. Also, for languages with more complex morphologies than English, spellchecking can become very computationally intensive.
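To see why a word list alone is not enough, here is a sketch of a classic dictionary-based corrector in the spirit of Peter Norvig's well-known spelling corrector: it generates every string one edit away from an unknown word and picks the most frequent dictionary word among them. The word frequencies are invented for the example. Note that it happily accepts there in place of their, because both are valid words.

```python
# Invented word frequencies standing in for counts from a large corpus
WORD_FREQ = {"the": 5000, "is": 3000, "there": 800, "their": 500,
             "they're": 300, "car": 200, "house": 120, "horse": 90}
LETTERS = "abcdefghijklmnopqrstuvwxyz'"


def edits1(word):
    """All strings one deletion, transposition, replacement or insertion away."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [left + right[1:] for left, right in splits if right]
    transposes = [left + right[1] + right[0] + right[2:]
                  for left, right in splits if len(right) > 1]
    replaces = [left + c + right[1:] for left, right in splits if right for c in LETTERS]
    inserts = [left + c + right for left, right in splits for c in LETTERS]
    return set(deletes + transposes + replaces + inserts)


def correct(word):
    word = word.lower()
    if word in WORD_FREQ:
        return word  # any dictionary word is accepted, even the wrong homophone
    candidates = [w for w in edits1(word) if w in WORD_FREQ]
    return max(candidates, key=WORD_FREQ.get) if candidates else word


print(correct("hosue"))  # house
print(correct("thier"))  # their
print(correct("there"))  # there (accepted, even if the writer meant "their")
```

Catching the wrong homophone requires looking at the surrounding words, which is where context-aware models such as neural networks come in.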

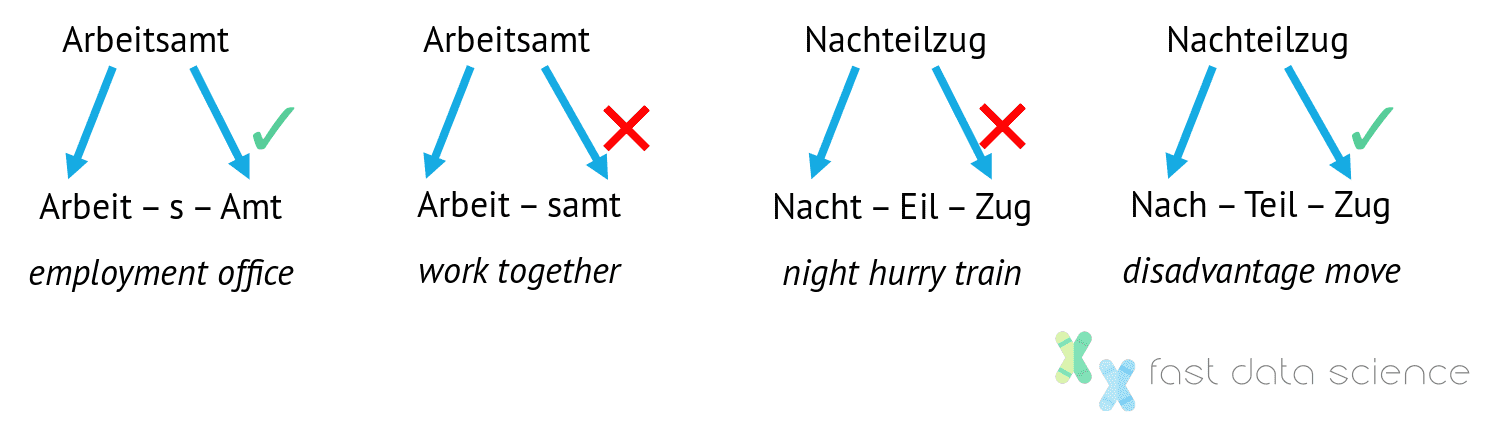

As an example of a non-English-specific problem in natural language processing, a German spellchecker must deal with the problem of Kompositazerlegung: splitting compound words into their constituent parts. Sometimes there is more than one valid splitting, although only one makes sense to a human reader. Open-source software such as LibreOffice can perform this task using the library Hunspell, which was first developed for Hungarian, a language with a very complex morphology.
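The core of compound splitting can be sketched as a search for every way of cutting a word into dictionary entries. This is my own simplified illustration, not how Hunspell works internally: it uses a four-word toy lexicon and ignores linking elements such as the -s- in Arbeitsamt. The classic example Staubecken (reservoir) can be read as Stau + Becken (dam + basin) or as Staub + Ecken (dust + corners).

```python
from functools import lru_cache

# Toy lexicon; a real splitter uses a full dictionary plus morphological rules
LEXICON = {"stau", "staub", "becken", "ecken"}


def splits(word):
    """Return every segmentation of `word` into lexicon entries."""
    word = word.lower()

    @lru_cache(maxsize=None)
    def from_index(i):
        if i == len(word):
            return [[]]  # one way to split the empty remainder: no parts
        results = []
        for j in range(i + 1, len(word) + 1):
            part = word[i:j]
            if part in LEXICON:
                for rest in from_index(j):
                    results.append([part] + rest)
        return results

    return from_index(0)


print(splits("Staubecken"))  # [['stau', 'becken'], ['staub', 'ecken']]
```

Both segmentations are valid, so a real spellchecker or search engine still needs word frequencies or context to decide which reading a human would choose.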

There has recently been a lot of hype about transformer models, which are the latest iteration of neural networks. Transformers are able to represent the grammar of natural language in an extremely deep and sophisticated way and have improved the performance of document classification, text generation and question answering systems. The best known of these models are BERT, GPT-2 and GPT-3.

Fast Data Science - London

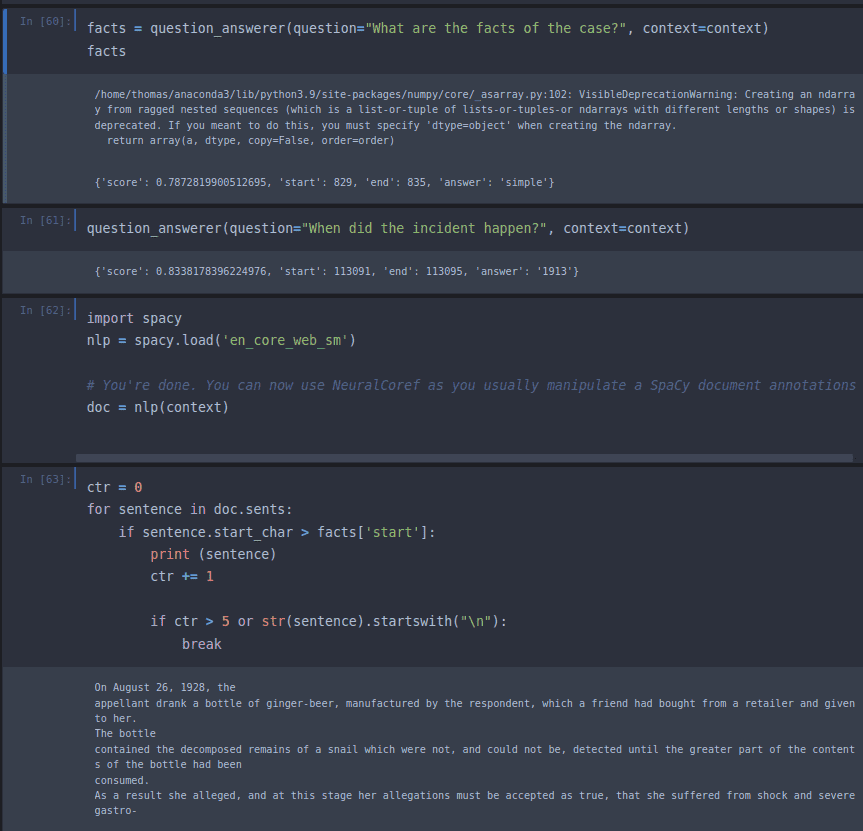

The easiest way to get started with BERT is to install Hugging Face’s Transformers library. Below you can see my experiment retrieving the facts of the Donoghue v Stevenson (“snail in a bottle”) case, a landmark decision in English tort law which laid the foundation for the modern doctrine of negligence. You can see that BERT was quite easily able to retrieve the facts (On August 26th, 1928, the Appellant drank a bottle of ginger beer, manufactured by the Respondent…). Although impressive, at present the sophistication of BERT is limited to finding the relevant passage of text.

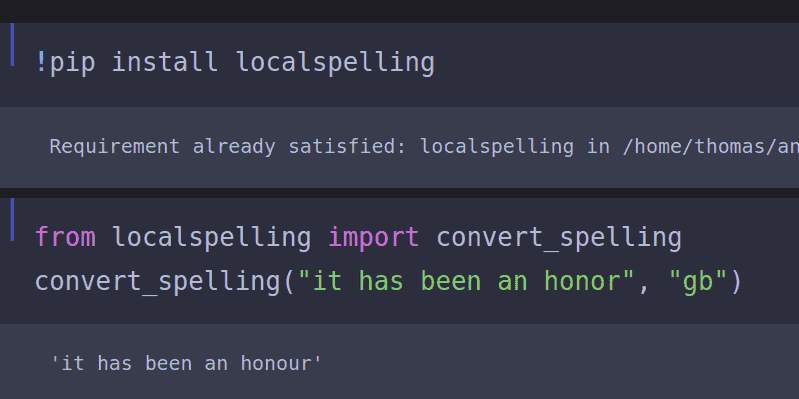

One problem I encounter again and again is running natural language processing algorithms on document corpora or lists of survey responses which contain a mixture of American and British spelling, or are full of common spelling mistakes. One annoying consequence of not normalising spelling is that words like normalising/normalizing tend not to be picked up as high-frequency words, because their occurrences are split between the variants. For that reason we often have to use spelling and grammar normalisation tools.
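The frequency-splitting effect is easy to reproduce. In this sketch the American-to-British mapping is a hypothetical three-entry table and the survey responses are invented; a real normalisation tool needs thousands of entries and has to handle context.

```python
import re
from collections import Counter

# Hypothetical American -> British mapping; real tools need thousands of entries
US_TO_UK = {"normalizing": "normalising", "color": "colour", "analyze": "analyse"}


def word_counts(text, normalise=False):
    tokens = re.findall(r"[a-z]+", text.lower())
    if normalise:
        tokens = [US_TO_UK.get(token, token) for token in tokens]
    return Counter(tokens)


responses = ["normalising the text", "normalizing text", "normalising spelling",
             "text cleaning", "normalizing the survey"]
corpus = " ".join(responses)
print(word_counts(corpus).most_common(1))                  # [('text', 3)]
print(word_counts(corpus, normalise=True).most_common(1))  # [('normalising', 4)]
```

Without normalisation, each spelling of normalising appears only twice and "text" looks like the dominant word; once the variants are merged, normalising comes out on top.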

After this problem appeared in so many of my projects, I wrote my own Python package called localspelling which allows a user to convert all text in a document to British or American, or to detect which variant is used in the document.

Although spelling normalisation may seem unimportant, the BBC reported in 2022 that spelling mistakes are costing the UK millions of pounds in lost revenue, and that a single spelling mistake on a website can halve its conversion rate. Unbeleivable!

Given a text in an unknown language, it’s surprisingly easy for natural language processing to identify the language. There are two main approaches to language identification:

An NLP system can look for stopwords (small function words such as the, at, in) in a text, and compare with a list of known stopwords for many languages. The language with the most stopwords in the unknown text is identified as the language. So a document with many occurrences of le and la is likely to be French, for example.
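A minimal sketch of the stopword approach follows. The stopword lists are short, hand-picked excerpts (real systems use much longer lists), and ties simply go to whichever language is listed first.

```python
import re

# Short, hand-picked stopword excerpts for three languages
STOPWORDS = {
    "en": {"the", "and", "of", "to", "in", "is", "at", "a"},
    "fr": {"le", "la", "les", "et", "de", "des", "un", "une", "est"},
    "de": {"der", "die", "das", "und", "ist", "ein", "eine", "nicht"},
}


def identify_language(text):
    tokens = re.findall(r"\w+", text.lower())
    # Score each language by how many tokens appear in its stopword list
    scores = {lang: sum(token in words for token in tokens)
              for lang, words in STOPWORDS.items()}
    return max(scores, key=scores.get)


print(identify_language("Le chat est sur la table et le chien dort"))  # fr
print(identify_language("Der Hund und die Katze sind nicht hier"))     # de
print(identify_language("The cat is sitting on the mat"))              # en
```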

A slightly more sophisticated technique for language identification is to assemble a list of N-grams, which are sequences of characters which have a characteristic frequency in each language. For example, the combination ch is common in English, Dutch, Spanish, German, French, and other languages.

But the combination sch is common only in German and Dutch, and eau is common as a three-letter sequence in French. Likewise, while East Asian scripts may look similar to the untrained eye, the commonest character in Japanese is の and the commonest character in Chinese is 的, both corresponding to the English ’s suffix.

By counting the one-, two- and three-letter sequences in a text (unigrams, bigrams and trigrams), a language can be identified from just a few sentences.
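The sketch below illustrates the N-gram approach using character trigrams only. It builds a trigram frequency profile for each language from a one-sentence training sample, then picks the language whose profile has the highest cosine similarity with the input. The training sentences are invented and far too short for real use; practical detectors build profiles from large corpora and typically combine several N-gram lengths.

```python
import math
import re
from collections import Counter


def trigram_profile(text):
    """Count character trigrams, padding with spaces so word edges are captured."""
    text = " " + re.sub(r"\s+", " ", text.lower().strip()) + " "
    return Counter(text[i:i + 3] for i in range(len(text) - 2))


def cosine(p, q):
    dot = sum(count * q[gram] for gram, count in p.items())
    norm = (math.sqrt(sum(v * v for v in p.values()))
            * math.sqrt(sum(v * v for v in q.values())))
    return dot / norm if norm else 0.0


# Tiny invented training samples, one sentence per language
SAMPLES = {
    "en": "the cat sat on the mat with the other cats and the dog",
    "fr": "le chat est sur la table avec les autres chats et le chien",
    "de": "die Katze sitzt auf dem Tisch mit den anderen Katzen und der Hund",
}
PROFILES = {lang: trigram_profile(sample) for lang, sample in SAMPLES.items()}


def detect(text):
    profile = trigram_profile(text)
    return max(PROFILES, key=lambda lang: cosine(profile, PROFILES[lang]))


print(detect("the dog and the cat"))  # en
print(detect("le chat et le chien"))  # fr
```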

One ready-made language detector is the JavaScript “Efficient Language Detector” (`eld`) library, available at: https://github.com/nitotm/efficient-language-detector-js

As an extension of the above problem, sometimes a text appears with an unknown author and we want to know who wrote it.

Examples include novels written under a pseudonym, such as JK Rowling’s detective series written under the pen-name Robert Galbraith, or the pseudonymous Italian author Elena Ferrante. In politics we have the anonymous New York Times op-ed I Am Part of the Resistance Inside the Trump Administration, which sparked a witch-hunt for its author, and the open question about who penned Dominic Cummings’ rose garden statement.

The excellent linguistics YouTuber Joshua R has walked through a qualitative analysis of a French message written by one of the Bataclan terrorists in 2015, in which he identifies key demographic information about the author (education level, cultural upbringing, etc.).

The science of identifying the authors of unknown texts is called forensic stylometry. Every author has a characteristic fingerprint in their writing style, even when we are dealing with word-processed documents and no handwriting is available.
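One common ingredient of that fingerprint is how often an author uses small function words. The sketch below is a deliberately simplified version of the idea, not Burrows' Delta and not the system behind my demo: it compares relative frequencies of ten function words using Manhattan distance. The known texts and the unknown text are invented, and real stylometry needs hundreds of function words and much longer samples.

```python
import re

FUNCTION_WORDS = ["the", "and", "of", "to", "a", "in", "that", "it", "but", "which"]


def profile(text):
    """Relative frequency of each function word in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    total = len(tokens) or 1
    return [tokens.count(word) / total for word in FUNCTION_WORDS]


def distance(p, q):
    # Manhattan distance between two frequency profiles
    return sum(abs(a - b) for a, b in zip(p, q))


def attribute(unknown, known_texts):
    """Return the candidate author whose profile is closest to the unknown text."""
    target = profile(unknown)
    return min(known_texts, key=lambda author: distance(target, profile(known_texts[author])))


known = {
    "Author A": "The nature of the problem, which is of interest to the reader, "
                "is that of the method which we use.",
    "Author B": "It was late and it was cold, but we went out and it rained, "
                "but we did not mind it.",
}
unknown = "The shape of the argument, which the reader will note, is one of the oldest forms of the essay."
print(attribute(unknown, known))  # Author A
```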

You can read more about forensic stylometry in my earlier blog post on the topic, and you can also try out a live demo of an author identification system on the site.

Although forensic stylometry can be viewed as a qualitative discipline and is used by academics in the humanities for problems such as unknown Latin or Greek texts, it is also an interesting example application of natural language processing.

We are past the days when machine translation systems were notorious for turning text such as “The spirit is willing but the flesh is weak” into “The vodka is good but the meat is rotten.” (Although the Economist reliably informs me that this story is apocryphal.)

Today, Google Translate covers an astonishing array of languages, handling most of them with statistical models trained on enormous corpora of text, even for language pairs with little or no directly translated text. Transformer models have allowed tech giants to develop translation systems trained solely on monolingual text.

In 2022, Meta announced the creation of a single AI model capable of translating across 200 different languages, democratising access to natural language processing for less widely spoken languages such as Twi (Ghana), which were previously not supported by NLP tools.

The monolingual based approach is also far more scalable, as Facebook’s models are able to translate from Thai to Lao or Nepali to Assamese as easily as they would translate between those languages and English. As the number of supported languages increases, the number of language pairs would become unmanageable if each language pair had to be developed and maintained. Earlier iterations of machine translation models tended to underperform when not translating to or from English.
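The arithmetic behind that scaling problem is simple: with n languages there are n(n - 1) translation directions, so the number of separate per-direction systems grows quadratically, whereas a single multilingual model covers them all.

```python
def directed_pairs(n):
    """Translation directions if every ordered (source, target) pair needs its own model."""
    return n * (n - 1)


for n in (10, 50, 200):
    print(n, "languages:", directed_pairs(n), "directions")
# 10 languages: 90 directions
# 50 languages: 2450 directions
# 200 languages: 39800 directions
```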

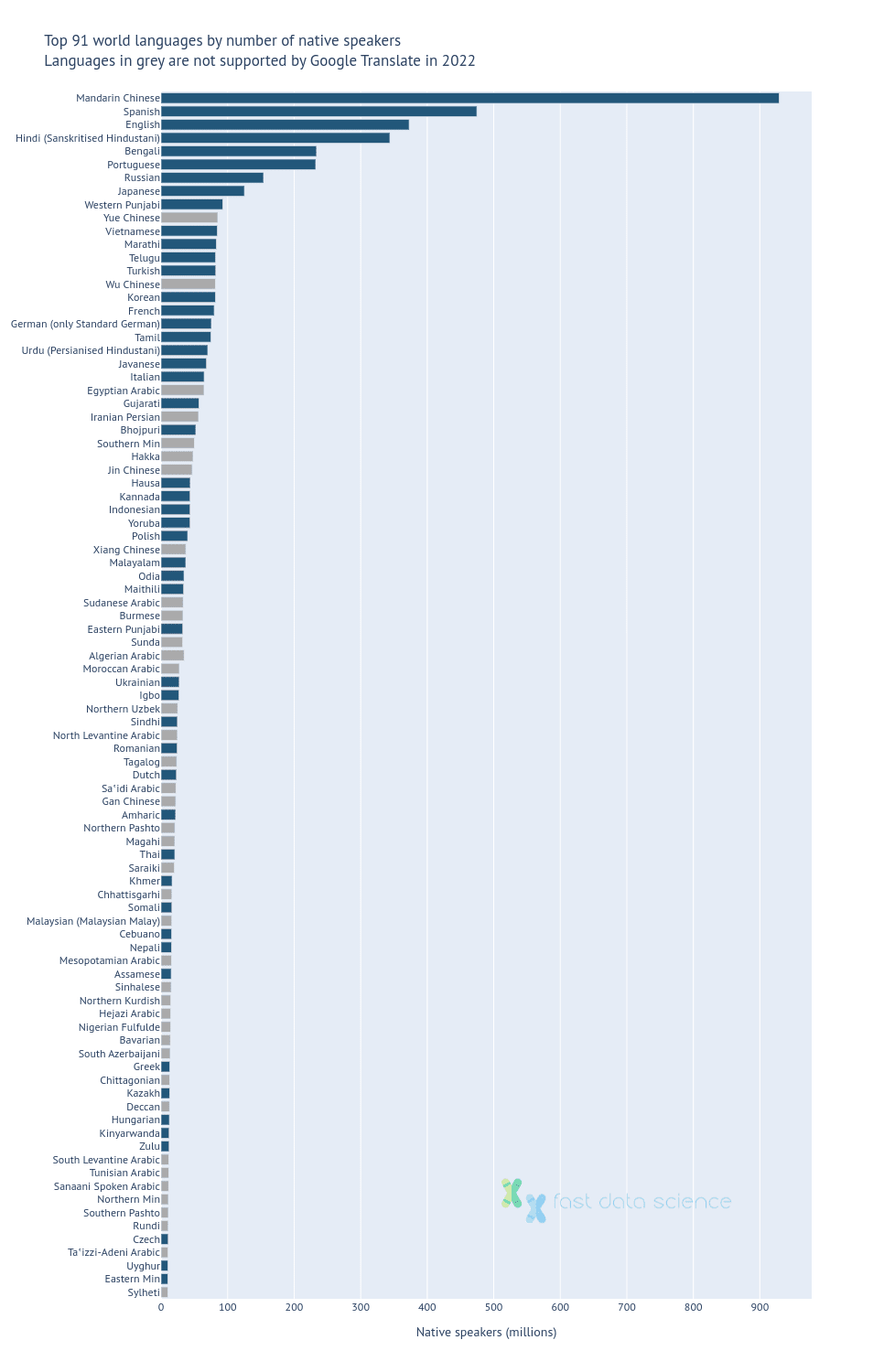

However, there is still a lot of work to be done to improve coverage of the world’s languages. Facebook estimates that more than 20% of the world’s population is still not covered by commercial translation technology. In general, coverage is very good for major world languages, with some outliers (notably Yue and Wu Chinese, sometimes known as Cantonese and Shanghainese).

Top 91 languages showing Google Translate coverage. Data source: Ethnologue (2022, 25th edition), Google Translate homepage.

Many of the unsupported languages are languages with many speakers but non-official status, such as the many spoken varieties of Arabic.

Interestingly, the Bible has been translated into more than 6,000 languages and is often the first book published in a new language.

Sentiment analysis is an example of how natural language processing can be used to identify the subjective content of a text. This is naturally very useful for companies that want to monitor social media traffic regarding their brands and competitor brands or key topics, and also to monitor the sentiment of dialogue between users and chatbots or customer support agents. Sentiment analysis has been used in finance to identify emerging trends which can indicate profitable trades.
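The simplest form of sentiment analysis scores a text against a lexicon of positive and negative words. The sketch below uses a tiny invented lexicon and a crude negation rule (flip the score of a word preceded by not, never or no). It is meant only to illustrate the idea; real lexicons such as VADER's contain thousands of entries, and modern systems mostly use trained classifiers, including transformer models.

```python
import re

# Tiny invented lexicon: positive words score above zero, negative words below
LEXICON = {"good": 1, "great": 2, "love": 2, "excellent": 2,
           "bad": -1, "terrible": -2, "slow": -1, "hate": -2}
NEGATORS = {"not", "never", "no"}


def sentiment(text):
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)
        # Flip the polarity if the previous word is a negator, e.g. "not good"
        if i > 0 and tokens[i - 1] in NEGATORS:
            value = -value
        score += value
    return score


print(sentiment("I love this brand, the support was great"))   # 4
print(sentiment("Delivery was slow and the app is not good"))  # -2
```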

For more examples of how this area of natural language processing can be applied in your business please check out my blog post on trends in sentiment analysis which includes an interactive demo of a sentiment analysis tool and shows how sentiment analysis technology has progressed from the 1970s up to today.

Natural language processing can rapidly transform a business. Businesses in industries such as pharmaceuticals, legal, insurance, and scientific research can leverage the huge amounts of data which they have siloed, in order to overtake the competition.

Natural language processing can be used to improve customer experience in the form of chatbots and systems for triaging incoming sales enquiries and customer support requests.

For further examples of how natural language processing can improve your organisation’s efficiency and profitability, please don’t hesitate to contact Fast Data Science.

SIL International, Ethnologue: Languages of the World (2022, 25th edition)

The Economist, A Gift of Tongues (2009)

NLLB Team, Scaling neural machine translation to 200 languages, Nature 630 (8018): 841 (2024)
