What is Natural Language Processing and where is NLP heading over the next few years?

By the UK-based NLP consulting company Fast Data Science.

Natural Language Processing is the technology that lets us talk to our phones, watches or smart speakers and have them respond. At its most basic, natural language processing is how computer programs make sense of words and their surrounding context. For example, you could write a computer program to pick up on sarcasm such as “that’s funny… not”, or to understand “The world will end!” as an exclamation and “The world will end?” as a question. But machines cannot read and interpret language innately, as humans do. So how can a machine detect sarcasm, tell whether a sentence is a question, or even just find the main topic and recurring themes in a text? The answer is Natural Language Processing (NLP).

You may not know it, but NLP has quietly woven its way into the fabric of our lives, in areas such as email filters, predictive text and translation software like Google Translate. All of these rely on a machine’s ability to understand words and textual features, and whilst this is commonplace today, NLP has come a long way to get here.

The skilled use of language is a major part of what makes us human, and for this reason, the desire for computers to understand and speak our language has been around since they were first conceived. The earliest Natural Language Processing programs were primarily rule-based, where experts would encode hundreds of rules mapping what a user might say to how the program should reply.

A famous example in the history of natural language processing was ELIZA, created in the mid-1960s at MIT.1 ELIZA was a chatbot that took on the role of a therapist and used basic syntactic rules to identify content in written exchanges. Sometimes the results were fairly convincing, but other times it would make simple and even comical mistakes.

![Screenshot of a conversation with the ELIZA application.](https://fastdatascience.com/images/ELIZA_conversation.jpg)

A screenshot of a conversation with ELIZA, an early natural language processing system which mimicked a psychotherapist. Image is in the public domain.
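To make the idea of hand-written rules concrete, here is a minimal Python sketch of an ELIZA-style responder. It assumes a handful of made-up rules: `RULES`, `REFLECTIONS`, `reflect` and `respond` are hypothetical names, and the rules are illustrative only, not ELIZA’s original script. Each rule pairs a regular expression with a reply template, and the matched phrase is echoed back with its pronouns swapped.

```python
import re

# Hypothetical ELIZA-style rules: each pairs a regular expression with a reply
# template. These are illustrative only, not ELIZA's original script.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]
FALLBACK = "Please go on."

# Swap first- and second-person words so an echoed phrase reads naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    """Lowercase a captured phrase, drop trailing punctuation and swap pronouns."""
    words = fragment.lower().rstrip(".!?").split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(user_input: str) -> str:
    """Return the reply for the first matching rule, or a stock fallback."""
    for pattern, template in RULES:
        match = pattern.search(user_input)
        if match:
            return template.format(*(reflect(group) for group in match.groups()))
    return FALLBACK


print(respond("I need a holiday."))      # Why do you need a holiday?
print(respond("My mother never calls"))  # Tell me more about your mother.
```

Anything outside the rules falls through to the stock reply, and the pronoun swapping is purely mechanical, which is why systems like this could seem convincing one moment and comical the next.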

Over the last 50 years, chatbots and more advanced dialogue systems have come a long way. Modern approaches are based on machine learning, where chatbots are trained on gigabytes of real human-to-human conversations. Today, the technology is widely used in customer service, where chatbots that have learned from millions of example conversations can answer many common queries and resolve a large share of customer issues.

One of the more recent demonstrations of NLP progress came when engineers at Facebook had chatbots talk to one another. In this experiment, the chatbots quickly began to create and evolve their own language.2 Whilst the experiment attracted a lot of negative press, the computers were simply crafting a simplified protocol to communicate with one another: it wasn’t evil, just efficient!

Another side of the history of natural language processing is speech recognition, a key focus of research for many decades. Bell Labs debuted the first speech recognition system in 1952: the Automatic Digit Recognition machine, or “Audrey” for short.3 This machine could recognise all ten digits, provided they were spoken slowly enough. The project didn’t go anywhere, since it was much faster to dial telephone numbers with a finger.

Unfortunately, progress was slow over the next three decades. In 1962, IBM showed off a shoebox-sized machine capable of recognising a monumental sixteen words (!), whilst in 1971 DARPA (an R&D agency of the United States Department of Defense) kicked off an ambitious five-year funding initiative that led to the development of the HARPY Speech Recognition System at Carnegie Mellon University. HARPY was the first system that could recognise over 1,000 words, but the computers of the ’70s took around ten times as long to transcribe speech as the speaker took to say it.4


Fortunately, thanks to huge advances in computing performance in the ’80s and ’90s, continuous, real-time speech recognition moved much closer to reality. Meanwhile, innovation in algorithms for processing natural language meant that systems could move away from time-consuming, unwieldy handcrafted rules towards machine learning techniques that learn automatically from existing datasets.

The basic foundation of NLP is built on calculations, and if there’s one thing machines do very well, it’s calculations. Calculations on words and textual features are what allow machines to determine whether a piece of text contains sarcasm, to distinguish negative sentiment from positive, and to decide whether a text contains more rhetoric than factual statements.
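As a rough illustration of such calculations, the sketch below scores sentiment by counting words from two tiny hand-picked lexicons. The word lists and the `sentiment_score` function are hypothetical; real systems use far larger lexicons or trained models, and a naive count like this would not catch the sarcasm in “that’s funny… not”.

```python
import re

# Tiny hypothetical sentiment lexicons; real systems use thousands of entries
# or learn word weights from labelled data.
POSITIVE = {"good", "great", "excellent", "love", "happy", "wonderful"}
NEGATIVE = {"bad", "terrible", "awful", "hate", "sad", "boring"}


def sentiment_score(text: str) -> float:
    """Return (positive words - negative words) / total words, a value in [-1, 1]."""
    tokens = re.findall(r"[a-z']+", text.lower())
    if not tokens:
        return 0.0
    positive = sum(token in POSITIVE for token in tokens)
    negative = sum(token in NEGATIVE for token in tokens)
    return (positive - negative) / len(tokens)


print(sentiment_score("I love this great film"))       # 0.4
print(sentiment_score("What an awful, terrible day"))  # -0.4
```

Dividing by the number of words keeps long and short texts comparable; the limitations of pure word counting are one reason the field moved towards learning from data.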

![Code from a rule-based natural language processing program, typical of NLP before deep learning took over.](https://fastdatascience.com/images/natural-language-processing-program-min.png)

The basics of natural language processing: an excerpt from a rule-based natural language processing program, showing a list of English stopwords to be removed from the input. This is typical of first-generation approaches to NLP, before deep learning took over.

The first step in NLP is cleaning the raw text the computer has to work with and then organising it into a structured format, such as a table. After this, the machine counts the frequency of words, takes into account the surrounding context, and then performs its calculations to “solve the problem”. This “processing” is the heart of natural language processing: without it, you are left with just natural language, which machines cannot easily interpret the way humans can.
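A minimal sketch of those first steps in Python, assuming a tiny hand-written stopword list (real lists, such as the one distributed with NLTK, are much longer): clean the text, drop stopwords, count word frequencies and arrange the counts in a table with one row per document and one column per word. The function names `clean` and `term_table` are illustrative, not from any particular library.

```python
import re
from collections import Counter

# A small illustrative stopword list; real lists (e.g. NLTK's) are much longer.
STOPWORDS = {"the", "a", "an", "and", "is", "it", "of", "to", "in", "on", "was", "i"}


def clean(text: str) -> list[str]:
    """Lowercase the text, keep only alphabetic words and drop stopwords."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return [token for token in tokens if token not in STOPWORDS]


def term_table(documents: list[str]) -> tuple[list[str], list[list[int]]]:
    """Return the vocabulary and a document-term table of word counts."""
    counts = [Counter(clean(doc)) for doc in documents]
    vocabulary = sorted(set().union(*counts))
    rows = [[count[word] for word in vocabulary] for count in counts]
    return vocabulary, rows


vocab, rows = term_table(["The cat sat on the mat.", "The dog chased the cat!"])
print(vocab)  # ['cat', 'chased', 'dog', 'mat', 'sat']
print(rows)   # [[1, 0, 0, 1, 1], [1, 1, 1, 0, 0]]
```

Each row is a simple numeric representation of a document that later calculations can work with. scikit-learn’s `CountVectorizer` does essentially the same job at scale.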

Today, NLP-based speech and text recognition systems can be found in countless everyday applications and consumer software.

Natural Language Processing is a key component of any voice recognition, speech synthesis or question-answering program, such as Siri or Alexa, where you can speak to a computer and it understands what you’re saying and returns relevant responses.

The models behind these assistants are trained on large, highly diverse datasets, allowing Siri and Alexa to recognise a variety of accents and languages. Combine the availability of huge amounts of data with the extremely powerful hardware of the 21st century, and it’s no wonder the error rates of speech recognition software have fallen below 10%.
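Speech recognition accuracy is commonly reported as word error rate (WER): the minimum number of word substitutions, deletions and insertions needed to turn the system’s transcript into a reference transcript, divided by the number of words in the reference. The article does not say which metric its figure refers to, so treat this as an illustrative sketch; `word_error_rate` is a hypothetical helper built on a standard edit-distance table over words.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance between two transcripts, divided by reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("the reference transcript must contain at least one word")
    # dist[i][j] = fewest edits turning the first i reference words
    # into the first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or exact match)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


print(word_error_rate("call my mother now", "call my brother now"))  # 0.25
```

Transcribing “call my mother now” as “call my brother now” is one substitution in four words, a WER of 0.25; a WER below 0.1 would mean fewer than one word in ten is wrong.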

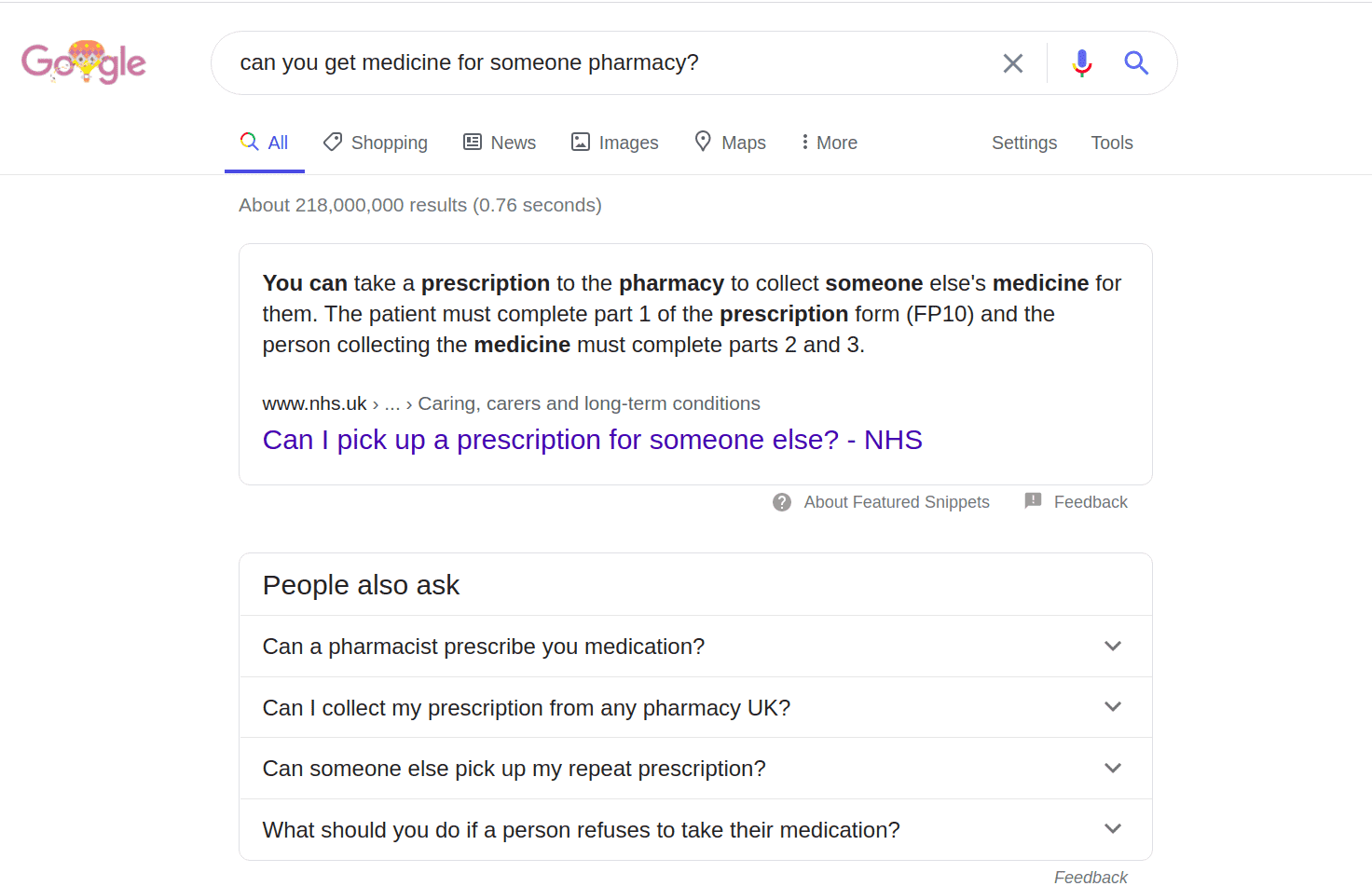

Search engines are remarkably adept at working out your search intent and returning relevant results, even if you misspell something, put words in an odd order, or type a keyword rather than asking a question. NLP is the magical fairy dust that makes this happen. Google combines a number of signals, including popular searches, context and your individual search history, to recognise what you’re asking beyond the exact words entered. So if you enter “can you get medicine for someone pharmacy?”, Google will recognise “for someone” as a question about whether you can pick up someone else’s prescription, rather than ignoring those words and simply returning the locations of nearby pharmacies.

Google uses natural language processing to make a smart interpretation of your input. Screenshot: Google
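Tolerance for spelling mistakes can be sketched with fuzzy string matching from Python’s standard `difflib` module. This is a toy illustration that assumes a small, hypothetical vocabulary of known search terms (`VOCABULARY` and `correct_query` are made-up names); real search engines rely on query logs, context and far more sophisticated models, and nothing here reflects how Google actually does it.

```python
import difflib

# Hypothetical vocabulary of known search terms, for illustration only.
VOCABULARY = ["pharmacy", "prescription", "medicine", "opening", "hours", "near", "me"]


def correct_query(query: str) -> str:
    """Replace each word with its closest known term, if one is similar enough."""
    corrected = []
    for word in query.lower().split():
        # Best match by difflib's similarity ratio; empty list if none reaches the cutoff.
        matches = difflib.get_close_matches(word, VOCABULARY, n=1, cutoff=0.75)
        corrected.append(matches[0] if matches else word)
    return " ".join(corrected)


print(correct_query("pharmcy opening hours"))  # pharmacy opening hours
```

The `cutoff` of 0.75 is an arbitrary choice: lower it and more words get “corrected”, including some that were never misspelled.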

Because NLP has been introduced into our lives so subtly, it might seem that the social good and social ills it can bring about are small in scale. However, its social impact can be far-reaching, in both positive and negative ways.

There’s no doubt that NLP already benefits society in several ways. On a day-to-day basis, it makes our life online easier by returning the search results we are actually looking for, without our needing to be search-term wizards. This is particularly useful for older people and the less technically able, but whatever your computer proficiency, it makes searching quick and convenient.

On a more impactful level, NLP can help red-flag emotional states that could otherwise lead to confrontational and violent incidents, such as gang violence, public riots or school shootings. Identifying and comparing tone, sentiment, contextual word use and individual user history through NLP might help prevent such incidents, either by allowing intervention before they occur or by improving readiness.5

In the same way that NLP can interpret highly charged emotional states in potentially violent situations, it could provide easy-to-access therapy for everyone, especially those who might not have the time or resources to see a human therapist. Indeed, many chat-based mental health interventions have been found to be better than no intervention at all, and NLP can help monitor an individual’s emotional state and respond in ways intended to improve it.6

At the same time, NLP does have its pitfalls.

A natural language processing model’s abilities depend largely on the dataset it is trained on, so if that dataset carries a demographic bias, an NLP program may well misrepresent certain individuals or groups. Arguably, human biases that already exist can find their way into an NLP model and then be amplified, with deeply harmful consequences. For example, Amazon built an experimental recruitment tool that was trained on CVs submitted to the company over a ten-year period, most of which came from men. The tool learned to penalise CVs containing the word “women’s”, and Amazon eventually abandoned it.

NLP can also be used to detect fake news, which is of obvious social benefit today. However, it can also create fake news that is not only very convincing but also very persuasive. As NLP gets better at analysing which content certain demographics respond to, it can more accurately mimic the style of real news and generate viral content that misinforms millions.

So what can we expect to see in the progress of Natural Language Processing in the next 10 years?

Natural language processing is a central part of artificial intelligence, which is itself a growing field and will continue to grow in the years to come. As such, major industry players in the AI space will look to create faster, more accurate and more authentic chatbots, smart assistants and machines.

Industry and corporate decision-making is on the cusp of dramatic change, as insights based not just on raw data but on real NLP-powered intelligence revolutionise the way companies interpret customer sentiment and shifts in the market. Instead of basing decisions on survey samples that are either large but generalised or specific but small, companies can analyse huge quantities of data and create products closely tuned to individual customers.

Over the past half-decade, NLP has gradually made its way into many of our everyday devices. You might have noticed, however, that things are picking up speed: more voice recognition in our cars, better predictive text and improved search results. If the last five years were slow, the next five are going to be a whirlwind, especially since we seem to have accepted this technology into our lives (largely) without complaint. It is hard to say exactly where NLP will turn up next, but it is on course to become far more prevalent, not less.

If you’d like to find out more about Natural Language Processing, here are some books that come highly recommended:

Introduction to Natural Language Processing, by Jacob Eisenstein. Read about the history of natural language processing and the basics of NLP.

Foundations of Statistical Natural Language Processing, by Christopher D. Manning and Hinrich Schütze


Senior lawyers should stop using generative AI to prepare their legal arguments! Or should they? A High Court judge in the UK has told senior lawyers off for their use of ChatGPT, because it invents citations to cases and laws that don’t exist!

Fast Data Science appeared at the Hamlyn Symposium event on “Healing Through Collaboration: Open-Source Software in Surgical, Biomedical and AI Technologies” Thomas Wood of Fast Data Science appeared in a panel at the Hamlyn Symposium workshop titled “Healing Through Collaboration: Open-Source Software in Surgical, Biomedical and AI Technologies”. This was at the Hamlyn Symposium on Medical Robotics on 27th June 2025 at the Royal Geographical Society in London.

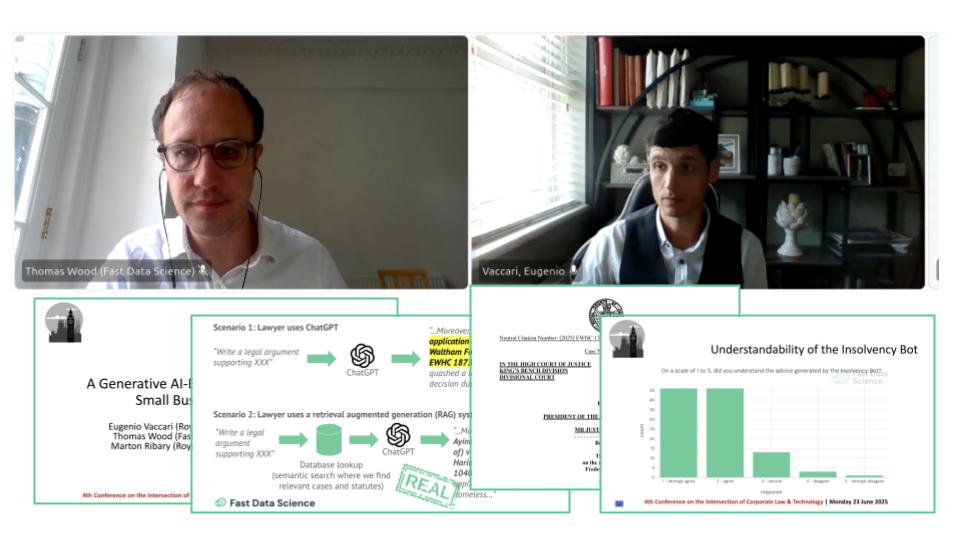

We presented the Insolvency Bot at the 4th Annual Conference on the Intersection of Corporate Law and Technology at Nottingham Trent University Dr Eugenio Vaccari of Royal Holloway University and Thomas Wood of Fast Data Science presented “A Generative AI-Based Legal Advice Tool for Small Businesses in Distress” at the 4th Annual Conference on the Intersection of Corporate Law and Technology at Nottingham Trent University

What we can do for you