Natural language processing, or NLP, is the area of artificial intelligence concerned with analysing human language. Natural language processing is an emerging field with a huge number of business applications. Large companies which have their own data science team often do not have NLP specialists in-house and may need to bring in NLP experts as consultants.

We need natural language processing when we are faced with unstructured text documents. An unstructured document could be something like:

According to Dr S pts with comorbidities are better treated with the combination treatment however she had seen some resistance among older pts. Treatment-naive pts responded positively.

(an example document from the pharmaceutical industry)

or

In particular the safety warnings were ignored by the crew. Due to an electronic fault the audible alarm was not functioning. The first officer’s performance was impacted by long working hours on the vessel. Furthermore the officer of the watch had fallen asleep. The investigation concluded that all the above factors contributed to the loss of the vessel.

(a maritime accident investigation report)

You can imagine that paragraphs like these are hard for a layperson to understand, although industry experts in the respective fields (pharmaceuticals and shipping in this case) will have no trouble making sense of the texts.

So this is where natural language processing comes in.

If a shipping insurance company were setting about building a database of incidents and their causes, or a pharmaceutical company wanted to analyse patterns in the feedback given by healthcare providers, the first thing we would have to do would be to convert the information in the text into a form that a computer can process.

That could take the form of an XML or JSON file such as the following:

    {
        ...
        "causes": {
            "PRIMARY": [
                "ELECTRONIC",
                "WORKING_HOURS"
            ],
            "SECONDARY": [
                "EQUIPMENT_MALFUNCTION"
            ]
        },
        ...
    }

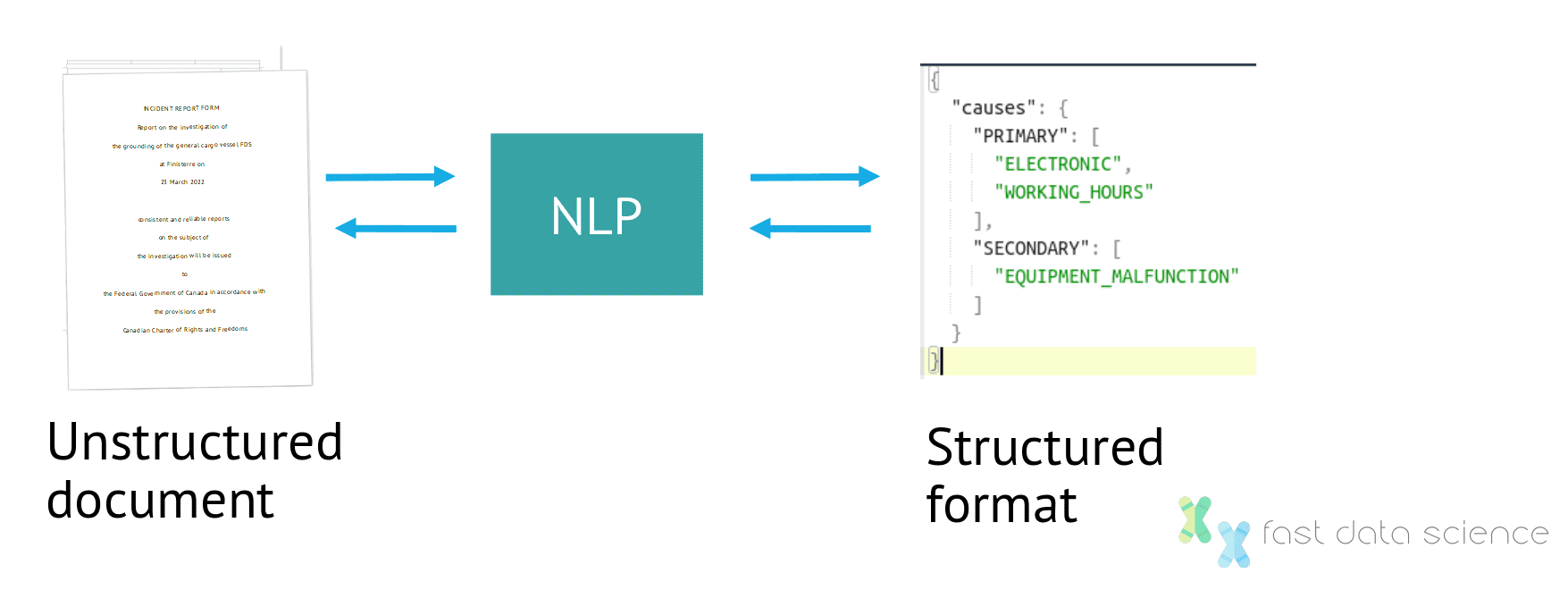

One purpose of natural language processing is to translate between the unstructured human-friendly representation of the information and the structured format.

So we can view NLP as a component sitting between these two representations. The task of translating a human-readable text into a structured format is called natural language understanding or NLU, while the opposite task of converting a structured list into a human-readable text is called natural language generation or NLG. Because humans can phrase the same information in a huge variety of ways, natural language understanding is the harder of the two tasks.
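As a rough illustration of the two directions, the sketch below maps a fragment of the accident report to a structured record (NLU) and back to a sentence (NLG). The phrase table `CAUSE_PHRASES` and the function names are assumptions made up for this example; a real system would need far broader coverage, or a trained model, to cope with varied phrasing.

```python
import json

# Hypothetical phrase-to-label table (an assumption for illustration only).
# The labels mirror those in the JSON example above.
CAUSE_PHRASES = {
    "ELECTRONIC": ["electronic fault"],
    "WORKING_HOURS": ["long working hours"],
}


def understand(report: str) -> dict:
    """Toy NLU: map free text to a structured record of cause labels."""
    text = report.lower()
    causes = [
        label
        for label, phrases in CAUSE_PHRASES.items()
        if any(phrase in text for phrase in phrases)
    ]
    return {"causes": causes}


def generate(record: dict) -> str:
    """Toy NLG: turn the structured record back into a readable sentence."""
    if not record["causes"]:
        return "No contributing factors were identified."
    readable = [label.replace("_", " ").lower() for label in record["causes"]]
    return "Contributing factors: " + ", ".join(readable) + "."


report = (
    "Due to an electronic fault the audible alarm was not functioning. "
    "The first officer’s performance was impacted by long working hours "
    "on the vessel."
)
record = understand(report)
print(json.dumps(record, indent=2))
print(generate(record))
```

Exact phrase matching like this breaks as soon as the wording changes, which is one way to see why the understanding direction is the harder one.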

Natural language processing translates between an unstructured and structured data format, such as a PDF of an accident report and a computer-readable representation of the relevant information.

Once the information has been converted to the structured format, it can be stored in databases and easily and quickly queried, retrieved, aggregated, and compared. Imagine trying to compare 100 accident reports or clinical trial summaries when they are all on your computer in PDF format! This shows how invaluable NLP can be in some fields.
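To make the benefit concrete, here is a minimal sketch of querying and aggregating records once they are in structured form. The three records and the helper names are hypothetical and exist only for this example; in practice the records would come from a database.

```python
from collections import Counter

# Hypothetical structured records, one per accident report (made-up data).
records = [
    {"id": "report-1", "causes": ["ELECTRONIC", "WORKING_HOURS"]},
    {"id": "report-2", "causes": ["WORKING_HOURS"]},
    {"id": "report-3", "causes": ["EQUIPMENT_MALFUNCTION", "ELECTRONIC"]},
]


def count_causes(records):
    """Aggregate: how often does each cause appear across all reports?"""
    counts = Counter()
    for record in records:
        counts.update(record["causes"])
    return counts


def reports_with(records, cause):
    """Query: which reports mention a given cause?"""
    return [record["id"] for record in records if cause in record["causes"]]


print(count_causes(records).most_common())
print(reports_with(records, "ELECTRONIC"))
```

Neither question could be answered this directly from a folder of PDFs.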

Fast Data Science - London

There is a huge range of applications of natural language processing. Some of them will be obvious to you and some less so.

For example, Google Translate, and other machine translation software, are a clear application of natural language processing. Computer scientists have been working on machine translation algorithms for more than 50 years, and in the past this was done using rule-based systems, whereas nowadays data-driven approaches such as neural networks are preferred.

Smartphone users will also be familiar with the virtual assistants that are shipped with every smartphone today. The virtual assistants combine two powerful areas of natural language processing: speech recognition and synthesis (also known as speech to text and text to speech), and natural language dialogue systems for managing the conversation.

In my line of work as a natural language processing consultant, I am brought into projects in pretty much any industry, and many of the tasks I’m faced with are completely new but nevertheless absolutely fascinating.

Just off the top of my head the last few projects I have been working on I have been

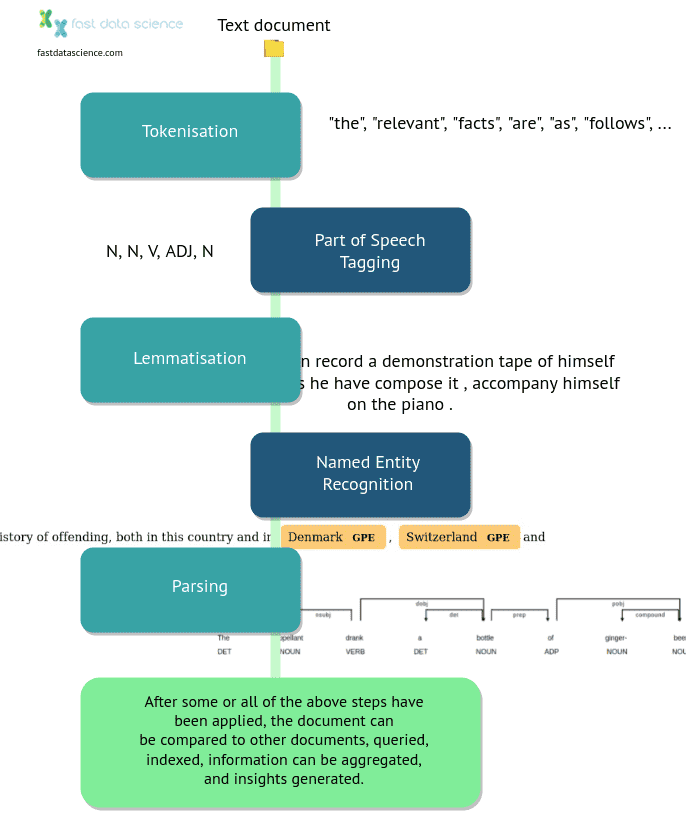

The traditional way of approaching natural language processing problems is to pass the text through a pipeline of components which each independently operate on the output of the previous component.

The first component in a pipeline is usually a tokeniser. After a PDF or Word document has been converted into plain text, it is split up into units of words and punctuation marks called tokens. For English text, tokenisation is relatively straightforward, but for East Asian languages it can be tricky because the boundary of a word is not always a well-defined concept.
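For English, a single regular expression goes a long way. The following is a minimal sketch, not a production tokeniser: the pattern and the name `tokenise` are assumptions for this example, and it only treats the straight apostrophe and hyphen as word-internal characters.

```python
import re

# A word is a run of word characters, optionally joined by hyphens or
# straight apostrophes; anything else that is not whitespace (punctuation)
# becomes a token of its own.
TOKEN_PATTERN = re.compile(r"\w+(?:[-']\w+)*|[^\w\s]")


def tokenise(text):
    """Split plain text into word and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


print(tokenise("Treatment-naive pts responded positively."))
```

A pattern like this relies on whitespace and punctuation to separate words, which is why it does not carry over to languages written without spaces between words.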

The tokens can then be passed to a series of components which annotate them with extra information, such as

The traditional concept of an NLP pipeline. Steps are applied in order such as tokenisation, lemmatisation, and so on, and an unstructured text document is gradually transformed into a structured format.

In recent years huge strides have been made in the field of natural language processing and for many applications, the traditional NLP pipeline is a technique of the past. One important innovation is the invention of the Transformer, which is the latest neural network-based approach to natural language processing.

A transformer is essentially a huge neural network optimised to handle sequences, which makes it ideal for processing text or sound signals. At its heart, a transformer converts a sequence of tokens into a sequence of vectors in a very high-dimensional space, and these vectors can be used to perform nearly any NLP task, from grammatical sentence parsing to question answering and information retrieval.

The best-known transformer model is BERT, although at the time of writing the latest and largest transformer model was GPT-3.

Transformers are so complex and hard to train that the average user is no longer able to custom build and train their own transformers on the fly as they would with an NLP pipeline like the one I described above. The simplest approach is to use a pre-built library from a provider such as Hugging Face or OpenAI and use the transformer models supplied out of the box.

However, for most of the natural language processing consulting projects which I have been working on, transformers have not been relevant. In many cases, domain understanding and a willingness to learn about the industry have been more important than a huge deep learning neural network or gigabytes of data.

For example, I have been working on a project to quantify the risk of a clinical trial, and the enormous amount of data that a transformer would need was simply not available. My only option was to gather any domain knowledge, speak to experts in the field, and attempt to build a simple machine learning model which operated on tokens.
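To give a flavour of what a simple token-based model can look like, here is a minimal sketch of a multinomial Naive Bayes classifier over token counts. It is not the model used in the project described above: the choice of Naive Bayes, the class name, the `HIGH`/`LOW` labels, and the four tiny training documents are all assumptions invented for illustration.

```python
import math
from collections import Counter, defaultdict


class NaiveBayes:
    """Multinomial Naive Bayes over token counts with add-one smoothing."""

    def fit(self, docs, labels):
        self.class_counts = Counter(labels)       # documents per class
        self.token_counts = defaultdict(Counter)  # token counts per class
        self.vocab = set()
        for tokens, label in zip(docs, labels):
            self.token_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, tokens):
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            # Start from the log prior of the class.
            score = math.log(n_docs / total_docs)
            total_tokens = sum(self.token_counts[label].values())
            for token in tokens:
                if token not in self.vocab:
                    continue  # ignore tokens never seen in training
                count = self.token_counts[label][token]
                score += math.log((count + 1) / (total_tokens + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Made-up, already tokenised training documents (illustration only).
train_docs = [
    ["small", "sample", "no", "control", "group"],
    ["unclear", "endpoint", "small", "sample"],
    ["randomised", "large", "sample", "control", "group"],
    ["randomised", "clear", "endpoint", "blinded"],
]
train_labels = ["HIGH", "HIGH", "LOW", "LOW"]

model = NaiveBayes().fit(train_docs, train_labels)
print(model.predict(["small", "unclear", "endpoint"]))
print(model.predict(["randomised", "blinded"]))
```

A model of this kind can be trained on a handful of documents and every weight can be inspected, which suits projects where data is scarce and domain experts need to sanity-check the result.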

If you are interested in getting started in natural language processing, or know something about the area but want to become an expert, there are a number of sources that I would recommend for you.

I hope that you have been able to process the information in this article and that you have a better understanding of what natural language processing is and what NLP is used for. If you have a large set of unstructured data in any industry (industries such as pharmaceutical, legal, or insurance have large amounts of text data), and you need to hire an NLP expert or NLP consultant to help you make sense of the data, please don’t hesitate to contact us.
