You may have seen the news about Facebook’s new futuristic chatbot trained for empathy on 1.5 billion Reddit posts.

You might be wondering: how is it possible to make a computer program converse with humans in a natural way? Where are chatbots going, and what does the future hold for them?

Natural language dialogue systems, also known as virtual assistants or chatbots, are an interesting area of artificial intelligence, and a field with a long history.

There are several challenges we encounter when making a chatbot. We need to:

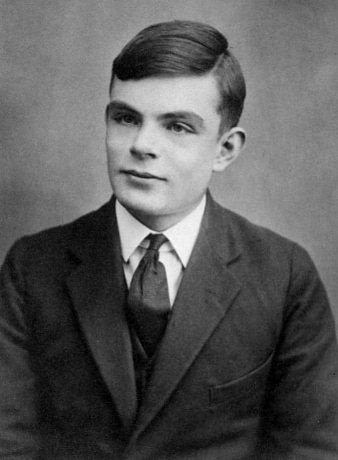

Back in 1950, Alan Turing proposed that we can consider a computer as being capable of thinking like a human being, if we can interrogate it and we are unable to distinguish its answers from the answers of a real human. This thought experiment is now called the Turing Test. Of course whether a bot would pass the Turing Test depends a lot on the kind of questions we ask: if we limit ourselves to a small domain then bots tend to perform quite well.

Alan Turing, inventor of the Turing Test among other things. Source: Wikipedia

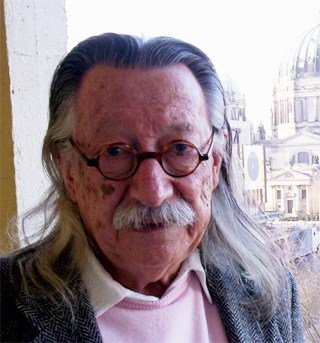

Joseph Weizenbaum, inventor of ELIZA. Source: Wikipedia

In the 1960s Joseph Weizenbaum created a program called ELIZA, a psychotherapist bot which re-phrased the user’s inputs by means of clever rules:

User: You are like my father in some ways.

ELIZA: What resemblance do you see?

User: You are not very aggressive but I think you don’t want me to notice that.

ELIZA: What makes you think I am not aggressive?

Example conversation with ELIZA

Clearly, even if ELIZA managed to fluke the Turing Test, there is no human-like intelligence present. ELIZA has no idea of the meaning of “aggressive”, and would not understand that “belligerent” is a synonym. If you told ELIZA “You are potato”, she would probably blindly respond with “Why do you think I am potato?”
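To make the idea of rephrasing rules concrete, here is a minimal sketch in Python. It is not Weizenbaum's original script: the patterns, the response templates and the `REFLECTIONS` pronoun table are my own assumptions, chosen only to reproduce the flavour of the conversation above.

```python
import re

# Hypothetical pronoun swaps used to turn the user's words back on them.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my", "are": "am"}

# Hypothetical (pattern, response template) rules, tried in order.
RULES = [
    (re.compile(r"you are like (.+)", re.I), "What resemblance do you see?"),
    (re.compile(r"you are (.+)", re.I), "What makes you think I am {0}?"),
    (re.compile(r"i feel (.+)", re.I), "Why do you feel {0}?"),
]


def reflect(fragment):
    """Swap first- and second-person words in the captured fragment."""
    return " ".join(REFLECTIONS.get(w, w) for w in fragment.lower().split())


def eliza_reply(utterance):
    """Return the response of the first rule whose pattern matches."""
    text = utterance.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return "Please tell me more."


print(eliza_reply("You are like my father in some ways."))  # What resemblance do you see?
print(eliza_reply("You are potato"))  # What makes you think I am potato?
```

The program never looks up what any word means. It only copies fragments of the input into a template, which is why nonsense input produces an equally confident reply.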

Fast forward to the 2010s and chatbots were already becoming a common solution for large organisations to cut costs in call centre staff. If you visit the website of any large airline, retailer or bank, you are often greeted by a little chat window where an avatar offers to guide you through the site.

These bots have two things in common: they operate within a narrow domain, and they are normally rule based, which means that a human has carefully crafted a set of rules to determine what response the bot should give in what context. In short, it is the same trick that ELIZA used, but with more smoke and mirrors.

For example, if you give the input “I want to open an account”, a banking bot will probably be listening for the keywords “open” + “account”, and will trigger the corresponding pre-written response. Normally there is a cascade of rules that the bot attempts to match, going from strict to broad. So first the bot will check for “open” + “account” and other two-word triggers, then simply “account”, and then fall back to a catch-all response such as “I’m sorry, I didn’t quite understand what you’re looking for.”
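The cascade described above can be sketched in a few lines of Python. The rule list and the responses are hypothetical, and a real product would have many more rules, but the strict-to-broad ordering and the catch-all fallback are the essential parts.

```python
import re

# Hypothetical rules, ordered from strict (two keywords) to broad (one keyword).
RULES = [
    ({"open", "account"}, "Sure, I can help you open an account."),
    ({"close", "account"}, "I can help you close your account."),
    ({"account"}, "What would you like to do with your account?"),
]
FALLBACK = "I'm sorry, I didn't quite understand what you're looking for."


def respond(utterance):
    """Return the response of the first rule whose keywords all appear."""
    tokens = set(re.findall(r"[a-z']+", utterance.lower()))
    for keywords, response in RULES:
        if keywords <= tokens:  # every keyword is present in the input
            return response
    return FALLBACK


print(respond("I want to open an account"))  # Sure, I can help you open an account.
print(respond("Tell me about my account"))   # What would you like to do with your account?
```

Because the rules are tried in order, putting the single keyword `account` first would shadow the more specific two-keyword rules, so the ordering matters as much as the keywords themselves.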


Retail website chatbots perform acceptably for the purpose for which they were designed, and can even cope with maintaining dialogue context, pronouns such as it/he/she, and rudimentary small talk. However they can be easily thrown by phrases or situations that they haven’t been designed for, and it’s labour intensive to develop them.

They are nearly always designed with a chat handover: when the bot fails to understand the input, the user is handed over to a human operator.

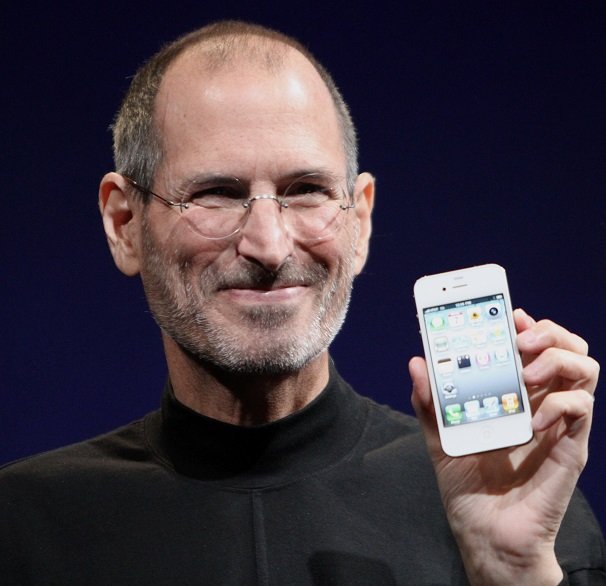

Steve Jobs, CEO of Apple, demonstrating an iPhone. Source: Wikipedia

One development which brought chatbots to the public consciousness more than any other in the last ten years was Apple’s introduction of Siri to the iPhone in 2011. Siri is a program that allows you to say things like “Set my alarm for 5 am tomorrow” instead of doing this via the touchscreen.

To the best of my knowledge Siri was no more sophisticated than the bots I have described above, but the idea of combining a bot with voice interaction and putting it on a smartphone was very novel at the time, and brought a storm of publicity to the previously niche field of dialogue systems.

Siri sparked an arms race, with other electronics companies, mobile phone manufacturers and Silicon Valley giants rushing to acquire or develop their own voice-controlled virtual assistants. The next few years saw the release of Microsoft’s Cortana, Samsung’s Bixby, Amazon’s Alexa and Google Now.

Now it is quite easy to get started making your own chatbot. For example, Google, Microsoft and Amazon all offer free options for building a bot.

We are starting to move away from hand-developed rules. Modern bot design interfaces ask you to enter a set of sample phrases that you want to recognise, and they use machine learning to generalise these into a pattern, so that a new unseen utterance can be correctly categorised.
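As a rough illustration of learning from sample phrases rather than hand-written rules, here is a tiny Naive Bayes intent classifier in plain Python. I don't know which algorithms the commercial platforms use internally, so treat this as a stand-in technique; the intents and sample phrases are invented for the example.

```python
import math
import re
from collections import Counter, defaultdict

# Hypothetical sample phrases, as a bot designer might enter them.
SAMPLES = {
    "open_account": ["I want to open an account",
                     "please set up a new account",
                     "how do I open a savings account"],
    "contact_support": ["I need to talk to support",
                        "please connect me to an agent",
                        "I need help from a human"],
}


def tokenize(text):
    return re.findall(r"[a-z']+", text.lower())


def train(samples):
    """Count how often each word occurs in the phrases of each intent."""
    word_counts = defaultdict(Counter)
    for intent, phrases in samples.items():
        for phrase in phrases:
            word_counts[intent].update(tokenize(phrase))
    vocab = {w for counts in word_counts.values() for w in counts}
    return word_counts, vocab


def classify(utterance, word_counts, vocab):
    """Pick the intent with the highest smoothed log-likelihood."""
    scores = {}
    for intent, counts in word_counts.items():
        total = sum(counts.values())
        # Add-one smoothing; equal priors assumed; unknown words are skipped.
        scores[intent] = sum(
            math.log((counts[w] + 1) / (total + len(vocab)))
            for w in tokenize(utterance) if w in vocab
        )
    return max(scores, key=scores.get)


word_counts, vocab = train(SAMPLES)
print(classify("can I open a new account", word_counts, vocab))  # open_account
```

The test utterance does not appear among the samples, yet it is categorised correctly because it shares enough words with the `open_account` phrases. Nobody had to write a rule for it.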

In recent years we’ve seen some exciting advances in deep learning for natural language processing.

For example, we no longer need to listen for keywords in an utterance in order to guess the user’s intent.

![Word embedding example](https://fastdatascience.com/images/word2vec-dialog-1.png)

Example of how words are all assigned vector locations in space by a word embedding. Here I am showing the words in 3D space so that we can understand the image, but in practice we would use more dimensions. Note that in this image the past tense verbs are clustered together and the present tense verbs are also close together, and there is a relationship between the two groups.

In 2003 Yoshua Bengio developed the idea of word embeddings. Every word in the English language is assigned a vector in a multi-dimensional space. For example, “want” and “desire” mean nearly the same thing, so their word vectors would be close together in the space.

If you use word embeddings then you can start to calculate distances between words and move towards a probability that a user wants to open an account, or contact support.
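Here is a toy sketch of that calculation in Python. The three-dimensional vectors are made up by hand purely for illustration (real embeddings are learned from large amounts of text), and turning similarities into probabilities with a softmax is one simple choice among many, not the only way to do it.

```python
import math

# Hypothetical 3-dimensional word vectors, invented for illustration.
# Real embeddings are learned from text and have far more dimensions.
EMBEDDINGS = {
    "want":    [0.9, 0.1, 0.0],
    "desire":  [0.8, 0.2, 0.1],
    "open":    [0.1, 0.9, 0.1],
    "account": [0.0, 0.8, 0.3],
    "contact": [0.1, 0.1, 0.9],
    "support": [0.0, 0.2, 0.8],
}

# Hypothetical intents, each described by one example phrase.
INTENT_EXAMPLES = {
    "open_account": "want open account",
    "contact_support": "want contact support",
}


def cosine(a, b):
    """Cosine similarity: close to 1.0 when two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def sentence_vector(text):
    """Average the vectors of the known words in the text."""
    vectors = [EMBEDDINGS[w] for w in text.lower().split() if w in EMBEDDINGS]
    if not vectors:
        return [0.0, 0.0, 0.0]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def intent_probabilities(utterance, temperature=0.1):
    """Softmax over cosine similarities to each intent's example phrase."""
    u = sentence_vector(utterance)
    exps = {
        intent: math.exp(cosine(u, sentence_vector(example)) / temperature)
        for intent, example in INTENT_EXAMPLES.items()
    }
    total = sum(exps.values())
    return {intent: e / total for intent, e in exps.items()}


print(cosine(EMBEDDINGS["want"], EMBEDDINGS["desire"]))
print(intent_probabilities("I desire an account"))
```

Note that “desire” does not appear in either intent's example phrase. The utterance still lands closest to `open_account` because its words sit near “want” and “account” in the vector space, which is exactly what keyword matching cannot do.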

The next step up from word embeddings is a technology called BERT, developed in 2018. BERT is a neural network design that allows us to calculate a word vector taking into account the entire sentence, so that “bank” in the sense of a financial institution and “bank” in the sense of a riverbank would have different vectors. With BERT it’s also possible to calculate a vector for an entire sentence.

Currently in all the dialogue system software that I’ve tried, you can upload a list of sample utterances to train a model, and you manually define what values you want your bot to listen for (destination cities, account types, product names, etc). You then manually define the desired behaviour if the user utters the right words.

What I would like to see on the horizon is machine learning being used to improve chatbots from all angles. Some examples of the kind of ideas that researchers are experimenting with at the moment are:

If you think I’ve missed anything important please add it in the comments below.

Of course it takes some time for any of these ideas to become commercially viable. But we can expect to see some exciting leaps in the next decade as the field becomes more democratised and more accessible to non-programmers.
